Surrogate Model Based Hyperparameter Tuning for Deep Learning with SPOT

Paper and Code

May 30, 2021

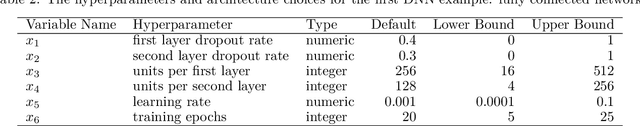

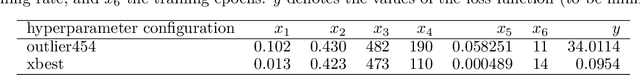

A surrogate model based hyperparameter tuning approach for deep learning is presented. This article demonstrates how the architecture-level parameters (hyperparameters) of deep learning models that were implemented in Keras/tensorflow can be optimized. The implementation of the tuning procedure is 100 % based on R, the software environment for statistical computing. With a few lines of code, existing R packages (tfruns and SPOT) can be combined to perform hyperparameter tuning. An elementary hyperparameter tuning task (neural network and the MNIST data) is used to exemplify this approach.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge