Surpassing legacy approaches and human intelligence with hybrid single- and multi-objective Reinforcement Learning-based optimization and interpretable AI to enable the economic operation of the US nuclear fleet

Paper and Code

Feb 16, 2024

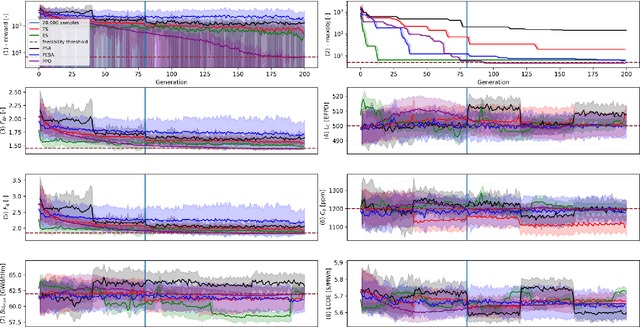

The nuclear sector is the primary source of carbon-free energy in the United States. Nevertheless, existing nuclear power plants face the threat of early shutdown because they cannot compete economically with alternatives such as gas power plants. Reducing the fuel cycle cost by optimizing core loading patterns is one way to address this lack of competitiveness. However, this optimization task involves multiple objectives and constraints, and it yields far too many candidate solutions to evaluate exhaustively. Although various nuclear utilities and vendors use stochastic optimization (SO) methods for fuel cycle reload design, manual design remains the preferred approach. To advance the state of the art in core reload patterns, we have developed methods based on Deep Reinforcement Learning (RL). Previous research laid the groundwork for this approach and demonstrated its ability to discover high-quality patterns within a reasonable timeframe. However, its utility in a single-objective setting has yet to be demonstrated through comparison against legacy methods. Moreover, although RL methods have shown superiority in multi-objective settings, they have not yet been applied effectively to the competitiveness issue. In this paper, we rigorously compare our RL-based approach against the most commonly used SO-based methods, namely Genetic Algorithm (GA), Simulated Annealing (SA), and Tabu Search (TS). We then introduce a new hybrid paradigm to devise innovative designs, resulting in economic gains ranging from 2.8 to 3.3 million dollars per year per plant. This development leverages interpretable AI, which improves algorithmic efficiency by making black-box optimizations interpretable. Future work will focus on scaling this method to address a broader range of core designs.
