Style-Restricted GAN: Multi-Modal Translation with Style Restriction Using Generative Adversarial Networks

Paper and Code

May 17, 2021

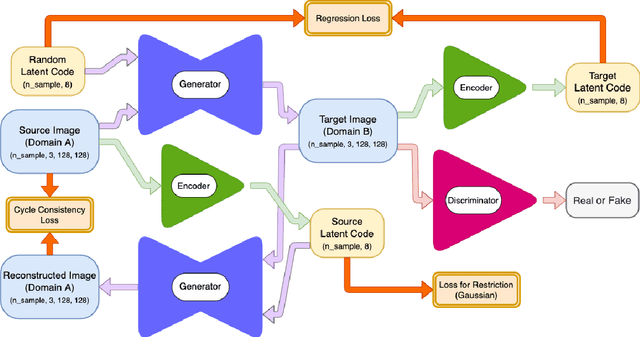

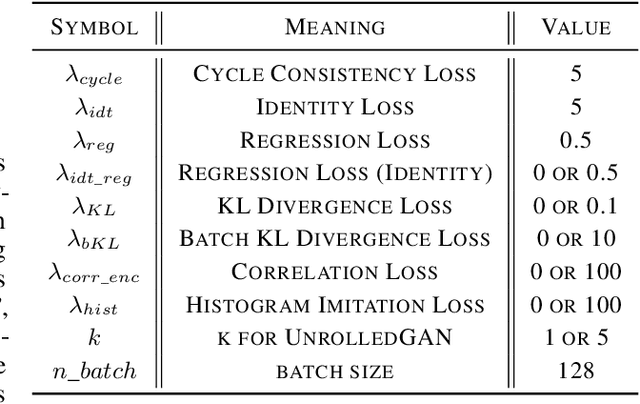

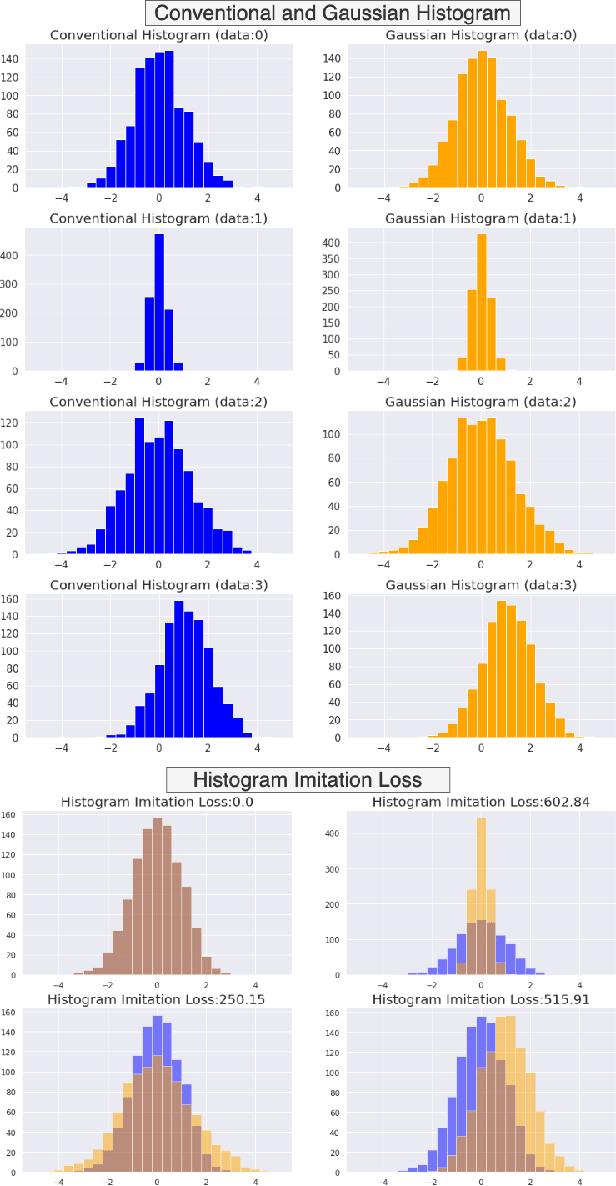

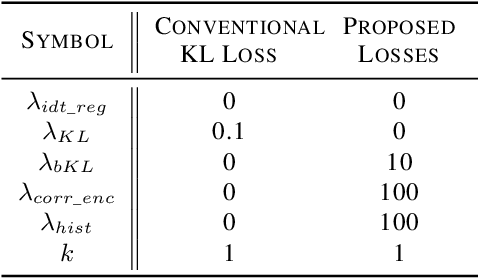

Unpaired image-to-image translation using Generative Adversarial Networks (GANs) has been successful in converting images among multiple domains. Moreover, recent studies have shown a way to diversify the outputs of the generator. However, since there are no restrictions on how the generator diversifies the results, it is likely to translate some unexpected features. In this paper, we propose Style-Restricted GAN (SRGAN), a novel approach to translating input images into different domains with different styles while changing exclusively the class-related features. Additionally, instead of the KL divergence loss, we adopt three new losses to restrict the distribution of the encoded features: batch KL divergence loss, correlation loss, and histogram imitation loss. The study reports quantitative as well as qualitative results using Precision, Recall, Density, and Coverage. The three proposed losses increase the level of diversity compared to the conventional KL loss. In particular, SRGAN is found to translate with higher diversity and without changing the class-unrelated features on the CelebA face dataset. Our implementation is available at https://github.com/shinshoji01/Style-Restricted_GAN.
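The abstract names the three losses but does not define them, so the following is only a rough sketch of what two of them might look like, not the authors' formulation. It assumes that the batch KL divergence loss fits a per-dimension Gaussian to the encoded features of a whole batch and compares it to a standard normal, and that the correlation loss penalizes the pairwise correlation between feature dimensions. The function names `batch_kl_loss` and `correlation_loss` are hypothetical, the code uses plain Python lists rather than a deep learning framework, and the histogram imitation loss is left out because its form cannot be inferred from the abstract.

```python
import math


def _column_stats(batch):
    """Per-dimension mean and (biased) variance across the batch."""
    n, dims = len(batch), len(batch[0])
    means = [sum(row[j] for row in batch) / n for j in range(dims)]
    variances = [
        sum((row[j] - means[j]) ** 2 for row in batch) / n for j in range(dims)
    ]
    return means, variances


def batch_kl_loss(batch, eps=1e-8):
    """Assumed form: KL( N(mean_j, var_j) || N(0, 1) ), summed over dimensions j.

    The statistics are taken over the batch, not predicted per sample.
    """
    means, variances = _column_stats(batch)
    return 0.5 * sum(
        (v + eps) + m * m - 1.0 - math.log(v + eps)
        for m, v in zip(means, variances)
    )


def correlation_loss(batch, eps=1e-8):
    """Assumed form: mean absolute Pearson correlation over all dimension pairs.

    Requires at least two feature dimensions.
    """
    n, dims = len(batch), len(batch[0])
    means, variances = _column_stats(batch)
    stds = [math.sqrt(v) + eps for v in variances]
    # Standardize every dimension to zero mean and unit variance.
    z = [[(row[j] - means[j]) / stds[j] for j in range(dims)] for row in batch]
    total = 0.0
    for i in range(dims):
        for j in range(i + 1, dims):
            total += abs(sum(r[i] * r[j] for r in z) / n)
    return total / (dims * (dims - 1) / 2)
```

Under these assumptions, both values are close to zero when the encoded features of a batch look like independent standard-normal samples. The first grows when a dimension's mean or variance drifts, and the second grows when dimensions become correlated.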
