Stopping Criterion Design for Recursive Bayesian Classification: Analysis and Decision Geometry

Paper and Code

Jul 30, 2020

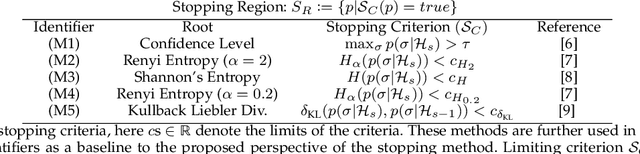

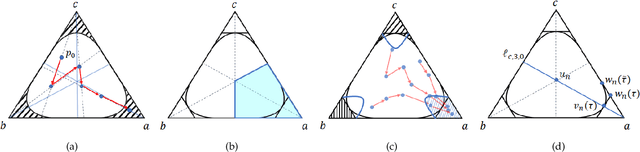

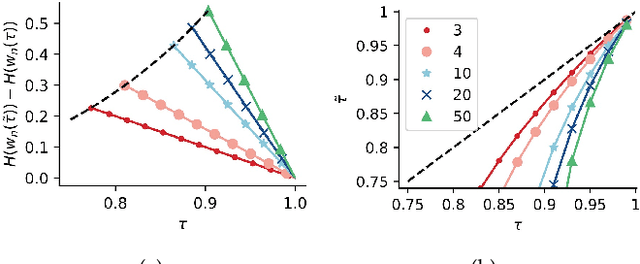

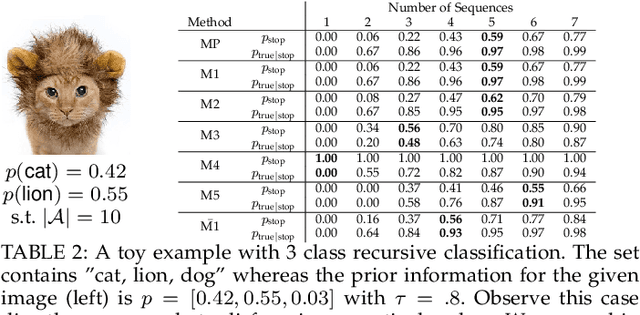

Systems that are based on recursive Bayesian updates for classification limit the cost of evidence collection through certain stopping/termination criteria and accordingly enforce decision making. Conventionally, two termination criteria based on pre-defined thresholds over (i) the maximum of the state posterior distribution; and (ii) the state posterior uncertainty are commonly used. In this paper, we propose a geometric interpretation over the state posterior progression and accordingly we provide a point-by-point analysis over the disadvantages of using such conventional termination criteria. For example, through the proposed geometric interpretation we show that confidence thresholds defined over maximum of the state posteriors suffer from stiffness that results in unnecessary evidence collection whereas uncertainty based thresholding methods are fragile to number of categories and terminate prematurely if some state candidates are already discovered to be unfavorable. Moreover, both types of termination methods neglect the evolution of posterior updates. We then propose a new stopping/termination criterion with a geometrical insight to overcome the limitations of these conventional methods and provide a comparison in terms of decision accuracy and speed. We validate our claims using simulations and using real experimental data obtained through a brain computer interfaced typing system.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge