Stochastic gradient descent on Riemannian manifolds


Nov 19, 2013

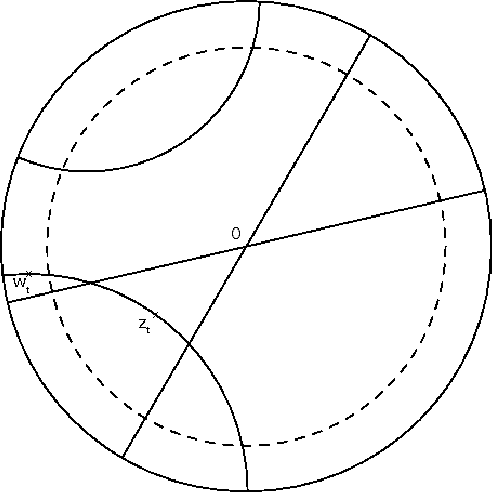

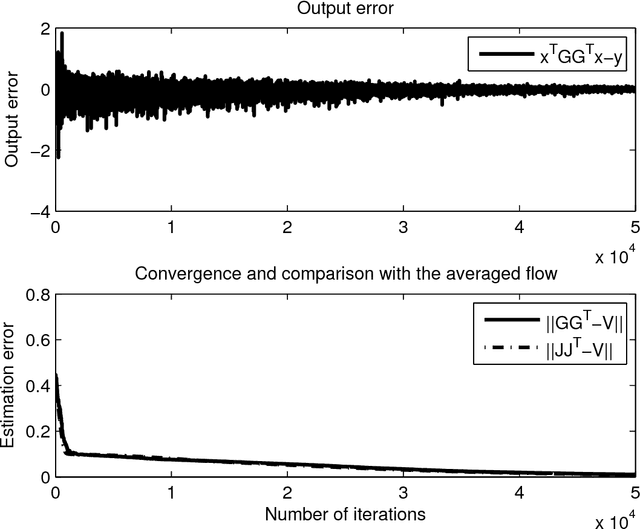

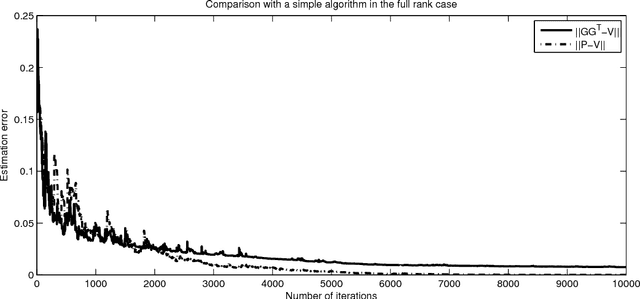

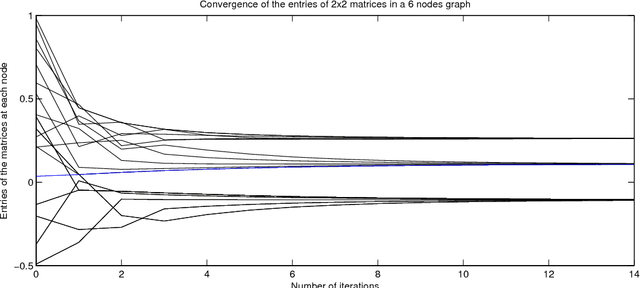

Stochastic gradient descent is a simple approach to finding local minima of a cost function whose evaluations are corrupted by noise. In this paper, we develop a procedure extending stochastic gradient descent algorithms to the case where the function is defined on a Riemannian manifold. We prove that, as in the Euclidean case, the gradient descent algorithm converges to a critical point of the cost function. The algorithm has numerous potential applications and is illustrated here by four examples. In particular, a novel gossip algorithm on the set of covariance matrices is derived and tested numerically.
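To make the idea concrete, here is a minimal sketch of stochastic gradient descent on a simple Riemannian manifold, the unit sphere. It is not taken from the paper: the toy cost (expected squared distance to noisy samples), the function names `tangent_project`, `exp_map`, and `riemannian_sgd`, and the step size schedule `a / (t + 1)` are all assumptions chosen for illustration. Each iteration draws a noisy sample, projects the Euclidean gradient onto the tangent space at the current point to get a Riemannian gradient, and then moves along the geodesic given by the sphere's exponential map.

```python
import math
import random


def dot(u, v):
    """Euclidean inner product of two vectors given as lists."""
    return sum(a * b for a, b in zip(u, v))


def tangent_project(w, g):
    """Project an ambient vector g onto the tangent space of the unit sphere at w."""
    c = dot(w, g)
    return [gi - c * wi for gi, wi in zip(g, w)]


def exp_map(w, v):
    """Exponential map of the unit sphere: follow the geodesic from w along tangent v."""
    n = math.sqrt(dot(v, v))
    if n < 1e-12:
        return list(w)
    out = [math.cos(n) * wi + math.sin(n) * vi / n for wi, vi in zip(w, v)]
    # Renormalize to counter floating-point drift away from the sphere.
    s = math.sqrt(dot(out, out))
    return [x / s for x in out]


def riemannian_sgd(sample, w0, steps=5000, a=1.0):
    """Minimize E[0.5 * ||w - z||^2] over unit vectors w, using noisy samples z.

    Toy problem (an assumption for illustration, not the paper's setup).
    """
    w = list(w0)
    for t in range(steps):
        z = sample()
        # Euclidean gradient of 0.5 * ||w - z||^2 with respect to w.
        euclid_grad = [wi - zi for wi, zi in zip(w, z)]
        # Riemannian gradient: its component tangent to the sphere at w.
        rgrad = tangent_project(w, euclid_grad)
        # Decreasing steps: the sum diverges, the sum of squares converges.
        gamma = a / (t + 1)
        w = exp_map(w, [-gamma * g for g in rgrad])
    return w


if __name__ == "__main__":
    rng = random.Random(0)
    mu = [0.0, 0.0, 1.0]  # hypothetical "true" direction
    noisy = lambda: [m + rng.gauss(0.0, 0.3) for m in mu]
    w = riemannian_sgd(noisy, [1.0, 0.0, 0.0])
    print("estimate:", w, "alignment with mu:", dot(w, mu))
```

In this sketch the iterate stays on the sphere at every step, and for this toy cost the critical points are the two unit vectors aligned with the mean of the samples. On other manifolds the projection and exponential map would be replaced by that manifold's own gradient and geodesic (or a cheaper approximation of it).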

* IEEE Transactions on Automatic Control, Vol. 58 (9), pages 2217-2229, Sept. 2013
* A slightly shorter version has been published in IEEE Transactions on Automatic Control
