Stein Variational Gradient Descent: many-particle and long-time asymptotics


Feb 25, 2021

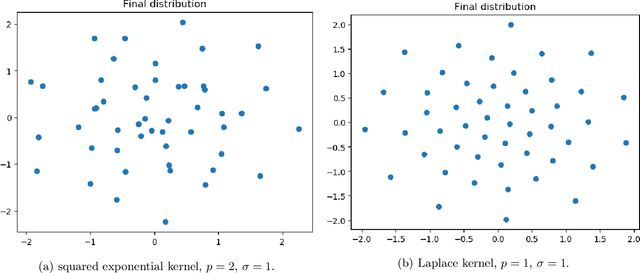

Stein variational gradient descent (SVGD) refers to a class of methods for Bayesian inference based on interacting particle systems. In this paper, we consider the originally proposed deterministic dynamics as well as a stochastic variant, each of which represents one of the two main paradigms in Bayesian computational statistics: variational inference and Markov chain Monte Carlo. As it turns out, these are tightly linked through a correspondence between gradient flow structures and large-deviation principles rooted in statistical physics. To expose this relationship, we develop the cotangent space construction for the Stein geometry, prove its basic properties, and determine the large-deviation functional governing the many-particle limit for the empirical measure. Moreover, we identify the Stein-Fisher information (or kernelised Stein discrepancy) as its leading order contribution in the long-time and many-particle regime in the sense of $\Gamma$-convergence, shedding some light on the finite-particle properties of SVGD. Finally, we establish a comparison principle between the Stein-Fisher information and RKHS-norms that might be of independent interest.
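For readers unfamiliar with the deterministic dynamics mentioned above, the following is a minimal NumPy sketch of one common form of the SVGD particle update. It is an illustration, not the authors' code. The Gaussian (RBF) kernel, the fixed bandwidth `h`, the step size, and the function names `rbf_kernel`, `svgd_step`, and `run_svgd` are all assumptions made for this example.

```python
import numpy as np


def rbf_kernel(x, h):
    """Gaussian kernel matrix and its gradient (assumed kernel choice).

    x has shape (n, d). Returns k with k[j, i] = k(x_j, x_i) and
    grad_k with grad_k[j, i] = gradient of k(x_j, x_i) w.r.t. x_j.
    """
    diff = x[:, None, :] - x[None, :, :]          # diff[j, i] = x_j - x_i
    sq_dist = np.sum(diff ** 2, axis=-1)
    k = np.exp(-sq_dist / (2.0 * h ** 2))
    grad_k = -diff * k[:, :, None] / h ** 2
    return k, grad_k


def svgd_step(x, grad_log_p, step, h):
    """One deterministic SVGD update of all particles.

    phi(x_i) = (1/n) * sum_j [ k(x_j, x_i) * grad_log_p(x_j)
                               + grad_{x_j} k(x_j, x_i) ]
    The first term drives particles toward high density, the second
    acts as a repulsion that keeps them spread out.
    """
    n = x.shape[0]
    k, grad_k = rbf_kernel(x, h)
    score = grad_log_p(x)                          # shape (n, d)
    phi = (k.T @ score + grad_k.sum(axis=0)) / n
    return x + step * phi


def run_svgd(x0, grad_log_p, n_steps=500, step=0.1, h=1.0):
    """Iterate svgd_step from the initial particles x0 (hypothetical helper)."""
    x = np.array(x0, dtype=float)
    for _ in range(n_steps):
        x = svgd_step(x, grad_log_p, step, h)
    return x


if __name__ == "__main__":
    # Toy target: standard Gaussian, whose score is grad log p(x) = -x.
    rng = np.random.default_rng(0)
    start = rng.normal(loc=5.0, scale=1.0, size=(50, 1))
    final = run_svgd(start, lambda x: -x)
    print("initial mean:", start.mean(), "final mean:", final.mean())
```

The stochastic variant discussed in the abstract adds a noise term to these dynamics; it is not covered by this sketch.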
