State-of-the-art Techniques in Deep Edge Intelligence

Paper and Code

Aug 04, 2020

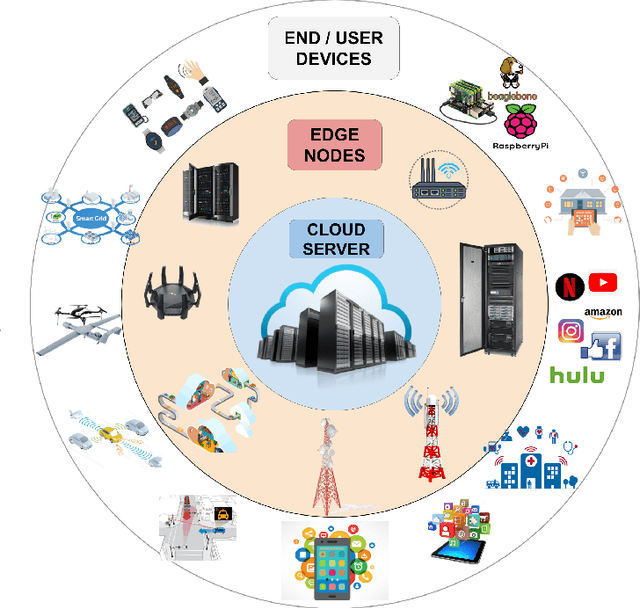

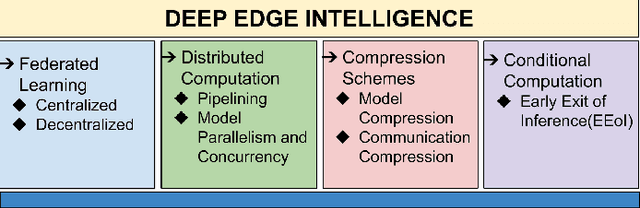

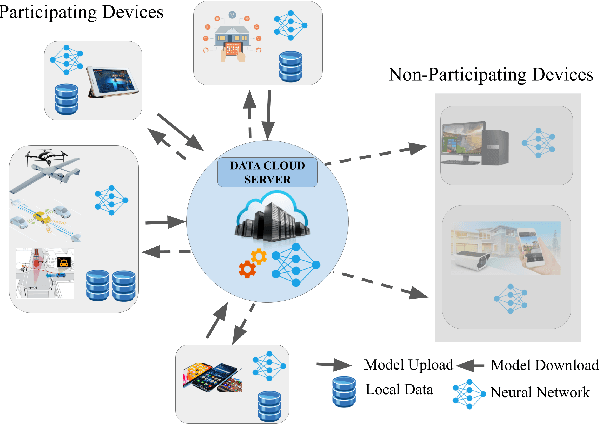

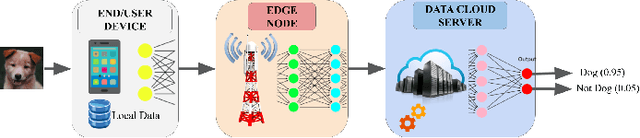

The potential held by the gargantuan volumes of data generated across networks worldwide has been unlocked by machine learning techniques and, more recently, Deep Learning. The advantages offered by the latter have rapidly made it a framework of choice for various applications. However, the centralization of computational resources and the need for data aggregation have long been limiting factors in the democratization of Deep Learning applications. Edge Computing is an emerging paradigm that aims to utilize the hitherto untapped processing resources available at the network periphery. Edge Intelligence (EI) has quickly emerged as a powerful alternative that enables learning using the concepts of Edge Computing. Deep Learning-based Edge Intelligence, or Deep Edge Intelligence (DEI), lies in this rapidly evolving domain. In this article, we provide an overview of the major constraints in operationalizing DEI. We consolidate the major research avenues in DEI under Federated Learning, Distributed Computation, Compression Schemes, and Conditional Computation. We also present some of the prevalent challenges and highlight prospective research avenues.
