State Of The Art In Open-Set Iris Presentation Attack Detection

Paper and Code

Aug 22, 2022

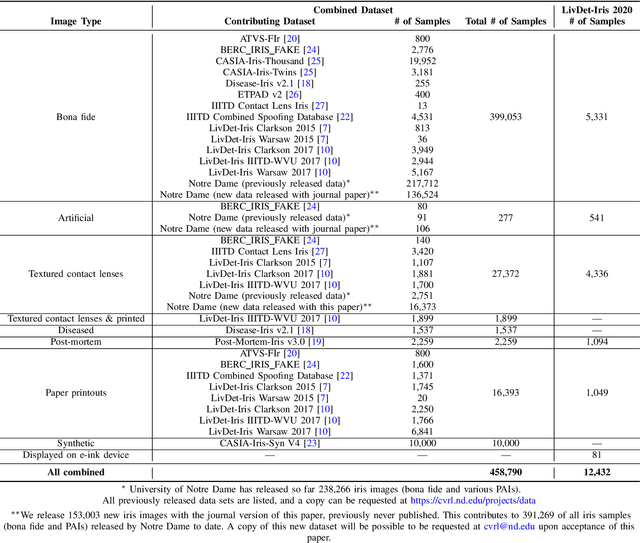

Research in presentation attack detection (PAD) for iris recognition has largely moved beyond evaluation in "closed-set" scenarios to emphasize the ability to generalize to presentation attack types not present in the training data. This paper offers several contributions to understanding and extending the state of the art in open-set iris PAD. First, it describes the most authoritative evaluation to date of iris PAD. We have curated the largest publicly available image dataset for this problem, drawing from 26 benchmarks previously released by various groups and adding 150,000 images being released with the journal version of this paper, to create a set of 450,000 images representing authentic irises and seven types of presentation attack instrument (PAI). We formulate a leave-one-PAI-out evaluation protocol and show that even the best algorithms in closed-set evaluations exhibit catastrophic failures on multiple attack types in the open-set scenario. This includes algorithms that performed well in the most recent LivDet-Iris 2020 competition, a result that may stem from the fact that the LivDet-Iris protocol emphasizes sequestered images rather than unseen attack types. Second, we evaluate the accuracy of five open-source iris presentation attack detection algorithms available today, one of which is newly proposed in this paper, and build an ensemble method that beats the winner of LivDet-Iris 2020 by a substantial margin. This paper demonstrates that closed-set iris PAD, in which all PAIs are known during training, is a solved problem, with multiple algorithms showing very high accuracy, while open-set iris PAD, when evaluated correctly, is far from solved. The newly created dataset, new open-source algorithms, and evaluation protocol, made publicly available with the journal version of this paper, provide the experimental artifacts that researchers can use to measure progress on this important problem.
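The leave-one-PAI-out idea can be illustrated with a small sketch. This is not the authors' released protocol code: the function name `leave_one_pai_out`, the label strings, and the way bona fide samples are divided between training and testing (every fifth sample held out) are all assumptions made for illustration, since the abstract does not specify those details. The sketch only shows the core property: in each fold, one attack type is entirely absent from training and appears only at test time.

```python
from typing import Iterator, List, Tuple

# (image_id, label); label is "bonafide" or the name of a PAI type.
Sample = Tuple[str, str]


def leave_one_pai_out(
    samples: List[Sample],
    bonafide_label: str = "bonafide",
    bonafide_test_every: int = 5,
) -> Iterator[Tuple[str, List[Sample], List[Sample]]]:
    """Yield (held_out_pai, train, test) for each attack type.

    Assumption (not from the paper): every `bonafide_test_every`-th bona fide
    sample is reserved for testing, and the same bona fide split is reused
    in every fold.
    """
    bonafide = [s for s in samples if s[1] == bonafide_label]
    attacks = [s for s in samples if s[1] != bonafide_label]
    pai_types = sorted({label for _, label in attacks})

    bona_test = bonafide[::bonafide_test_every]
    bona_train = [
        s for i, s in enumerate(bonafide) if i % bonafide_test_every != 0
    ]

    for held_out in pai_types:
        # Training sees every attack type except the held-out one.
        train = bona_train + [s for s in attacks if s[1] != held_out]
        # Testing sees only the held-out attack type plus bona fide samples.
        test = bona_test + [s for s in attacks if s[1] == held_out]
        yield held_out, train, test


# Toy data; the PAI type names here are hypothetical placeholders.
toy_samples: List[Sample] = (
    [(f"b{i}", "bonafide") for i in range(10)]
    + [(f"p{i}", "printout") for i in range(3)]
    + [(f"c{i}", "contact_lens") for i in range(2)]
    + [(f"d{i}", "display") for i in range(2)]
)

if __name__ == "__main__":
    for pai, train, test in leave_one_pai_out(toy_samples):
        print(pai, len(train), len(test))
```

A closed-set split, by contrast, would let every attack type appear on both sides of the train/test boundary, which is why the two settings can rank the same algorithm very differently.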
