Split Modeling for High-Dimensional Logistic Regression

Mar 12, 2021

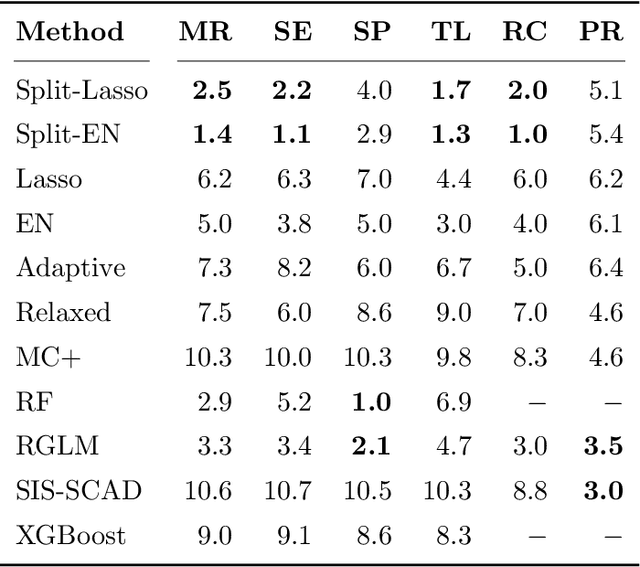

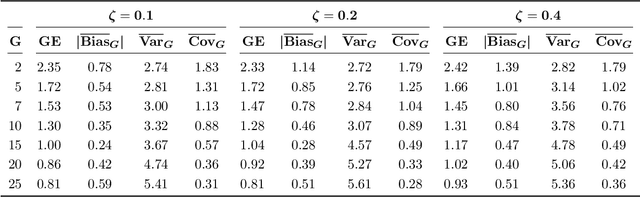

We propose a novel method for learning an ensemble of logistic classification models for high-dimensional binary classification. The models in the ensemble are built simultaneously by optimizing a multi-convex objective function. To enforce diversity, the objective function penalizes overlap between the models in the ensemble. We study the bias and variance of the individual models as well as their correlation, and discuss how our method learns the ensemble by exploiting the accuracy-diversity trade-off for ensemble models. In contrast to other ensembling approaches, the resulting ensemble model is fully interpretable as a logistic regression model while also yielding excellent prediction accuracy, as demonstrated in an extensive simulation study and in applications to gene expression data. We also briefly discuss an open-source compiled software library implementing the proposed method.
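To make the idea of penalizing overlap concrete, the sketch below evaluates one plausible form of such an objective. It is an illustration under stated assumptions, not the paper's exact formulation or the API of its software library. The function names, the L1 sparsity term, the pairwise product form of the overlap penalty, and the coefficient averaging at the end are all hypothetical choices made for this example.

```python
import math

def logistic_loss(X, y, intercept, beta):
    """Average negative log-likelihood of one logistic model, labels in {0, 1}."""
    total = 0.0
    for row, label in zip(X, y):
        eta = intercept + sum(x * b for x, b in zip(row, beta))
        # log(1 + exp(eta)), computed in a numerically stable way
        softplus = max(eta, 0.0) + math.log1p(math.exp(-abs(eta)))
        total += softplus - label * eta
    return total / len(y)

def overlap_penalty(betas):
    """Assumed diversity term: sum over model pairs g < h and features j
    of |beta_j^g| * |beta_j^h|. It is zero when no feature is shared."""
    penalty = 0.0
    for g in range(len(betas)):
        for h in range(g + 1, len(betas)):
            penalty += sum(abs(a) * abs(b) for a, b in zip(betas[g], betas[h]))
    return penalty

def ensemble_objective(X, y, intercepts, betas, lam_sparsity, lam_diversity):
    """Sum of per-model losses plus an L1 term (assumed) and the overlap term."""
    fit = sum(logistic_loss(X, y, b0, b) for b0, b in zip(intercepts, betas))
    sparsity = sum(abs(v) for b in betas for v in b)
    return fit + lam_sparsity * sparsity + lam_diversity * overlap_penalty(betas)

def averaged_model(intercepts, betas):
    """Assumed aggregation: average the coefficients across models, which
    yields a single logistic regression model."""
    n_models = len(betas)
    intercept = sum(intercepts) / n_models
    beta = [sum(b[j] for b in betas) / n_models for j in range(len(betas[0]))]
    return intercept, beta

# Two models over three features; only feature 1 is shared between them.
betas = [[1.0, -2.0, 0.0], [0.0, 0.5, 3.0]]
print(overlap_penalty(betas))  # 1.0, from |-2.0| * |0.5|
```

With this penalty, the objective is convex in one model's coefficients when the other models are held fixed, which is one way an objective can be multi-convex. The overlap term is zero exactly when no feature has a nonzero coefficient in more than one model, so a larger `lam_diversity` pushes the models toward disjoint feature sets.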
