Spatio-Temporal Representation with Deep Neural Recurrent Network in MIMO CSI Feedback

Paper and Code

Aug 22, 2019

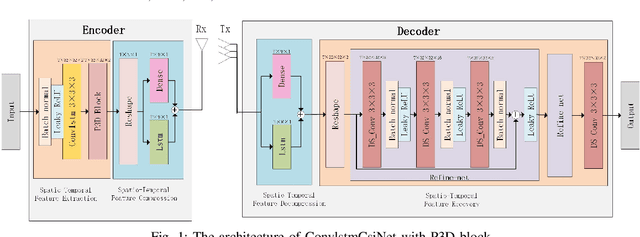

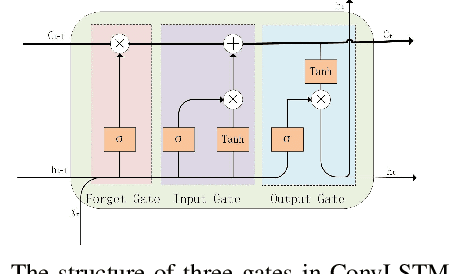

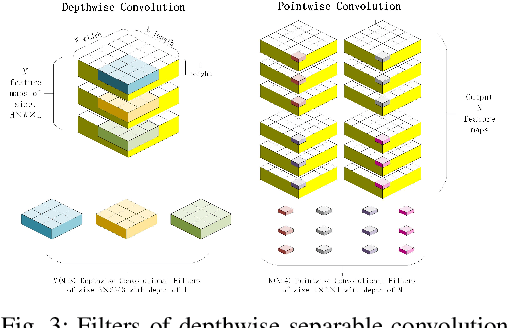

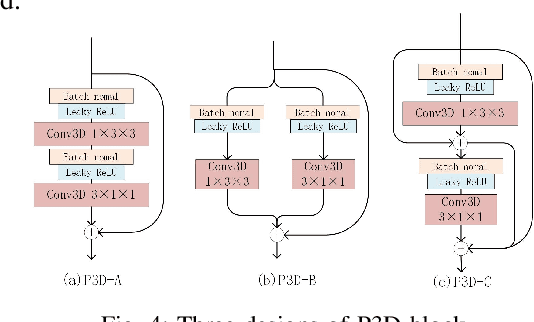

In multiple-input multiple-output (MIMO) systems, it is crucial to utilize the available channel state information (CSI) at the transmitter for precoding to improve the performance of frequency division duplex (FDD) networks. One of the main challenges is compressing the large amount of CSI that must be fed back in massive MIMO systems. In this paper, we propose a deep learning (DL)-based approach that uses a deep recurrent neural network (RNN) to learn temporal correlation and adopts depthwise separable convolution to shrink the model. The feature extraction module is also carefully designed by studying decoupled spatio-temporal feature representations in different structures. Experimental results demonstrate that the proposed approach outperforms existing DL-based methods in terms of recovery quality and accuracy, and that it also achieves remarkable robustness at low compression ratio (CR).
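To illustrate why depthwise separable convolution shrinks a model, the following minimal sketch compares weight counts for a standard 2D convolution and its depthwise separable counterpart. The function names and the layer sizes (32 input channels, 64 output channels, 3x3 kernel) are illustrative assumptions and are not taken from the paper; bias terms are ignored.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a depthwise k x k convolution followed by a 1 x 1
    pointwise convolution (bias ignored)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes, chosen only for illustration.
c_in, c_out, k = 32, 64, 3
standard = standard_conv_params(c_in, c_out, k)
separable = depthwise_separable_params(c_in, c_out, k)
print(f"standard:  {standard} weights")
print(f"separable: {separable} weights")
print(f"ratio:     {separable / standard:.3f}")
```

In general the ratio of the two counts is 1/c_out + 1/k^2, so the saving grows with the number of output channels and the kernel size.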
