Spatial machine-learning model diagnostics: a model-agnostic distance-based approach

Nov 13, 2021

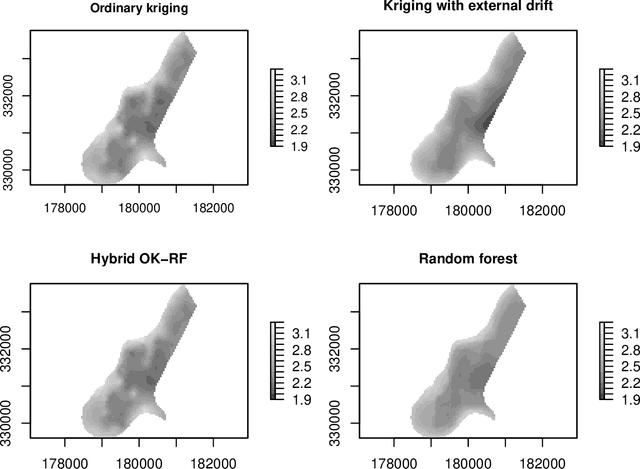

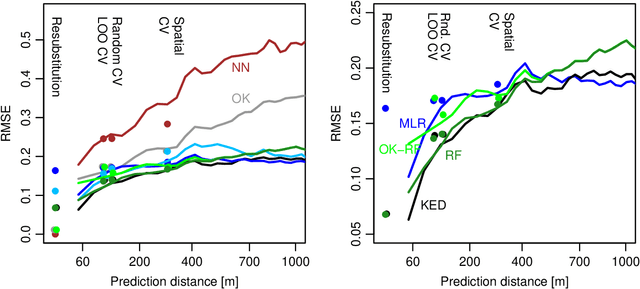

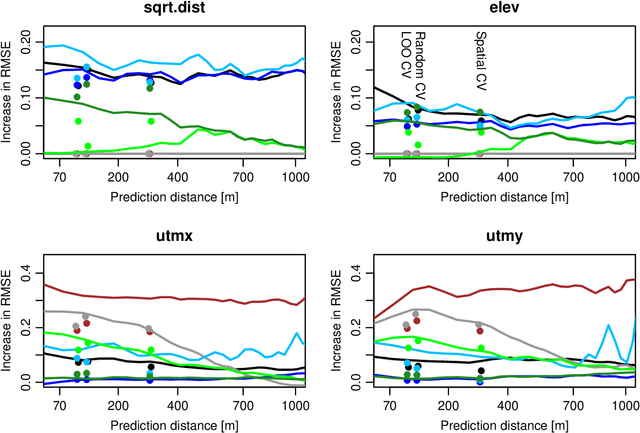

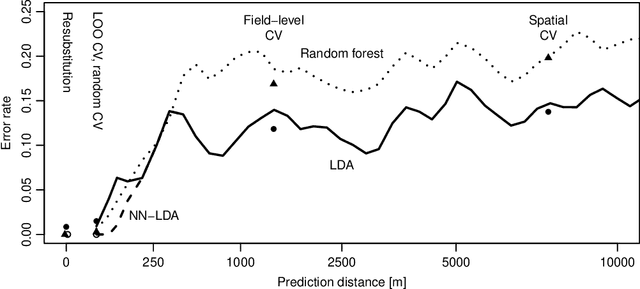

While significant progress has been made towards explaining black-box machine-learning (ML) models, there is still a distinct lack of diagnostic tools that elucidate the spatial behaviour of ML models in terms of predictive skill and variable importance. This contribution proposes spatial prediction error profiles (SPEPs) and spatial variable importance profiles (SVIPs) as novel model-agnostic assessment and interpretation tools for spatial prediction models with a focus on prediction distance. Their suitability is demonstrated in two case studies: a regionalization task in an environmental-science context, and a land cover classification task based on remotely sensed data. In these case studies, the SPEPs and SVIPs of geostatistical methods, linear models, random forest, and hybrid algorithms show striking differences but also relevant similarities. Limitations of related cross-validation techniques are outlined, and the case is made that modelers should focus their model assessment and interpretation on the intended spatial prediction horizon. The range of autocorrelation, in contrast, is not a suitable criterion for defining spatial cross-validation test sets. The novel diagnostic tools enrich the toolkit of spatial data science, and may improve ML model interpretation, selection, and design.
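To make the idea of an error profile over prediction distance more concrete, the following is a minimal sketch and not the paper's implementation. It assumes a buffered leave-one-out scheme: for each exclusion radius, every observation is predicted from training points lying farther away than that radius, and the resulting RMSE is recorded per radius. The names `nn_predict` and `error_profile`, the nearest-neighbour stand-in model, and the synthetic data are all hypothetical choices made for illustration.

```python
import numpy as np

def nn_predict(train_xy, train_y, query_xy):
    """Hypothetical stand-in model: return the value of the nearest training point."""
    d = np.linalg.norm(train_xy - query_xy, axis=1)
    return train_y[np.argmin(d)]

def error_profile(xy, y, radii):
    """RMSE of buffered leave-one-out predictions, one value per exclusion radius."""
    n = len(y)
    # Pairwise Euclidean distances between all observation locations.
    dist = np.linalg.norm(xy[:, None, :] - xy[None, :, :], axis=2)
    rmse = []
    for r in radii:
        sq_errors = []
        for i in range(n):
            # Keep only points strictly farther than r; this also drops point i itself.
            keep = dist[i] > r
            if not keep.any():
                continue  # no training data left at this distance
            pred = nn_predict(xy[keep], y[keep], xy[i])
            sq_errors.append((pred - y[i]) ** 2)
        rmse.append(np.sqrt(np.mean(sq_errors)) if sq_errors else np.nan)
    return np.array(rmse)

# Synthetic, spatially smooth response with a little noise (assumed for illustration).
rng = np.random.default_rng(0)
xy = rng.uniform(0, 100, size=(200, 2))
y = np.sin(xy[:, 0] / 15.0) + np.cos(xy[:, 1] / 15.0) + rng.normal(0, 0.1, 200)

radii = np.array([0.0, 5.0, 10.0, 20.0])
profile = error_profile(xy, y, radii)
for r, e in zip(radii, profile):
    print(f"buffer {r:5.1f}: RMSE {e:.3f}")
```

Because the scheme only needs a fit-and-predict step, any model could be substituted for the nearest-neighbour predictor in this sketch, which is the sense in which such a diagnostic is model-agnostic. A variable importance profile could plausibly be built in a similar way by permuting one predictor at a time and tracking the change in error per radius, although that is not shown here.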
