Sparsity-aware neural user behavior modeling in online interaction platforms

Feb 28, 2022

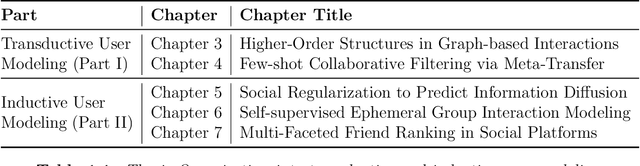

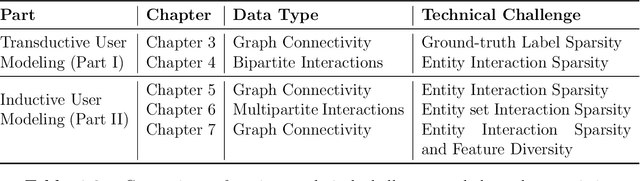

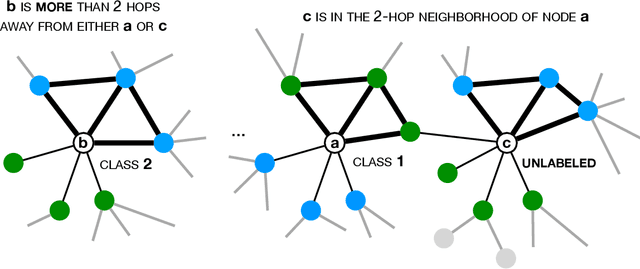

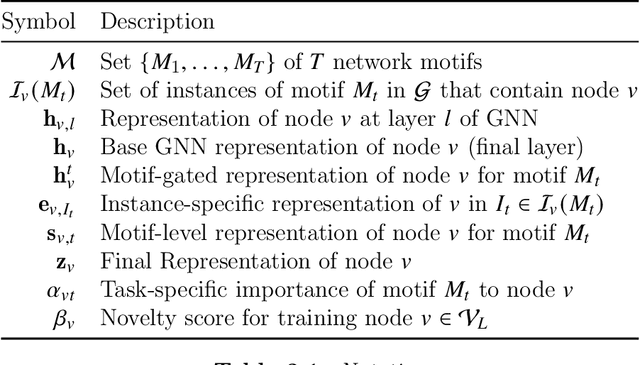

Modern online platforms offer users an opportunity to participate in a variety of content-creation, social networking, and shopping activities. With the rapid proliferation of such online services, learning data-driven user behavior models is indispensable for enabling personalized user experiences. Recently, representation learning has emerged as an effective strategy for user modeling, powered by neural networks trained over large volumes of interaction data. Despite the enormous potential of these models, they face a distinctive challenge: data is sparse for the vast majority of entities, both in ground-truth labels and in entity-level interactions (e.g., cold-start users, long-tail items, and ephemeral groups). In this dissertation, we develop generalizable neural representation learning frameworks for user behavior modeling that are designed to address different sparsity challenges across applications. Our problem settings span transductive and inductive learning scenarios: transductive learning models entities seen during training, whereas inductive learning targets entities that are observed only during inference. We leverage different facets of information reflecting user behavior (e.g., interconnectivity in social networks, and temporal and attributed interaction information) to enable personalized inference at scale. Our proposed models are complementary to concurrent advances in neural architectural choices and adapt to the rapid addition of new applications in online platforms.
