Space-Invariant Projection in Streaming Network Embedding

Paper and Code

Mar 11, 2023

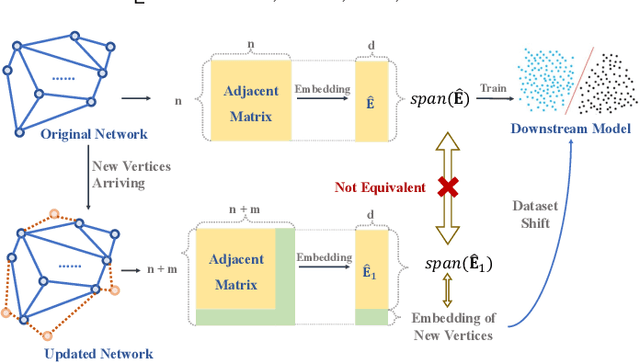

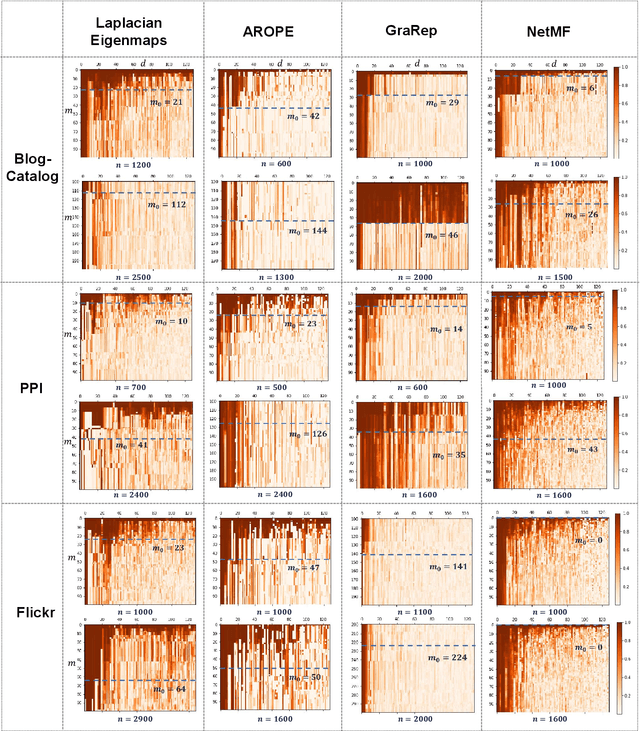

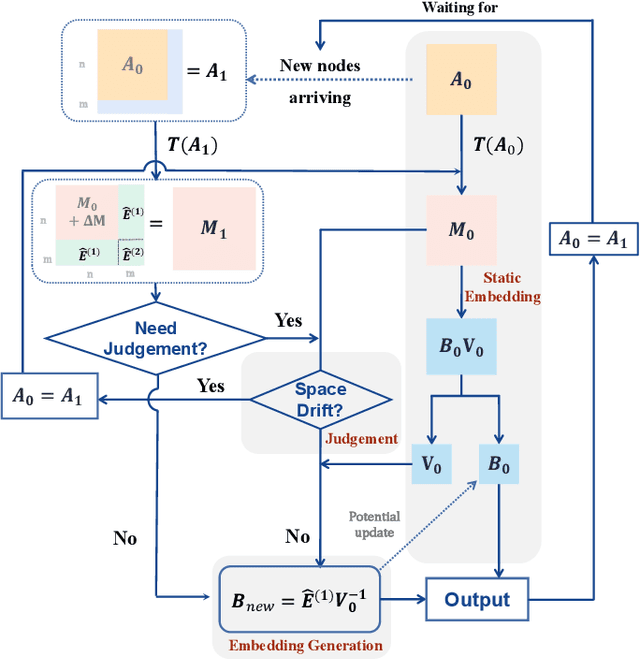

Newly arriving nodes in dynamic networks gradually cause the node embedding space to drift, making retraining of the node embeddings and downstream models indispensable. However, an exact threshold on the number of new nodes, below which the node embedding space is predictably maintained, is rarely considered in either theory or experiment. From the viewpoint of matrix perturbation theory, a threshold on the maximum number of new nodes that keeps the node embedding space approximately equivalent is analytically derived and empirically validated. It is therefore theoretically guaranteed that, as long as the number of newly arriving nodes is below this threshold, embeddings of these new nodes can be quickly derived from the embeddings of the original nodes. A generation framework, Space-Invariant Projection (SIP), is accordingly proposed to enable arbitrary static matrix-factorization (MF)-based embedding schemes to embed new nodes in dynamic networks quickly. The time complexity of SIP is linear in the network size. By combining SIP with four state-of-the-art MF-based schemes, we show that SIP exhibits not only wide adaptability but also strong empirical performance, in terms of both efficiency and efficacy, on the node classification task on three real datasets.
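
To make the idea of deriving new-node embeddings from a fixed embedding space concrete, the following is a minimal sketch of a generic SVD "fold-in" projection. It is not the SIP algorithm from the paper, whose details are not given in the abstract. The function names `svd_embed` and `fold_in`, the random test graph, and the choice of a truncated SVD of the adjacency matrix as the MF-based scheme are all assumptions made for illustration.

```python
import numpy as np

def svd_embed(A, dim):
    """Embed original nodes with a truncated SVD (a stand-in MF-based scheme).

    Returns the embeddings X = U * sqrt(s), the right singular vectors V,
    and the singular values s, all truncated to `dim` components.
    """
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    U, s, Vt = U[:, :dim], s[:dim], Vt[:dim, :]
    X = U * np.sqrt(s)  # scale each column by sqrt of its singular value
    return X, Vt.T, s

def fold_in(a_new, V, s):
    """Project rows of links-to-original-nodes into the fixed embedding space.

    Since A @ V = U * s, applying this map to the original rows of A
    reproduces X exactly; new rows are placed in the same space
    without refactorizing the matrix.
    """
    return (a_new @ V) / np.sqrt(s)

# Hypothetical toy graph: 30 original nodes, symmetric 0/1 adjacency.
rng = np.random.default_rng(0)
n, dim = 30, 4
upper = np.triu((rng.random((n, n)) < 0.2).astype(float), k=1)
A = upper + upper.T

X, V, s = svd_embed(A, dim)

# Sanity check: folding in the original rows recovers their embeddings.
X_check = fold_in(A, V, s)

# Three hypothetical new nodes, described only by links to original nodes.
a_new = (rng.random((3, n)) < 0.2).astype(float)
X_new = fold_in(a_new, V, s)
```

In this sketch the original embeddings are left untouched, and each new node costs one product between its link vector and `V`. That only illustrates why a projection can be cheap; the threshold on how many new nodes can be added before the space must be retrained is the paper's contribution and is not modeled here.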
