SoK: Privacy Preserving Machine Learning using Functional Encryption: Opportunities and Challenges


Apr 11, 2022

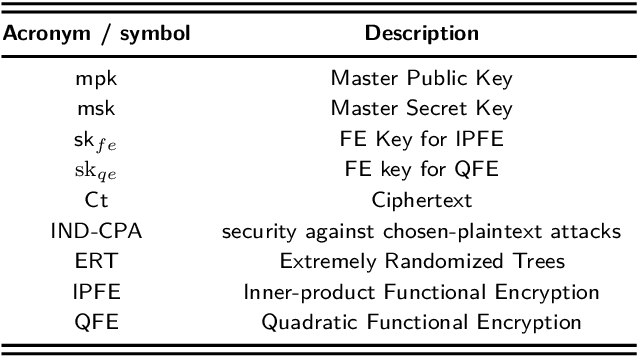

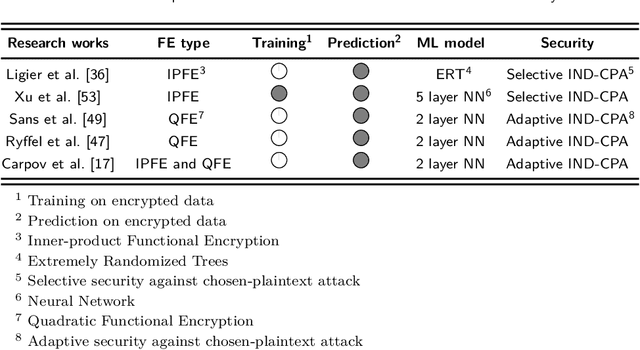

With the advent of functional encryption, new possibilities for computation on encrypted data have arisen. Functional encryption (FE) enables data owners to grant third parties the ability to perform specified computations without disclosing their inputs. Unlike fully homomorphic encryption (FHE), it yields the computation result in plaintext. The ubiquity of machine learning has led to the collection of massive amounts of private data in cloud computing environments, which raises privacy issues and creates a need for more private and secure computing solutions. Numerous efforts in privacy-preserving machine learning (PPML) address these security and privacy concerns, with approaches based on FHE, secure multiparty computation (SMC), and, more recently, FE. However, FE-based PPML is still in its infancy and has received much less attention than FHE-based PPML. In this paper, we systematize FE-based PPML works, summarizing the state of the art in the literature. We focus on machine learning models based on inner-product FE and quadratic FE for PPML applications. We analyze the performance and usability of the available FE libraries and their applications to PPML. We also discuss potential directions for FE-based PPML approaches. To the best of our knowledge, this is the first work to systematize FE-based PPML approaches.
