Soft Hierarchical Graph Recurrent Networks for Many-Agent Partially Observable Environments

Paper and Code

Sep 05, 2021

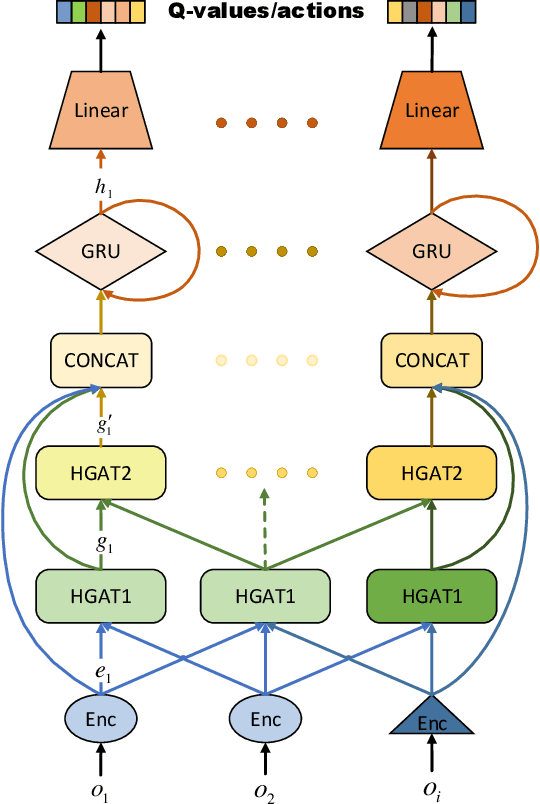

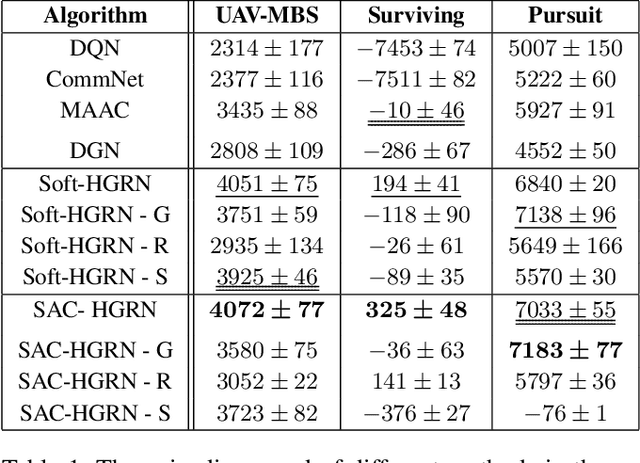

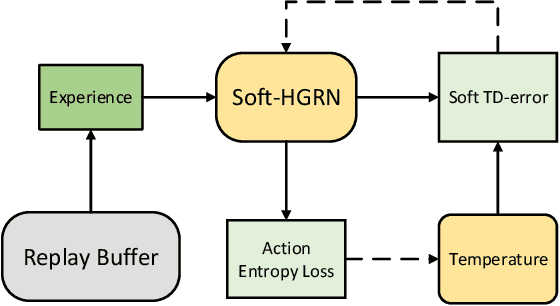

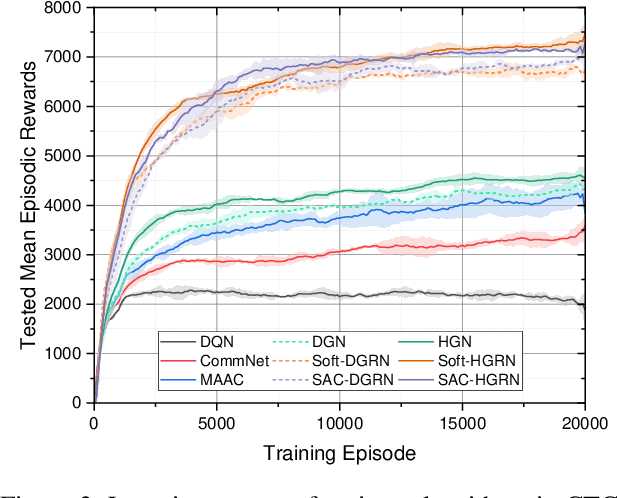

The recent progress in multi-agent deep reinforcement learning(MADRL) makes it more practical in real-world tasks, but its relatively poor scalability and the partially observable constraints raise challenges to its performance and deployment. Based on our intuitive observation that the human society could be regarded as a large-scale partially observable environment, where each individual has the function of communicating with neighbors and remembering its own experience, we propose a novel network structure called hierarchical graph recurrent network(HGRN) for multi-agent cooperation under partial observability. Specifically, we construct the multi-agent system as a graph, use the hierarchical graph attention network(HGAT) to achieve communication between neighboring agents, and exploit GRU to enable agents to record historical information. To encourage exploration and improve robustness, we design a maximum-entropy learning method to learn stochastic policies of a configurable target action entropy. Based on the above technologies, we proposed a value-based MADRL algorithm called Soft-HGRN and its actor-critic variant named SAC-HRGN. Experimental results based on three homogeneous tasks and one heterogeneous environment not only show that our approach achieves clear improvements compared with four baselines, but also demonstrates the interpretability, scalability, and transferability of the proposed model. Ablation studies prove the function and necessity of each component.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge