SnapE -- Training Snapshot Ensembles of Link Prediction Models

Aug 05, 2024

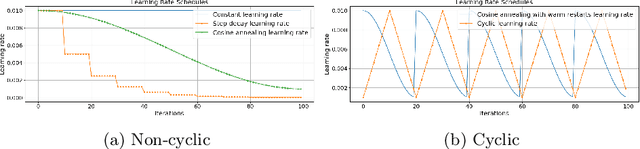

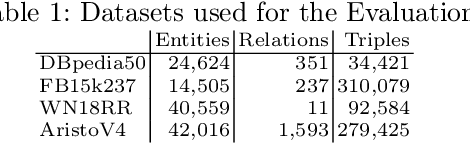

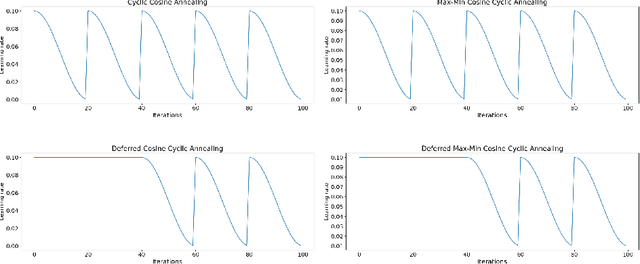

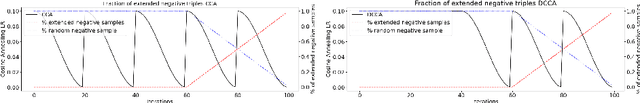

Snapshot ensembles have been widely used in various prediction tasks. They allow for training an ensemble of prediction models at the cost of training a single one, and they are known to yield more robust predictions by creating a set of diverse base models. In this paper, we introduce an approach to transfer the idea of snapshot ensembles to link prediction models in knowledge graphs. Moreover, since link prediction in knowledge graphs is a setup without explicit negative examples, we propose a novel training loop that iteratively creates negative examples using previous snapshot models. An evaluation with four base models across four datasets shows that this approach consistently outperforms the single-model approach while keeping the training time constant.
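
The following is a minimal sketch of the ingredients the abstract describes, not the authors' implementation. It assumes the cyclic cosine learning rate schedule commonly used for snapshot ensembles, and it assumes that negatives are created by corrupting the tail of a triple and keeping the candidates that the previous snapshot scores highest. The abstract does not specify either detail, and all function names (`cyclic_cosine_lr`, `snapshot_steps`, `hard_negatives`, `ensemble_score`) are hypothetical.

```python
import math


def cyclic_cosine_lr(step, total_steps, num_cycles, max_lr):
    """Learning rate at a 0-indexed step under cosine annealing with restarts.

    Assumption: the training budget is split into equally long cycles and the
    rate is reset to max_lr at the start of each cycle.
    """
    cycle_len = math.ceil(total_steps / num_cycles)
    pos = step % cycle_len
    return 0.5 * max_lr * (math.cos(math.pi * pos / cycle_len) + 1.0)


def snapshot_steps(total_steps, num_cycles):
    """Steps after which a snapshot is stored (the last step of each cycle)."""
    cycle_len = math.ceil(total_steps / num_cycles)
    return [min((c + 1) * cycle_len, total_steps) - 1 for c in range(num_cycles)]


def hard_negatives(triple, candidates, score_fn, known, k):
    """Corrupt the tail of `triple` and keep the k highest-scored corruptions.

    Assumption: `score_fn` is the scoring function of the previous snapshot,
    and triples already in `known` are never used as negatives.
    """
    head, relation, _ = triple
    corrupted = [(head, relation, t) for t in candidates
                 if (head, relation, t) not in known]
    corrupted.sort(key=score_fn, reverse=True)
    return corrupted[:k]


def ensemble_score(triple, snapshots):
    """Average the scores that all stored snapshots assign to a triple."""
    return sum(score(triple) for score in snapshots) / len(snapshots)
```

With a budget of 100 steps and 4 cycles, the rate restarts at steps 0, 25, 50, and 75, and snapshots are stored after steps 24, 49, 74, and 99, so the total number of training steps is the same as for a single model. Plain score averaging is only one way to combine snapshots; weighted combinations are an equally plausible choice.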
