Simultaneous Face Hallucination and Translation for Thermal to Visible Face Verification using Axial-GAN


Apr 13, 2021

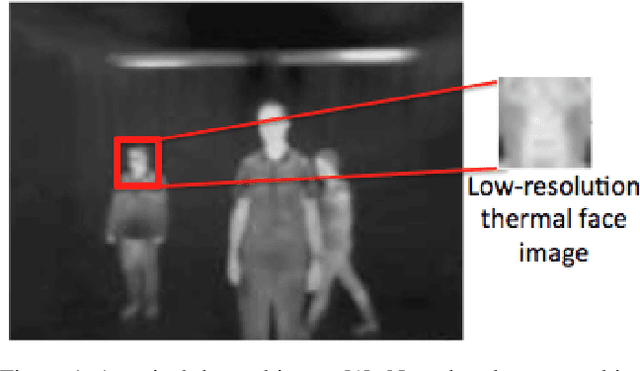

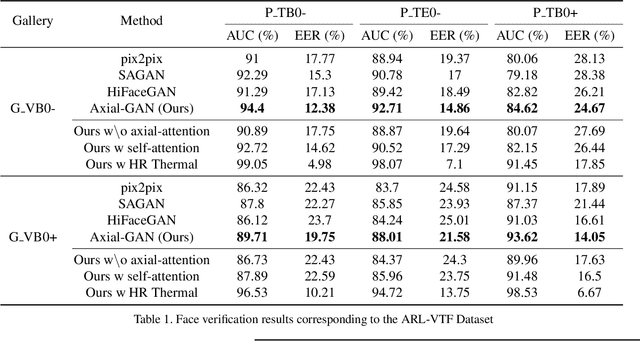

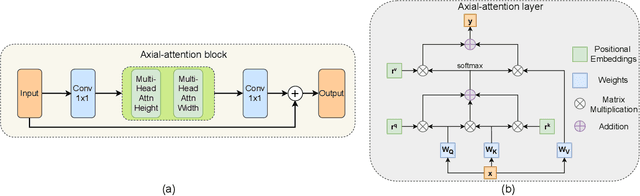

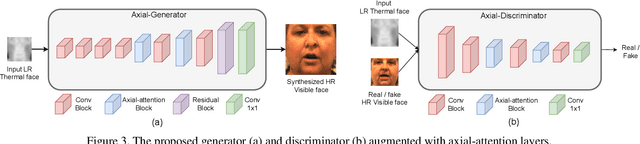

Existing thermal-to-visible face verification approaches assume that the thermal and visible face images have similar resolution. This is unlikely in real-world long-range surveillance systems, where subjects are far from the cameras. To address this issue, we introduce the task of thermal-to-visible face verification from low-resolution thermal images. Furthermore, we propose the Axial-Generative Adversarial Network (Axial-GAN) to synthesize high-resolution visible images for matching. In the proposed approach, we augment the GAN framework with axial-attention layers, which leverage recent advances in transformers for modelling long-range dependencies. We demonstrate the effectiveness of the proposed method by evaluating it on two different thermal-visible face datasets. Compared to related state-of-the-art works, our method achieves significant improvements in both image quality and face verification performance, and is also much more efficient.
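To make the axial-attention idea in the abstract concrete, the following is a minimal NumPy sketch of one axial-attention block applied to a single feature map. It is not the authors' implementation: the function names (`attend_1d`, `axial_attention`), the single-head design, the residual connections, and the absence of positional encodings are all assumptions made for illustration. The point it shows is that attention is applied along the height axis and then along the width axis, instead of over all H×W positions at once.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attend_1d(x, wq, wk, wv):
    """Single-head self-attention over the second-to-last axis.

    x:  (..., L, C) batch of length-L sequences
    wq: (C, D), wk: (C, D), wv: (C, C)  (hypothetical projection matrices)
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])  # (..., L, L)
    return softmax(scores, axis=-1) @ v                          # (..., L, C)


def axial_attention(x, params_h, params_w):
    """Apply attention along height, then along width.

    x: (H, W, C) feature map. params_h and params_w are (wq, wk, wv) tuples.
    """
    # Height axis: each of the W columns is a sequence of length H.
    cols = np.swapaxes(x, 0, 1)                      # (W, H, C)
    x = x + np.swapaxes(attend_1d(cols, *params_h), 0, 1)
    # Width axis: each of the H rows is a sequence of length W.
    x = x + attend_1d(x, *params_w)
    return x
```

Under these assumptions, full self-attention over an H×W map compares every position with every other one, which costs on the order of (HW)² score computations, while the two axial passes cost on the order of HW(H + W). After both passes, every position can still receive information from any other position in the map.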
