Simpler is better: spectral regularization and up-sampling techniques for variational autoencoders


Jan 19, 2022

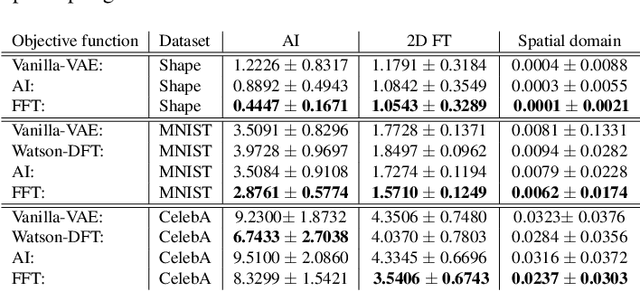

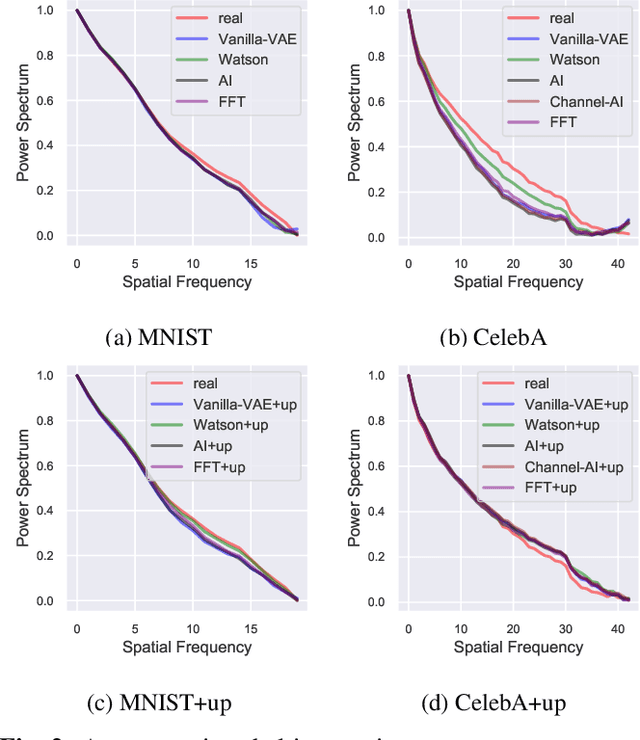

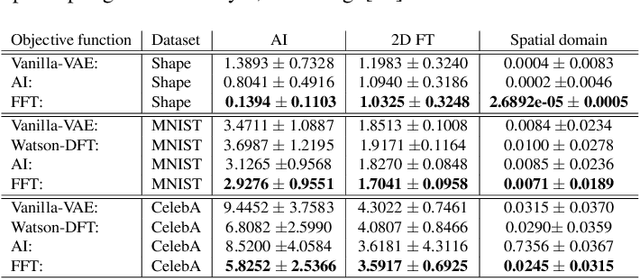

A full characterization of the spectral behavior of generative models based on neural networks remains an open problem. Recent research has focused heavily on generative adversarial networks (GANs) and on the high-frequency discrepancies between real and generated images. Current remedies for these discrepancies either replace transposed convolutions with bilinear up-sampling or add a spectral regularization term to the generator. Variational autoencoders (VAEs) are well known to suffer from the same issues. In this work, we propose a simple spectral regularization loss for the VAE, based on the 2D Fourier transform, and show that it achieves results equal to, or better than, the current state of the art in frequency-aware losses for generative models. In addition, we experiment with altering the up-sampling procedure in the generator network and investigate how this influences the spectral performance of the model. We include experiments on synthetic and real data sets to demonstrate our results.
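To make the idea of a 2D Fourier transform-based spectral regularization term concrete, here is a minimal NumPy sketch. The abstract does not give the exact form of the loss, so everything here is an assumption for illustration: the function names `spectral_loss` and `regularized_loss`, the choice to compare log-amplitude spectra with a mean squared error, and the weight `lam` are hypothetical and not taken from the paper.

```python
import numpy as np


def spectral_loss(x, x_hat, eps=1e-8):
    """Illustrative spectral penalty between images x and reconstructions x_hat.

    Both arrays have shape (..., height, width). The log-amplitude MSE used
    here is an assumed form, not necessarily the loss defined in the paper.
    """
    # 2D discrete Fourier transform over the last two (spatial) axes.
    fx = np.fft.fft2(x, axes=(-2, -1))
    fx_hat = np.fft.fft2(x_hat, axes=(-2, -1))
    # Compare log-amplitudes so the large low-frequency coefficients
    # do not drown out high-frequency discrepancies.
    log_amp = np.log(np.abs(fx) + eps)
    log_amp_hat = np.log(np.abs(fx_hat) + eps)
    return float(np.mean((log_amp - log_amp_hat) ** 2))


def regularized_loss(recon_loss, kl, x, x_hat, lam=0.1):
    """Standard VAE terms plus the spectral penalty, weighted by lam (assumed)."""
    return recon_loss + kl + lam * spectral_loss(x, x_hat)
```

Under these assumptions, the penalty is zero when the reconstruction equals the input and grows as the two amplitude spectra diverge. In a real training loop the same computation would be written with a differentiable FFT from the chosen deep learning framework so that gradients reach the decoder.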
