Separating Novel Features for Logical Anomaly Detection: A Straightforward yet Effective Approach


Jul 25, 2024

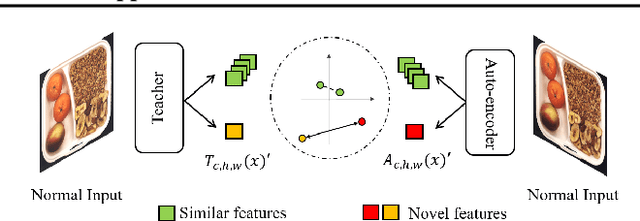

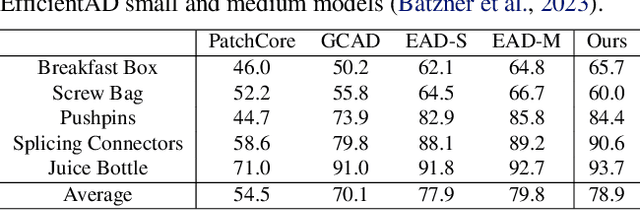

Vision-based inspection algorithms have contributed significantly to quality control in industrial settings, particularly in addressing structural defects such as dents and contamination, which are prevalent in mass production. Extensive research has produced related benchmarks such as MVTec AD (Bergmann et al., 2019). However, industrial settings can also exhibit logical defects, where acceptable items appear in unsuitable locations or paired products do not match as expected. Recent methods that tackle logical defects effectively employ knowledge distillation (KD) to generate difference maps; KD is used to learn the distribution of normal data in an unsupervised manner. Despite their effectiveness, these methods often overlook potential false negatives: excessive similarity between the teacher network and the student network can hinder the generation of a suitable difference map for logical anomaly detection. This technical report provides insights on handling potential false negatives by applying a simple constraint in KD-based logical anomaly detection methods. We select EfficientAD as a state-of-the-art baseline and apply a margin-based constraint to its unsupervised learning scheme. With this constraint, we improve the AUROC on MVTec LOCO AD by 1.3%.
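The abstract does not spell out the form of the margin-based constraint, so the following is only a minimal sketch of one plausible reading, not the authors' formulation. It assumes a hinge on the per-location squared teacher-student distance: once the student is within `margin` of the teacher at a location, that location stops contributing to the loss, which keeps the student from imitating the teacher too closely. The function names, the `margin` parameter, and the toy feature values are all hypothetical.

```python
from typing import List, Sequence

Features = Sequence[Sequence[float]]  # one feature vector per spatial location


def squared_distances(teacher: Features, student: Features) -> List[float]:
    """Per-location squared L2 distance between teacher and student features."""
    return [
        sum((t - s) ** 2 for t, s in zip(t_vec, s_vec))
        for t_vec, s_vec in zip(teacher, student)
    ]


def plain_kd_loss(teacher: Features, student: Features) -> float:
    """Standard distillation loss: mean squared distance over all locations."""
    d = squared_distances(teacher, student)
    return sum(d) / len(d)


def margin_kd_loss(teacher: Features, student: Features, margin: float) -> float:
    """Assumed margin variant: locations already within `margin` contribute zero."""
    d = squared_distances(teacher, student)
    return sum(max(0.0, x - margin) for x in d) / len(d)


# Toy features (hypothetical values): three locations, two channels each.
teacher = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
student = [[0.9, 0.0], [0.0, 0.5], [1.0, 1.0]]

print(plain_kd_loss(teacher, student))
print(margin_kd_loss(teacher, student, margin=0.05))
```

In this toy case only the second location, whose squared distance exceeds the margin, still contributes to the margin loss. With `margin=0.0` the two losses coincide, so the margin acts as a single knob on how much teacher-student similarity is tolerated during training.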
