Semi-Supervised Learning with Self-Supervised Networks

Paper and Code

Jun 25, 2019

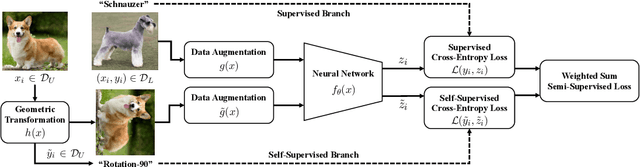

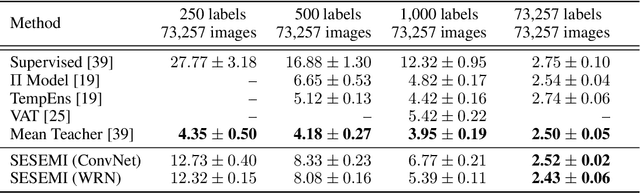

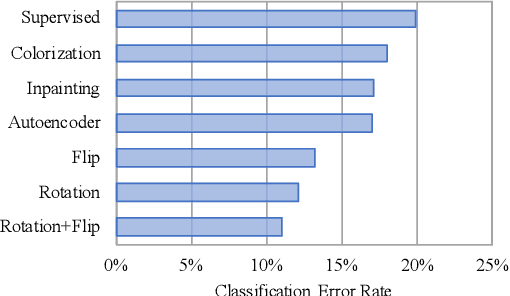

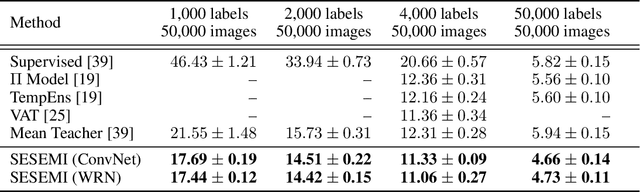

Recent advances in semi-supervised learning have shown tremendous potential in overcoming a major barrier to the success of modern machine learning algorithms: access to vast amounts of human-labeled training data. Algorithms based on self-ensemble learning and virtual adversarial training can harness the abundance of unlabeled data to produce impressive state-of-the-art results on a number of semi-supervised benchmarks, approaching the performance of strong supervised baselines using only a fraction of the available labeled data. However, these methods often require careful tuning of many hyper-parameters and are usually not easy to implement in practice. In this work, we present a conceptually simple yet effective semi-supervised algorithm based on self-supervised learning to combine semantic feature representations from unlabeled data. Our models are efficiently trained end-to-end for the joint, multi-task learning of labeled and unlabeled data in a single stage. Striving for simplicity and practicality, our approach requires no additional hyper-parameters to tune for optimal performance beyond the standard set for training convolutional neural networks. We conduct a comprehensive empirical evaluation of our models for semi-supervised image classification on SVHN, CIFAR-10 and CIFAR-100, and demonstrate results competitive with, and in some cases exceeding, prior state of the art. Reference code and data are available at https://github.com/vuptran/sesemi
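The joint, single-stage objective described in the abstract can be sketched as follows. This is a minimal NumPy illustration rather than the authors' reference code. It assumes the self-supervised task is rotation prediction (the abstract does not name the proxy task) and that the two losses are summed with equal weight, which is one way to read "no additional hyper-parameters". The function names `rotate_batch`, `softmax_cross_entropy`, and `joint_loss` are hypothetical.

```python
import numpy as np


def rotate_batch(images):
    """Build a self-supervised batch from unlabeled square images.

    images: array of shape (N, H, W, C) with H == W.
    Returns the images rotated by 0, 90, 180 and 270 degrees, stacked
    along the batch axis, plus proxy labels 0..3 naming the rotation.
    (Assumed proxy task; not stated in the abstract.)
    """
    rotated = [np.rot90(images, k=k, axes=(1, 2)) for k in range(4)]
    proxy_labels = np.repeat(np.arange(4), len(images))
    return np.concatenate(rotated, axis=0), proxy_labels


def softmax_cross_entropy(logits, labels):
    """Mean cross-entropy between softmax(logits) and integer labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


def joint_loss(class_logits, class_labels, proxy_logits, proxy_labels):
    """Unweighted sum of the supervised and self-supervised losses.

    class_logits come from the labeled batch, proxy_logits from the
    rotated unlabeled batch; both heads would share one backbone.
    """
    supervised = softmax_cross_entropy(class_logits, class_labels)
    self_supervised = softmax_cross_entropy(proxy_logits, proxy_labels)
    return supervised + self_supervised
```

In a real training loop, a shared convolutional backbone would feed two output heads, and this combined loss would be minimized end-to-end with a standard optimizer on each mini-batch of labeled and unlabeled images.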
