Semantic filtering through deep source separation on microscopy images


Sep 02, 2019

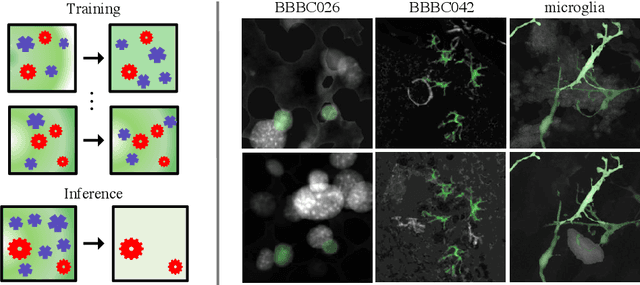

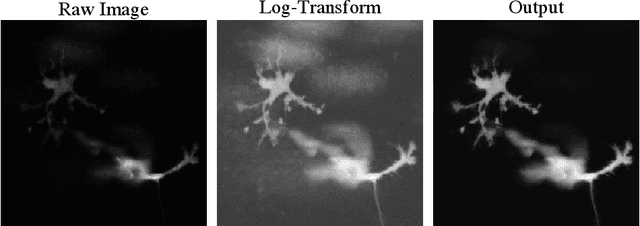

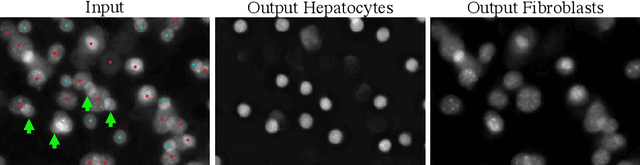

By their very nature, microscopy images of cells and tissues consist of a limited number of object types or components. In contrast to most natural scenes, their composition is known a priori. The aim of this paper is to decompose biological images into semantically meaningful objects and layers. Building on recent approaches to image denoising, we present a framework that achieves state-of-the-art segmentation results while requiring little or no manual annotation. Synthetic images generated by adding cell crops are sufficient to train the model. Extensive experiments on cellular images, a histology data set, and small-animal videos demonstrate that our approach generalizes to a broad range of experimental settings. Because the proposed methodology does not require densely labelled training images and can resolve partially overlapping objects, it holds promise for a number of different applications.
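The abstract does not spell out how the synthetic training images are built, so the following is only a minimal sketch of one plausible reading of "adding cell crops": crops are pasted at random positions into separate layers, and the layers are summed into a mixture. The function names `disk_crop` and `synthesize_mixture`, the canvas size, and the use of filled disks as stand-in cell crops are all illustrative assumptions, not details taken from the paper.

```python
import numpy as np


def disk_crop(radius):
    """Hypothetical stand-in for a real cell crop: a filled binary disk."""
    y, x = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    return (x**2 + y**2 <= radius**2).astype(np.float32)


def synthesize_mixture(crops, canvas_shape=(64, 64), n_objects=3, rng=None):
    """Paste randomly chosen crops into separate layers and sum them.

    Returns (mixture, layers). layers[i] contains only the i-th pasted
    object, so the layers can act as separation targets for the mixture
    without any manual annotation. Additive mixing is an assumption here.
    """
    rng = np.random.default_rng(rng)
    height, width = canvas_shape
    layers = np.zeros((n_objects, height, width), dtype=np.float32)
    for i in range(n_objects):
        crop = crops[rng.integers(len(crops))]
        ch, cw = crop.shape
        # Choose a top-left corner so the crop fits fully inside the canvas.
        top = rng.integers(0, height - ch + 1)
        left = rng.integers(0, width - cw + 1)
        layers[i, top:top + ch, left:left + cw] = crop
    # Overlapping objects add up in the mixture but stay separate in layers.
    mixture = layers.sum(axis=0)
    return mixture, layers


crops = [disk_crop(r) for r in (4, 6, 8)]
mixture, layers = synthesize_mixture(crops, n_objects=3, rng=0)
```

Each `(mixture, layers)` pair could then serve as an input/target example for a separation model. Because every object keeps its own layer, partially overlapping objects remain individually recoverable in the targets even where they overlap in the mixture.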
