Self-supervised asymmetric deep hashing with margin-scalable constraint for image retrieval


Dec 07, 2020

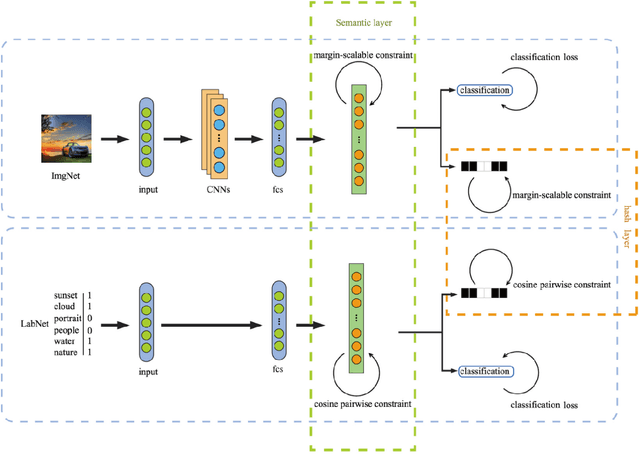

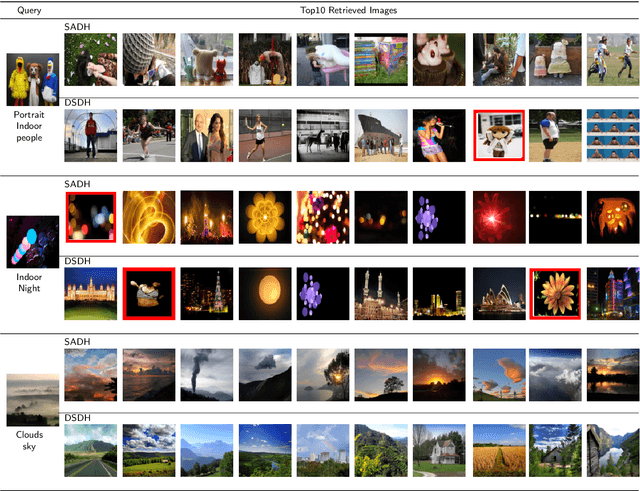

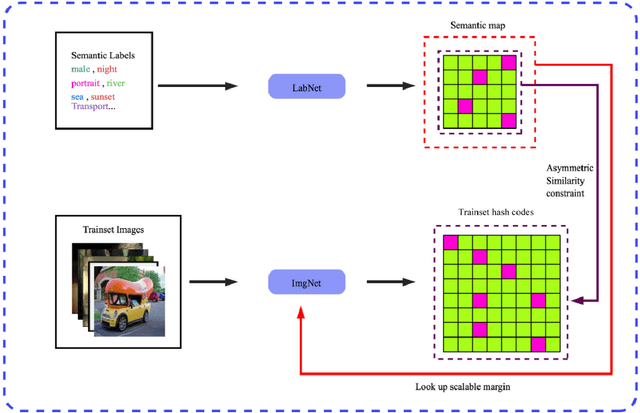

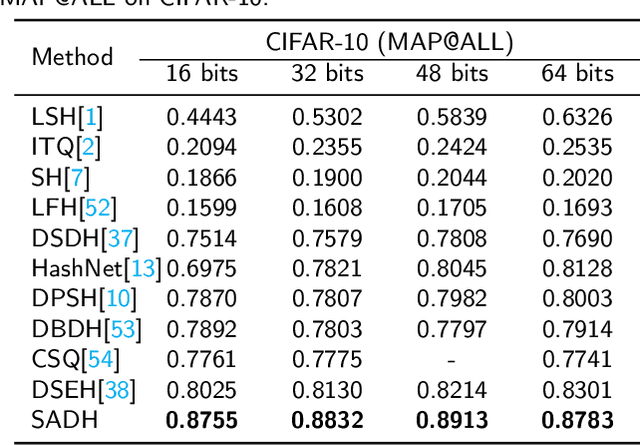

Because of its effectiveness and speed, image retrieval based on deep hashing has attracted wide attention, especially in large-scale visual search. However, many existing deep hashing methods use label information only as simple guidance for the feature learning network, without exploring the semantic space in more depth. In addition, the similarity correlations in Hamming space are not fully discovered and embedded into the hash codes. As a result, pairwise correlations and multi-label semantics are preserved inefficiently, and retrieval quality suffers. To address these problems, we propose a novel self-supervised asymmetric deep hashing approach with a margin-scalable constraint (SADH) for image retrieval. SADH uses a self-supervised network to preserve semantic information in a semantic feature map and a semantic code map for each semantic category of the given dataset. These maps efficiently and precisely guide a feature learning network to preserve multi-label semantic information through an asymmetric learning strategy. Moreover, in the feature learning part, the semantic maps are further exploited by a new margin-scalable constraint, which yields both a highly accurate construction of pairwise correlations in Hamming space and a more discriminative hash code representation. Extensive experiments on three benchmark datasets show that the proposed method outperforms several state-of-the-art approaches.
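For readers unfamiliar with hashing-based retrieval, the following is a minimal generic sketch of how binary hash codes are compared in Hamming space. It is not the SADH method: the sign-based binarization, the toy feature values, and the helper names `to_hash_codes`, `hamming_distance`, and `retrieve` are all assumptions made for illustration, and no learned network or margin-scalable constraint is involved.

```python
import numpy as np


def to_hash_codes(features):
    """Binarize real-valued features into {-1, +1} codes by sign (illustrative choice)."""
    return np.where(features >= 0, 1, -1).astype(np.int8)


def hamming_distance(query_code, db_codes):
    """Hamming distance between one code and every row of db_codes.

    For codes in {-1, +1} of length K, the distance is (K - <q, b>) / 2.
    """
    k = db_codes.shape[1]
    dots = db_codes.astype(np.int32) @ query_code.astype(np.int32)
    return (k - dots) // 2


def retrieve(query_code, db_codes, top_k=3):
    """Return indices and distances of the top_k nearest database codes."""
    dists = hamming_distance(query_code, db_codes)
    order = np.argsort(dists, kind="stable")[:top_k]
    return order, dists[order]


# Toy real-valued features standing in for network outputs (hypothetical values).
db_features = np.array([
    [0.9, -0.2, 0.4, -0.7],
    [-0.5, 0.3, 0.8, -0.1],
    [0.6, -0.9, 0.1, -0.3],
    [-0.4, 0.7, -0.6, 0.2],
])
query_features = np.array([0.8, -0.1, 0.5, -0.6])

db_codes = to_hash_codes(db_features)
query_code = to_hash_codes(query_features)
indices, distances = retrieve(query_code, db_codes)
print(indices, distances)
```

Because the distance reduces to an inner product over short binary codes, ranking a large database is cheap, which is the property that makes hashing attractive for large-scale visual search.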
