Self-Paced Multi-Agent Reinforcement Learning


May 20, 2022

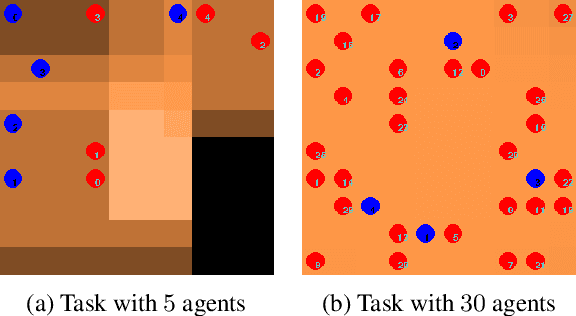

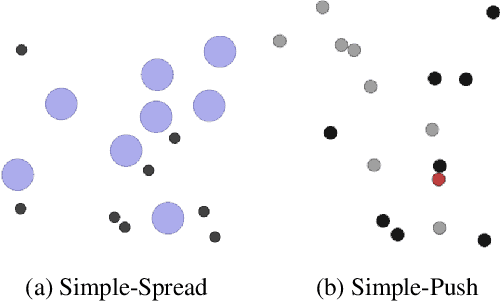

Curriculum reinforcement learning (CRL) aims to speed up learning of a task by gradually changing its difficulty from easy to hard through control of factors such as the initial state or the environment dynamics. While automating CRL is well studied in the single-agent setting, in multi-agent reinforcement learning (MARL) it remains an open question whether controlling the number of agents together with other factors in a principled manner is beneficial; prior approaches typically rely on hand-crafted heuristics. In addition, how tasks evolve as the number of agents changes remains understudied, even though this is critical for scaling to more challenging tasks. We introduce self-paced MARL (SPMARL), which enables optimizing the number of agents together with other environment factors in a principled way, and show that common assumptions, such as that fewer agents always make the task easier, are not generally valid. The curriculum induced by SPMARL reveals how tasks evolve with respect to the number of agents, and experiments show that SPMARL improves performance when the number of agents sufficiently influences task difficulty.
