Selectivity considered harmful: evaluating the causal impact of class selectivity in DNNs


Mar 03, 2020

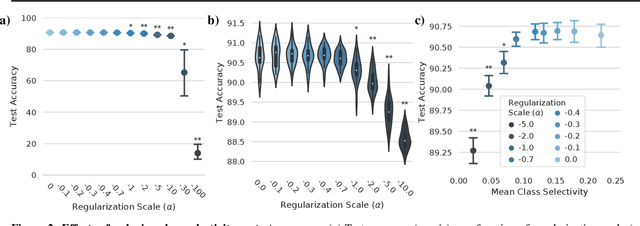

Class selectivity, typically defined as how different a neuron's responses are across different classes of stimuli or data samples, is a common metric used to interpret the function of individual neurons in biological and artificial neural networks. However, it remains an open question whether it is necessary and/or sufficient for deep neural networks (DNNs) to learn class selectivity in individual units. To investigate the causal impact of class selectivity on network function, we directly regularize for or against class selectivity. Using this regularizer, we were able to reduce mean class selectivity across units in convolutional neural networks by a factor of 2.5 with no impact on test accuracy, and reduce it nearly to zero with only a small ($\sim$2%) change in test accuracy. In contrast, increasing class selectivity beyond the levels naturally learned during training had rapid and disastrous effects on test accuracy. These results indicate that class selectivity in individual units is neither sufficient nor strictly necessary for DNN performance, and more generally encourage caution when focusing on the properties of single units as representative of the mechanisms by which DNNs function.
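The abstract does not spell out the selectivity metric or the form of the regularizer, so the following is only a minimal sketch under stated assumptions. It assumes a per-unit selectivity index that compares the largest class-conditional mean activation with the mean over the remaining classes, and a loss that adds a scaled selectivity term to the task loss. The function names, the `alpha` sign convention, and the `eps` constant are illustrative choices, not taken from the text above.

```python
import numpy as np

def class_selectivity_index(activations, labels, num_classes, eps=1e-7):
    """Assumed per-unit selectivity index, roughly in [0, 1].

    activations: (n_samples, n_units), assumed non-negative (e.g. post-ReLU).
    labels:      (n_samples,) integer class ids; every class in
                 range(num_classes) is assumed to appear at least once.
    """
    activations = np.asarray(activations, dtype=float)
    labels = np.asarray(labels)
    # Class-conditional mean activation per unit: (num_classes, n_units).
    class_means = np.stack(
        [activations[labels == c].mean(axis=0) for c in range(num_classes)]
    )
    mu_max = class_means.max(axis=0)
    # Mean response over all classes except the preferred one.
    mu_rest = (class_means.sum(axis=0) - mu_max) / (num_classes - 1)
    return (mu_max - mu_rest) / (mu_max + mu_rest + eps)

def regularized_loss(task_loss, activations, labels, num_classes, alpha):
    """Task loss plus a selectivity term (hypothetical sign convention).

    alpha < 0 penalizes selectivity; alpha > 0 rewards it.
    """
    si = class_selectivity_index(activations, labels, num_classes)
    return task_loss - alpha * si.mean()

# Toy check: unit 0 fires only for class 0, unit 1 fires equally for both.
acts = np.array([[1.0, 1.0], [1.0, 1.0], [0.0, 1.0], [0.0, 1.0]])
labels = np.array([0, 0, 1, 1])
si = class_selectivity_index(acts, labels, num_classes=2)
```

In this toy case the first unit scores close to 1 (fully selective) and the second scores 0 (non-selective). In an actual training setup the same computation would need to be written in a differentiable framework so that the selectivity term contributes gradients.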
