Selective Complementary Feature Fusion and Modal Feature Compression Interaction for Brain Tumor Segmentation

Paper and Code

Mar 20, 2025

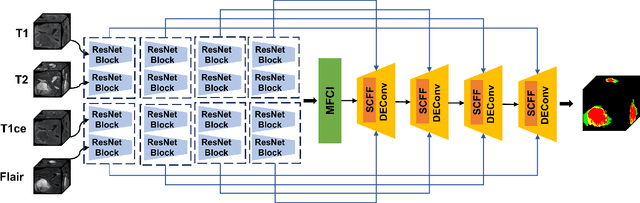

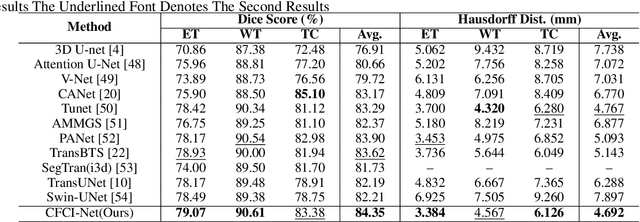

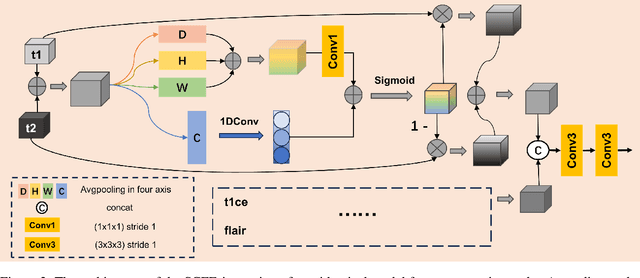

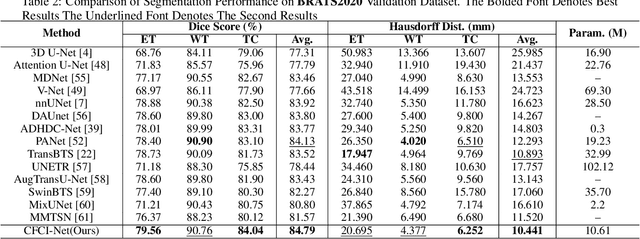

An efficient modal feature fusion strategy is key to accurate segmentation of brain glioma. However, because each MRI modality has its own specific characteristics, cross-modal fusion is difficult when the modal features differ greatly, and the model ends up ignoring rich feature information. In addition, the proliferation of feature dimensions in parallel networks causes redundant multi-modal feature interaction, which further increases the difficulty of multi-modal feature fusion at the bottom end. To solve these problems, we propose a novel complementary feature compression interaction network (CFCI-Net), which realizes complementary fusion and compression interaction of multi-modal feature information with an efficient modal fusion strategy. First, we propose a selective complementary feature fusion (SCFF) module, which adaptively fuses rich cross-modal feature information using complementary soft selection weights. Second, we propose a modal feature compression interaction (MFCI) transformer to address the multi-modal fusion redundancy problem that arises when the feature dimension surges. The MFCI transformer consists of modal feature compression (MFC) and modal feature interaction (MFI), which perform redundant feature compression and multi-modal feature interactive learning, respectively. In MFI, we propose a hierarchical interactive attention mechanism based on multi-head attention. Evaluations on the BraTS2019 and BraTS2020 datasets demonstrate that CFCI-Net achieves superior results compared to state-of-the-art models. Code: https://github.com/CDmm0/CFCI-Net
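The abstract does not spell out how the complementary soft selection weights in SCFF are computed, so the following is only a minimal sketch of one plausible reading: a soft weight w in (0, 1) is applied to one modality's features and its complement 1 - w to the other's, so the two contributions always sum to one. The function name `complementary_fuse`, the per-channel `gate_weights`, and the gating formula are assumptions made for illustration, not the authors' implementation (see the linked repository for that).

```python
import math


def sigmoid(x):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def complementary_fuse(feat_a, feat_b, gate_weights, gate_bias=0.0):
    """Fuse two per-channel feature vectors with complementary soft weights.

    feat_a, feat_b: lists of floats, one value per channel (for example,
        pooled features from two MRI modalities).
    gate_weights: hypothetical learned scalars, one per channel (assumption).
    gate_bias: hypothetical learned bias shared by all channels (assumption).

    Returns (fused, weights), where weights[i] is the soft selection weight
    given to feat_a[i] and 1 - weights[i] is given to feat_b[i].
    """
    if not (len(feat_a) == len(feat_b) == len(gate_weights)):
        raise ValueError("all inputs must have the same number of channels")

    fused, weights = [], []
    for a, b, g in zip(feat_a, feat_b, gate_weights):
        # Soft selection weight in (0, 1); this gating form is an assumption.
        w = sigmoid(g * (a - b) + gate_bias)
        weights.append(w)
        # Complementary fusion: the two weights sum to exactly 1.
        fused.append(w * a + (1.0 - w) * b)
    return fused, weights


if __name__ == "__main__":
    t1_features = [0.9, 0.1, 0.4]      # made-up values for illustration
    flair_features = [0.2, 0.8, 0.4]   # made-up values for illustration
    fused, weights = complementary_fuse(
        t1_features, flair_features, gate_weights=[2.0, 2.0, 2.0]
    )
    print("weights:", weights)
    print("fused:  ", fused)
```

Because the weights are complementary, each fused value is a convex combination of the two inputs and therefore always lies between them; a gate weight of zero reduces the fusion to a plain average.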
