SEA: State-Exchange Attention for High-Fidelity Physics-Based Transformers


Oct 20, 2024

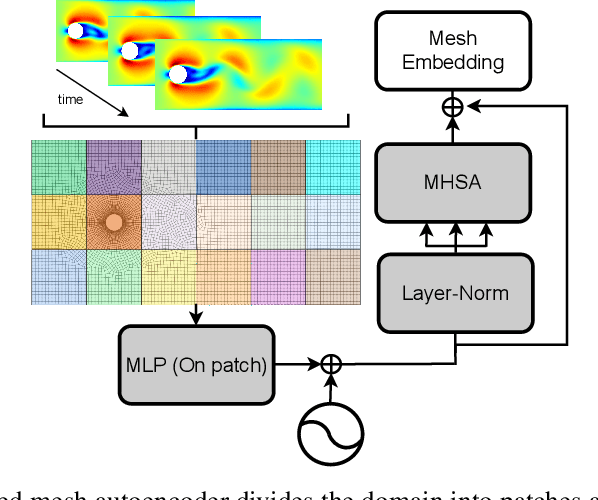

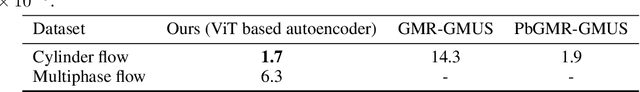

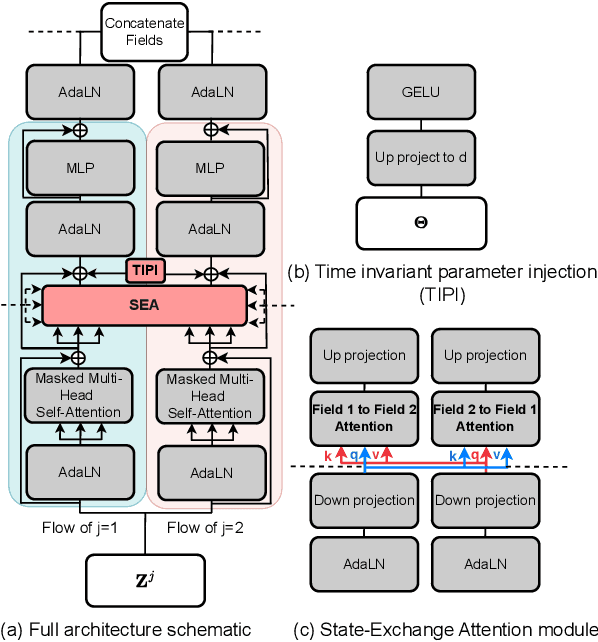

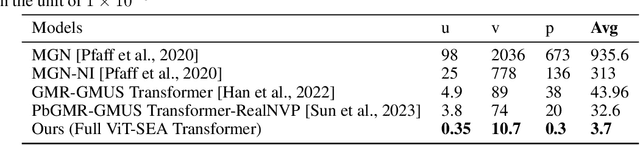

Sequential networks have shown promise in estimating field variables for dynamical systems, but they often suffer from high rollout errors. Because each predicted step feeds the next, errors compound, and estimates become increasingly unreliable as the network predicts further into the future. Here, we introduce the State-Exchange Attention (SEA) module, a novel transformer-based module that enables information exchange between encoded fields through multi-head cross-attention. This cross-field, multidirectional design lets every state variable in the system exchange information with every other, capturing physical relationships and symmetries between fields. In addition, we incorporate a ViT-like architecture to generate spatially coherent mesh embeddings, further improving the model's ability to capture spatial dependencies in the data. This enhances the model's ability to represent complex interactions between the field variables, reducing rollout error accumulation. Our results show that the Transformer model integrated with the SEA module outperforms competitive baseline models, including the PbGMR-GMUS Transformer-RealNVP and the GMR-GMUS Transformer, reducing error by 88% and 91%, respectively, and achieving state-of-the-art performance. Furthermore, we demonstrate that the SEA module alone can reduce errors by 97% for state variables that are highly dependent on other states of the system.
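To make the idea of cross-field exchange concrete, the following is a minimal NumPy sketch of multi-head cross-attention between encoded fields. It is not the authors' implementation: the field names (`velocity_x`, `velocity_y`, `pressure`), the helper names `cross_attention` and `state_exchange`, the random weights, the residual update, and the choice to let each field attend to the concatenated tokens of all other fields are assumptions made purely for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(query_tokens, context_tokens, w_q, w_k, w_v, w_o, num_heads):
    """Multi-head cross-attention: query_tokens attend to context_tokens.

    query_tokens: (n_q, d), context_tokens: (n_c, d), all weights: (d, d).
    Returns the attended output (n_q, d) and the weights (num_heads, n_q, n_c).
    """
    n_q, d = query_tokens.shape
    n_c = context_tokens.shape[0]
    d_head = d // num_heads

    def split_heads(x, n):
        # (n, d) -> (num_heads, n, d_head)
        return x.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    q = split_heads(query_tokens @ w_q, n_q)
    k = split_heads(context_tokens @ w_k, n_c)
    v = split_heads(context_tokens @ w_v, n_c)

    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, n_q, n_c)
    weights = softmax(scores, axis=-1)
    heads = weights @ v                                   # (heads, n_q, d_head)
    merged = heads.transpose(1, 0, 2).reshape(n_q, d)     # concatenate heads
    return merged @ w_o, weights


def state_exchange(fields, params, num_heads):
    """Hypothetical exchange step: every field queries all *other* fields.

    fields: dict name -> (n_tokens, d) encoded field tokens.
    params: dict name -> (w_q, w_k, w_v, w_o) for that field (assumed layout).
    """
    updated = {}
    for name, tokens in fields.items():
        others = np.concatenate(
            [t for other, t in fields.items() if other != name], axis=0
        )
        exchanged, _ = cross_attention(tokens, others, *params[name], num_heads=num_heads)
        updated[name] = tokens + exchanged  # residual connection (assumption)
    return updated


# Toy data: three illustrative fields, each encoded as 4 tokens of width 8.
rng = np.random.default_rng(0)
d_model, n_tokens, num_heads = 8, 4, 2
fields = {
    name: rng.normal(size=(n_tokens, d_model))
    for name in ("velocity_x", "velocity_y", "pressure")
}
params = {
    name: tuple(rng.normal(scale=0.1, size=(d_model, d_model)) for _ in range(4))
    for name in fields
}

updated = state_exchange(fields, params, num_heads)
print({name: tokens.shape for name, tokens in updated.items()})
```

In this sketch each field keeps its own token count and embedding width, while its updated tokens depend on every other field. That dependence is what the abstract calls multidirectional exchange; the actual SEA module may arrange the attention and the surrounding layers differently.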
