SE-MelGAN -- Speaker Agnostic Rapid Speech Enhancement

Jun 13, 2020

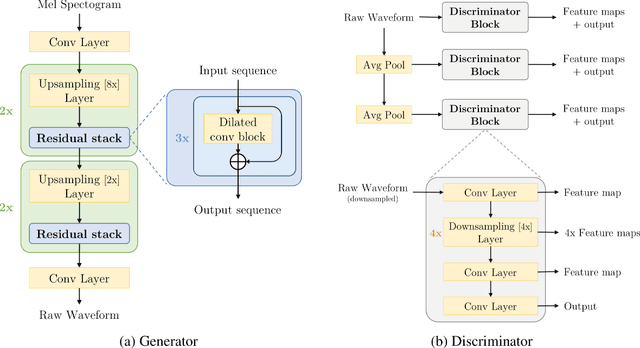

Recent advances in Generative Adversarial Networks in the speech synthesis domain [3], [2] have shown that it is possible to train GANs [8] reliably for high-quality, coherent waveform generation from mel-spectrograms. We propose that MelGAN's [3] robustness in learning speech features can be transferred to the speech enhancement and noise reduction domain without any modification to the model. Our proposed method generalizes over a multi-speaker speech dataset and robustly handles unseen background noises during inference. We also show that increasing the batch size for this particular approach not only yields better speech results, but also makes generalization over a multi-speaker dataset easier and leads to faster convergence. Additionally, it outperforms the previous state-of-the-art GAN approach for speech enhancement, SEGAN [5], in two respects: (1) quality and (2) speed. The proposed method runs more than 100x faster than real time on a GPU and more than 2x faster than real time on a CPU without any hardware optimization, matching the speed of MelGAN [3].
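
To make the "faster than real time" figures concrete, here is a minimal sketch of how such a speedup factor is commonly computed: the duration of the audio divided by the wall-clock time spent processing it. The function name `realtime_speedup`, the 16 kHz sample rate, and the timing values are illustrative assumptions and are not taken from the paper.

```python
def realtime_speedup(num_samples: int, sample_rate: int, processing_seconds: float) -> float:
    """Return how many times faster than real time a clip was processed.

    A value above 1.0 means the audio was processed in less time than
    it takes to play back.
    """
    if sample_rate <= 0:
        raise ValueError("sample_rate must be positive")
    if processing_seconds <= 0:
        raise ValueError("processing_seconds must be positive")
    audio_seconds = num_samples / sample_rate  # playback duration of the clip
    return audio_seconds / processing_seconds


if __name__ == "__main__":
    # Hypothetical numbers: 10 s of audio at an assumed 16 kHz sample rate.
    samples = 10 * 16_000
    gpu_factor = realtime_speedup(samples, 16_000, processing_seconds=0.1)
    cpu_factor = realtime_speedup(samples, 16_000, processing_seconds=5.0)
    print(f"GPU: about {gpu_factor:.0f}x real time")
    print(f"CPU: about {cpu_factor:.0f}x real time")
```

Under this definition, "more than 100x faster than real time" would mean that a 10-second clip is enhanced in under 0.1 seconds, and "more than 2x" would mean in under 5 seconds.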
