Scale-Robust Localization Using General Object Landmarks


May 24, 2018

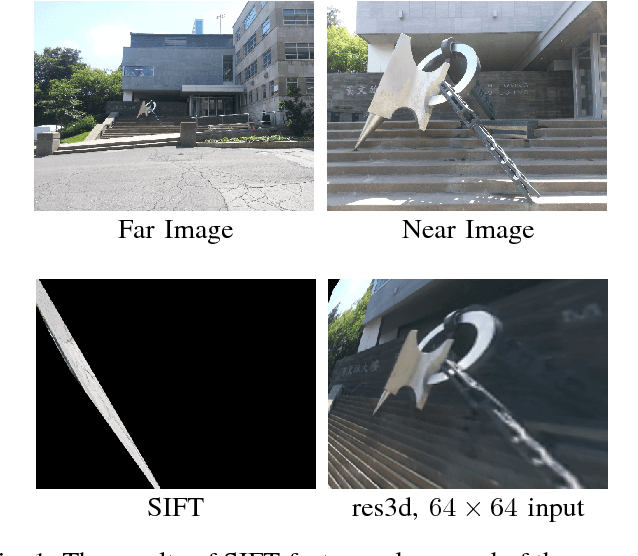

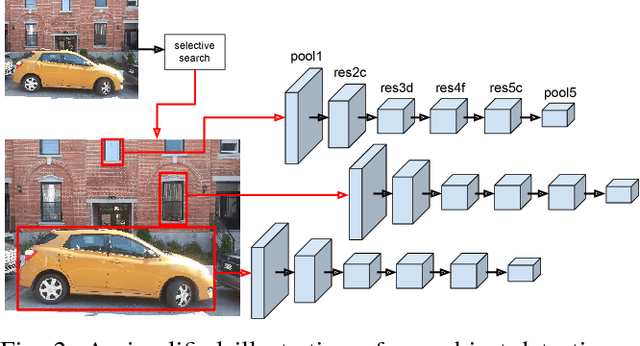

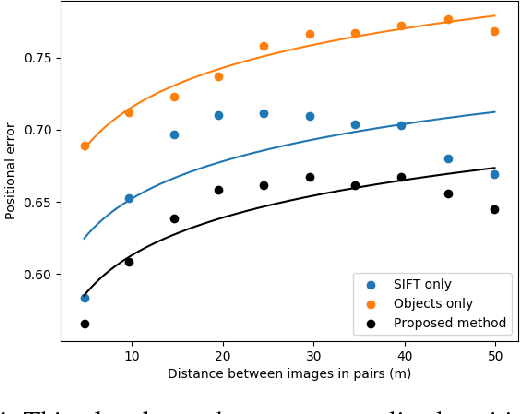

Visual localization under large changes in scale is an important capability in many robotic mapping applications, such as localizing at low altitudes in maps built at high altitudes, or performing loop closure over long distances. Existing approaches, however, are robust only up to about a $3\times$ difference in scale between map and query images. We propose a novel combination of deep-learning-based object features and state-of-the-art SIFT point features that yields improved robustness to scale change. This technique is training-free and class-agnostic, and can in principle be deployed in any environment out of the box. We evaluate the proposed technique on the KITTI Odometry benchmark and on a novel dataset of outdoor images exhibiting changes in visual scale of $7\times$ and greater, which we have released to the public. Our technique consistently outperforms localization using either SIFT features or the proposed object features alone, achieving both greater accuracy and much lower failure rates under large changes in scale.
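To make the idea of combining two kinds of correspondences concrete, the following is a minimal sketch and not the paper's implementation. It assumes that object-level matches and point-level matches have already been found, pools them, and fits a 2D similarity transform by least squares to recover the scale change between map and query. The function `fit_similarity`, the variable names, and the coordinates are all hypothetical and chosen for illustration; the abstract does not specify how the two feature types are actually fused.

```python
# Illustrative sketch only: pool two hypothetical sets of 2D correspondences
# and fit a similarity transform (scale, rotation, translation).
# A 2D point (x, y) is treated as the complex number x + iy, so the
# transform is simply w = a * z + b with complex a and b.

def fit_similarity(src, dst):
    """Least-squares fit of dst ~ a * src + b, returning complex (a, b)."""
    n = len(src)
    zs = [complex(x, y) for x, y in src]
    ws = [complex(x, y) for x, y in dst]
    z_mean = sum(zs) / n
    w_mean = sum(ws) / n
    # Closed-form solution of the complex linear least-squares problem.
    num = sum((w - w_mean) * (z - z_mean).conjugate() for z, w in zip(zs, ws))
    den = sum(abs(z - z_mean) ** 2 for z in zs)
    a = num / den
    b = w_mean - a * z_mean
    return a, b

# Hypothetical matches as (map_point, query_point) pairs. The values are
# synthetic, generated with a 7x scale and a translation of (10, -5).
object_matches = [((0, 0), (10, -5)), ((1, 0), (17, -5))]   # e.g. object centers
point_matches = [((0, 1), (10, 2)), ((2, 2), (24, 9))]      # e.g. keypoints

pooled = object_matches + point_matches
src, dst = zip(*pooled)
a, b = fit_similarity(src, dst)
scale = abs(a)  # magnitude of a is the estimated scale change
print(f"estimated scale: {scale:.2f}, translation: ({b.real:.1f}, {b.imag:.1f})")
```

A real pipeline would wrap a fit like this in an outlier-rejection scheme such as RANSAC, since both kinds of matches can contain mismatches.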
