sat2pc: Estimating Point Cloud of Building Roofs from 2D Satellite Images


May 25, 2022

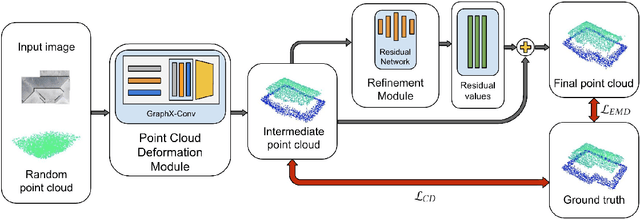

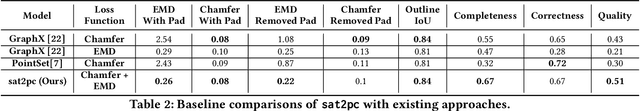

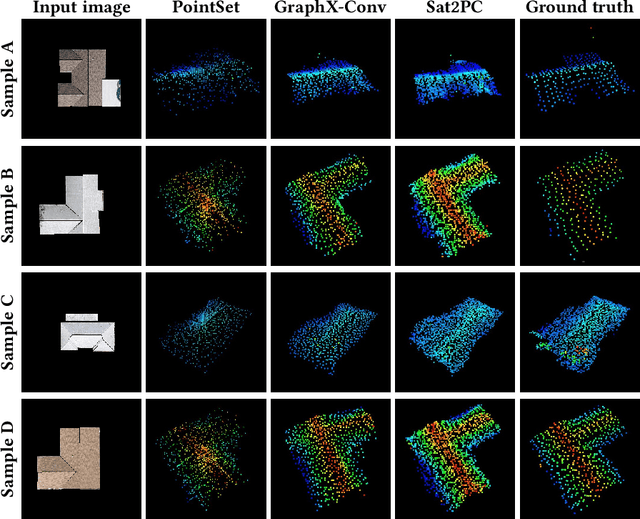

Three-dimensional (3D) urban models have gained interest because of their applications in use cases such as urban planning and virtual reality. However, generating these 3D representations requires LiDAR data, which are not always readily available, so automated 3D model generation algorithms are applicable in only a few locations. In this paper, we propose sat2pc, a deep learning architecture that predicts the point cloud of a building roof from a single 2D satellite image. Our architecture combines Chamfer distance and Earth Mover's Distance (EMD) loss, resulting in better 2D-to-3D performance. We extensively evaluate our model and perform ablation studies on a building roof dataset. Our results show that sat2pc outperforms existing baselines by at least 18.6%. Further, we show that the predicted point cloud captures more detail and geometric characteristics than the point clouds produced by other baselines.
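To make the combined objective concrete, the following is a minimal sketch of how a Chamfer distance term and an EMD term could be added into one loss between a predicted and a reference point cloud. It is an illustration under stated assumptions, not the sat2pc implementation: the abstract does not say how the two terms are weighted or which Chamfer variant is used, so the weights `alpha` and `beta`, the squared-distance Chamfer form, and all function names here are hypothetical. The EMD term is computed by brute force over all one-to-one matchings, which is exact but only feasible for a handful of points; a training pipeline would rely on an approximate or assignment-based solver instead.

```python
import itertools
import math


def chamfer_distance(pred, target):
    """Symmetric Chamfer distance (assumed variant: mean squared
    nearest-neighbour distance, summed over both directions)."""
    def one_way(a, b):
        return sum(min(math.dist(p, q) ** 2 for q in b) for p in a) / len(a)

    return one_way(pred, target) + one_way(target, pred)


def emd_brute_force(pred, target):
    """Exact EMD for two equal-sized point sets: the mean point-to-point
    distance under the cheapest one-to-one matching.
    Tries every permutation, so it is only usable for toy inputs."""
    if len(pred) != len(target):
        raise ValueError("this sketch assumes equal-sized point sets")
    best_total = min(
        sum(math.dist(p, q) for p, q in zip(pred, perm))
        for perm in itertools.permutations(target)
    )
    return best_total / len(pred)


def combined_loss(pred, target, alpha=1.0, beta=1.0):
    """Weighted sum of the two terms; alpha and beta are hypothetical
    weights chosen for illustration, not values from the paper."""
    return alpha * chamfer_distance(pred, target) + beta * emd_brute_force(pred, target)


# Toy example: a three-point "roof" and a copy lifted by 0.5 along z.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
predicted = [(x, y, z + 0.5) for x, y, z in reference]
loss = combined_loss(predicted, reference)
```

The two terms penalize different things: the Chamfer term only asks that every point has some nearby neighbour in the other cloud, while the EMD term forces a one-to-one matching and therefore also penalizes uneven point density.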
