SAFFRON and LORD Ensure Online Control of the False Discovery Rate Under Positive Dependence

Oct 27, 2021

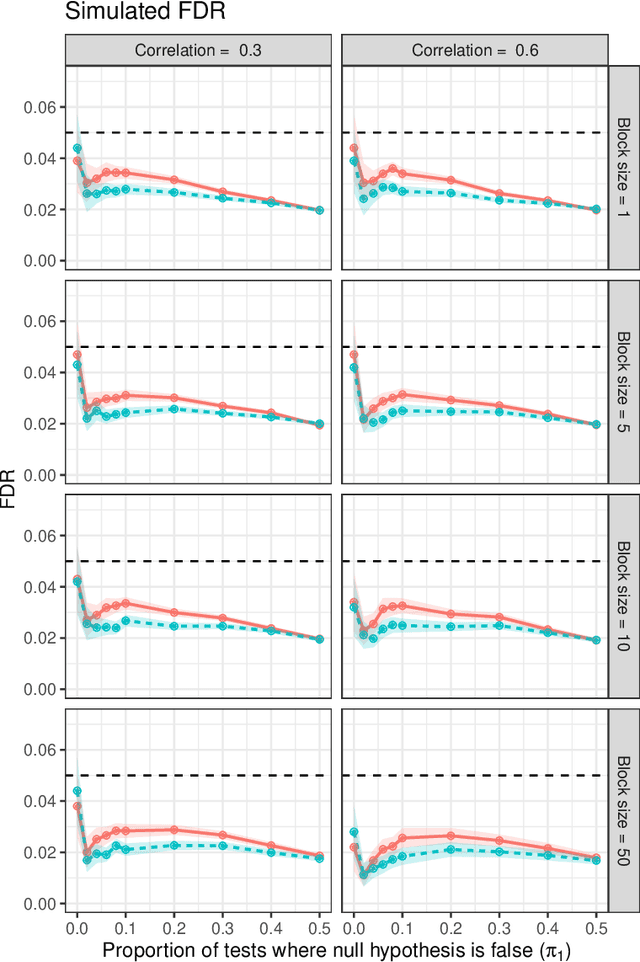

Online testing procedures assume that hypotheses are observed in sequence, and allow the significance thresholds for upcoming tests to depend on the test statistics observed so far. Some of the most popular online methods include alpha investing, LORD++ (hereafter, LORD), and SAFFRON. These three methods have been shown to provide online control of the "modified" false discovery rate (mFDR). However, to our knowledge, they have only been shown to control the traditional false discovery rate (FDR) under an independence condition on the test statistics. Our work bolsters these results by showing that SAFFRON and LORD additionally ensure online control of the FDR under nonnegative dependence. Because alpha investing can be recovered as a special case of the SAFFRON framework, the same result applies to this method as well. Our result also allows for certain forms of adaptive stopping times, for example, stopping after a certain number of rejections have been observed. For convenience, we also provide simplified versions of the LORD and SAFFRON algorithms based on geometric alpha allocations.
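
To make the idea of thresholds that depend on past test outcomes concrete, here is a minimal sketch of the LORD++ update as it is commonly stated in the online FDR literature, paired with a geometric allocation sequence. It is not the simplified algorithm from the paper, which is not reproduced here. The function names, the initial wealth `w0`, and the decay `ratio` are assumptions chosen for illustration.

```python
from typing import List, Sequence


def geometric_gamma(k: int, ratio: float = 0.5) -> float:
    """Geometric allocation gamma_k = (1 - ratio) * ratio**(k - 1), k >= 1.

    The sequence is nonnegative and sums to 1 over k = 1, 2, ...
    (Illustrative choice; `ratio` is a hypothetical tuning parameter.)
    """
    return (1.0 - ratio) * ratio ** (k - 1)


def lord_plus_plus(
    p_values: Sequence[float],
    alpha: float = 0.05,
    w0: float = 0.025,
    ratio: float = 0.5,
) -> List[bool]:
    """Sketch of LORD++ with geometric allocations (assumed form).

    At time t the test level is
        gamma_t * w0
        + (alpha - w0) * gamma_{t - tau_1}      if a first rejection exists
        + alpha * sum_{j >= 2} gamma_{t - tau_j}
    where tau_j is the time of the j-th rejection before t.
    """
    rejection_times: List[int] = []
    decisions: List[bool] = []
    for t, p in enumerate(p_values, start=1):
        # Share of the initial wealth allotted to test t.
        level = geometric_gamma(t, ratio) * w0
        # Wealth earned back from each earlier rejection.
        for j, tau in enumerate(rejection_times):
            payout = (alpha - w0) if j == 0 else alpha
            level += payout * geometric_gamma(t - tau, ratio)
        reject = p <= level
        decisions.append(reject)
        if reject:
            rejection_times.append(t)
    return decisions


if __name__ == "__main__":
    # Thresholds here are 0.0125, 0.01875, 0.009375, 0.0301875.
    print(lord_plus_plus([0.001, 0.5, 0.004, 0.9]))
    # [True, False, True, False]
```

The level at each step is computed only from decisions made at earlier steps, which is the sense in which the thresholds depend on the test statistics observed so far. A geometric sequence is convenient because it sums to 1 in closed form, although it spends wealth quickly when rejections are rare.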