Safe Multi-Agent Reinforcement Learning with Bilevel Optimization in Autonomous Driving

May 28, 2024

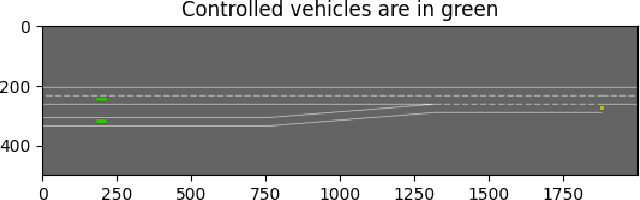

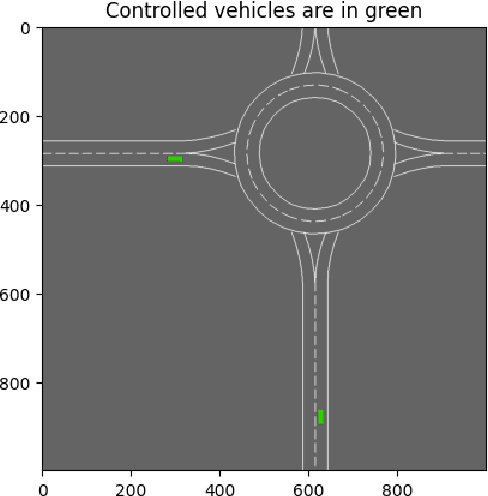

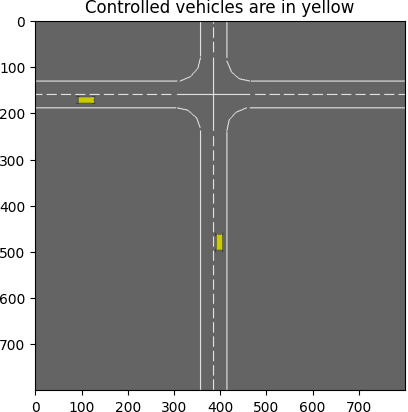

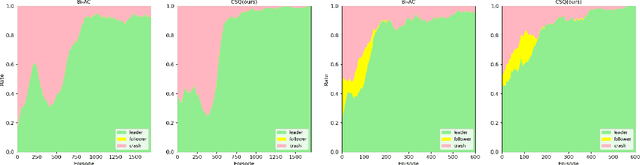

Ensuring safety in multi-agent reinforcement learning (MARL) is a critical challenge, particularly when deploying it in real-world applications such as autonomous driving. To address this challenge, traditional safe MARL methods extend MARL approaches to incorporate safety considerations, aiming to minimize safety risk values. However, these safe MARL algorithms often fail to model other agents and lack convergence guarantees, particularly in dynamically complex environments. In this study, we propose a safe MARL method grounded in a Stackelberg model with bi-level optimization, for which a convergence analysis is provided. Building on this theoretical analysis, we develop two practical algorithms, Constrained Stackelberg Q-learning (CSQ) and Constrained Stackelberg Multi-Agent Deep Deterministic Policy Gradient (CS-MADDPG), designed to facilitate MARL decision-making in autonomous driving applications. To evaluate the effectiveness of our algorithms, we developed a safe MARL autonomous driving benchmark and conducted experiments on challenging autonomous driving scenarios, such as merges, roundabouts, intersections, and racetracks. The experimental results indicate that our algorithms, CSQ and CS-MADDPG, outperform several strong MARL baselines, such as Bi-AC, MACPO, and MAPPO-L, in both reward and safety performance. The demos and source code are available at https://github.com/SafeRL-Lab/Safe-MARL-in-Autonomous-Driving.git.
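To make the Stackelberg, bi-level idea more concrete, the following is a minimal sketch of constrained leader-follower action selection in a single state with two agents. It is not the paper's CSQ implementation: the function name, the tabular reward tables `q_lead` and `q_foll`, the cost tables `c_lead` and `c_foll`, the cost budgets `d_lead` and `d_foll`, and the least-cost fallback rule are all assumptions made for illustration.

```python
import numpy as np


def constrained_stackelberg_action(q_lead, q_foll, c_lead, c_foll, d_lead, d_foll):
    """Pick a (leader, follower) joint action for one state.

    Hypothetical inputs: each table has shape (n_leader_actions, n_follower_actions).
    q_* hold reward estimates, c_* hold cost estimates, d_* are cost budgets.
    """
    best, best_value = None, -np.inf
    safest, safest_cost = None, np.inf
    for a in range(q_lead.shape[0]):
        # Lower level: the follower best-responds to leader action a,
        # restricted to follower actions within its own cost budget.
        ok = np.flatnonzero(c_foll[a] <= d_foll)
        if ok.size:
            b = int(ok[np.argmax(q_foll[a, ok])])
        else:
            # Assumed fallback: no safe response, so take the least costly one.
            b = int(np.argmin(c_foll[a]))

        # Track the joint action with the lowest total cost as a last resort.
        total_cost = c_lead[a, b] + c_foll[a, b]
        if total_cost < safest_cost:
            safest, safest_cost = (a, b), total_cost

        # Upper level: the leader maximizes its own value over actions whose
        # induced joint action satisfies both cost budgets.
        feasible = ok.size > 0 and c_lead[a, b] <= d_lead
        if feasible and q_lead[a, b] > best_value:
            best, best_value = (a, b), q_lead[a, b]
    return best if best is not None else safest


# Toy tables (made-up numbers): leader action 0 looks best on reward,
# but the follower's response to it is costly for the leader.
q_lead = np.array([[5.0, 6.0], [3.0, 4.0]])
q_foll = np.array([[1.0, 2.0], [2.0, 1.0]])
c_lead = np.array([[0.0, 2.0], [0.0, 0.0]])
c_foll = np.zeros((2, 2))

print(constrained_stackelberg_action(q_lead, q_foll, c_lead, c_foll, 1.0, 1.0))
```

With a leader budget of 1.0 the sketch selects joint action (1, 0), whereas a loose budget such as 10.0 lets the leader take the higher-reward pair (0, 1). The leader anticipates the follower's best response instead of treating it as part of the environment, which is the modeling of other agents that the abstract says earlier safe MARL methods lack.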
