SA vs SAA for population Wasserstein barycenter calculation

Jan 30, 2020

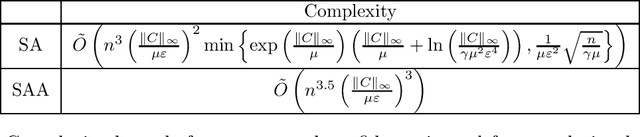

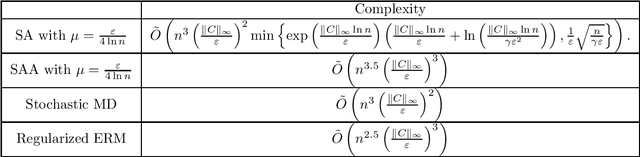

In the machine learning and optimization communities, there are two main approaches to convex risk minimization. The first is Stochastic Approximation (SA), an online approach; the second is Sample Average Approximation (SAA), also known as the Monte Carlo approach or Empirical Risk Minimization, an offline approach that requires proper regularization in the non-strongly convex case. It is currently known that the two approaches are equivalent on average (up to a logarithmic factor) in terms of oracle complexity, that is, the required number of stochastic gradient evaluations. What about total complexity? The answer depends on the specific problem. However, starting from the work of [Nemirovski et al. (2009)], it has been generally accepted that SA is better than SAA. Nevertheless, for large-scale problems SA may run into memory problems, since storing all the data on one machine and organizing online access to it can be impossible without communication with other machines. In contrast to SA, SAA allows parallel/distributed computation. In this paper we show that SAA may outperform SA in the problem of estimating the population (μ-entropy regularized) Wasserstein barycenter, even in the non-parallel (non-decentralized) setup.
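To make the online/offline distinction concrete, here is a minimal sketch on a toy problem. It is not the paper's barycenter algorithm: it assumes a ridge-regularized least-squares risk as a stand-in strongly convex objective. The function names, the step-size offset `k0`, and all constants are illustrative assumptions. SA consumes one sample per gradient step, while SAA minimizes the empirical risk over all samples at once.

```python
import numpy as np

def make_samples(n, x_true, noise=0.1, seed=0):
    """Draw n i.i.d. pairs (a, b) with b = a^T x_true + Gaussian noise."""
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((n, x_true.size))
    b = A @ x_true + noise * rng.standard_normal(n)
    return A, b

def sa_estimate(A, b, mu, k0=20):
    """SA (online): one stochastic gradient step per sample.

    The step size 1 / (mu * (k + k0)) is an assumed schedule; the offset k0
    only keeps the first steps small enough to be stable.
    """
    x = np.zeros(A.shape[1])
    for k, (a, bk) in enumerate(zip(A, b), start=1):
        # Gradient of 0.5 * (a^T x - b)^2 + 0.5 * mu * ||x||^2 at this sample.
        grad = a * (a @ x - bk) + mu * x
        x -= grad / (mu * (k + k0))
    return x

def saa_estimate(A, b, mu):
    """SAA (offline): minimize the regularized empirical risk in closed form."""
    n, d = A.shape
    return np.linalg.solve(A.T @ A / n + mu * np.eye(d), A.T @ b / n)

x_true = np.ones(5)
mu = 1.0
A, b = make_samples(10_000, x_true)

# With a ~ N(0, I), the population minimizer is x_true / (1 + mu).
x_star = x_true / (1.0 + mu)
x_sa = sa_estimate(A, b, mu)
x_saa = saa_estimate(A, b, mu)

print("SA  error:", np.linalg.norm(x_sa - x_star))
print("SAA error:", np.linalg.norm(x_saa - x_star))
```

Both estimators use the same number of samples here, which mirrors the oracle-complexity comparison; the total cost differs because SAA needs the whole sample available to an offline solver, whereas SA touches each sample once.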
