Robust Probabilistic Inference in Distributed Systems

Paper and Code

Jul 11, 2012

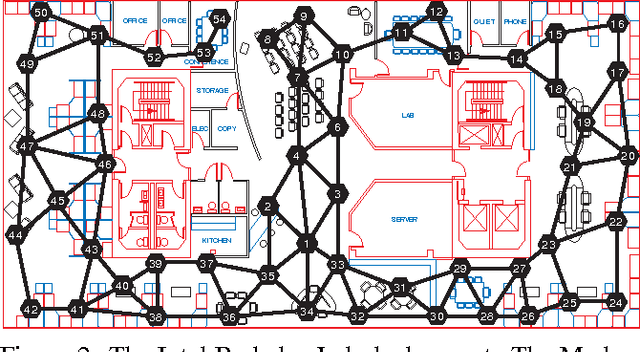

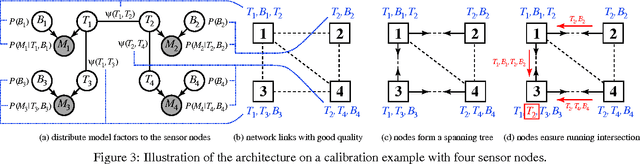

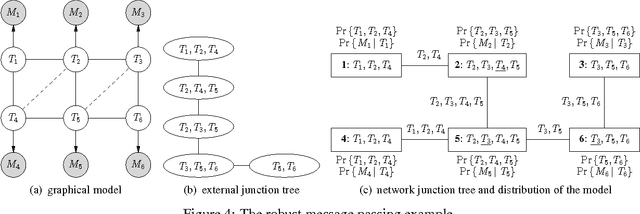

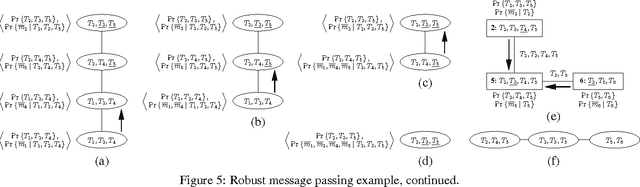

Probabilistic inference problems arise naturally in distributed systems such as sensor networks and teams of mobile robots. Inference algorithms that use message passing are a natural fit for distributed systems, but they must be robust to the failures that arise in real-world settings, such as unreliable communication and node failures. Unfortunately, the popular sum-product algorithm can yield very poor estimates in these settings because the nodes' beliefs before convergence can be arbitrarily different from the correct posteriors. In this paper, we present a new message passing algorithm for probabilistic inference which provides several crucial guarantees that the standard sum-product algorithm does not. Not only does it converge to the correct posteriors, but it is also guaranteed to yield a principled approximation at any point before convergence. In addition, the computational complexity of the message passing updates depends only upon the model, and is independent of the network topology of the distributed system. We demonstrate the approach with detailed experimental results on a distributed sensor calibration task using data from an actual sensor network deployment.
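To make the remark about pre-convergence beliefs concrete, the following is a minimal sketch of the standard sum-product algorithm on a toy model. It is not the algorithm proposed in the paper. The three-node chain, the potential values, and all function names are assumptions chosen purely for illustration. The sketch compares each node's belief after every synchronous round of message passing with the exact marginals obtained by brute-force enumeration.

```python
from itertools import product

# Hypothetical toy model (not from the paper): a chain X0 - X1 - X2 of binary variables.
unary = [[0.9, 0.1], [0.5, 0.5], [0.2, 0.8]]  # phi_i(x_i), illustrative values
pair = [[0.9, 0.1], [0.1, 0.9]]               # psi(x_i, x_j), favors agreement
edges = [(0, 1), (1, 2)]
neighbors = {0: [1], 1: [0, 2], 2: [1]}


def normalize(v):
    s = sum(v)
    return [x / s for x in v]


def exact_marginals():
    """Brute-force marginals by summing over all joint assignments."""
    marg = [[0.0, 0.0] for _ in unary]
    for xs in product([0, 1], repeat=len(unary)):
        w = 1.0
        for i, x in enumerate(xs):
            w *= unary[i][x]
        for i, j in edges:
            w *= pair[xs[i]][xs[j]]
        for i, x in enumerate(xs):
            marg[i][x] += w
    return [normalize(m) for m in marg]


def init_messages():
    """One uniform message per directed edge (i -> j)."""
    return {(i, j): [0.5, 0.5] for i in neighbors for j in neighbors[i]}


def sum_product_round(msgs):
    """One synchronous round: every message is recomputed from the old ones."""
    new = {}
    for (i, j) in msgs:
        out = []
        for xj in (0, 1):
            total = 0.0
            for xi in (0, 1):
                incoming = 1.0
                for k in neighbors[i]:
                    if k != j:
                        incoming *= msgs[(k, i)][xi]
                total += unary[i][xi] * pair[xi][xj] * incoming
            out.append(total)
        new[(i, j)] = normalize(out)
    return new


def beliefs(msgs):
    """Each node's current belief: local potential times all incoming messages."""
    result = []
    for i in neighbors:
        b = list(unary[i])
        for k in neighbors[i]:
            b = [b[x] * msgs[(k, i)][x] for x in (0, 1)]
        result.append(normalize(b))
    return result


exact = exact_marginals()
msgs = init_messages()
history = [beliefs(msgs)]  # beliefs before any message has been exchanged
for _ in range(2):         # two rounds suffice on this 3-node chain
    msgs = sum_product_round(msgs)
    history.append(beliefs(msgs))

for r, bs in enumerate(history):
    print(f"round {r}: P(X0=0) belief = {bs[0][0]:.4f}, exact = {exact[0][0]:.4f}")
```

In this toy model, node 0's belief remains at its local value until information from node 2 has propagated across the chain, and only then matches the exact marginal. If a message were lost or a node failed in the meantime, the node would be left holding the intermediate estimate, which is the failure mode the abstract describes.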
