Robust Longitudinal Control for Vehicular Autonomous Platoons Using Deep Reinforcement Learning

Paper and Code

May 31, 2022

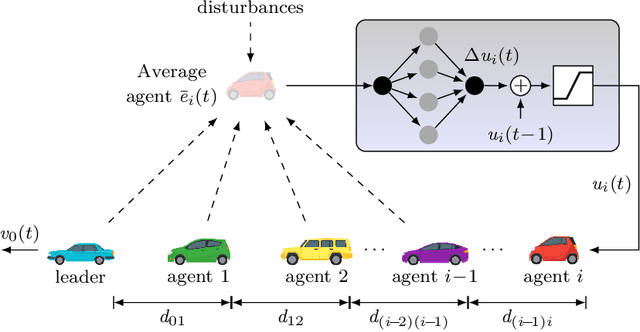

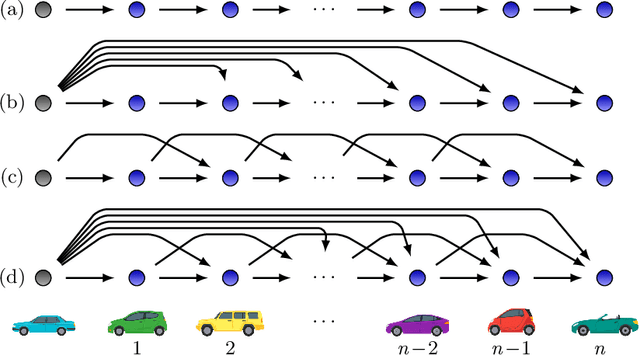

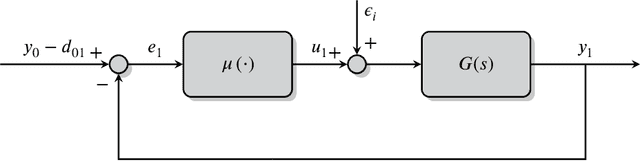

In the last few years, researchers have applied machine learning strategies in the context of vehicular platoons to increase the safety and efficiency of cooperative transportation. Reinforcement Learning methods have been employed in the longitudinal spacing control of Cooperative Adaptive Cruise Control systems, but to date, none of those studies have addressed problems of disturbance rejection in such scenarios. Characteristics such as uncertain parameters in the model and external interferences may prevent agents from reaching null-spacing errors when traveling at cruising speed. On the other hand, complex communication topologies lead to specific training processes that can not be generalized to other contexts, demanding re-training every time the configuration changes. Therefore, in this paper, we propose an approach to generalize the training process of a vehicular platoon, such that the acceleration command of each agent becomes independent of the network topology. Also, we have modeled the acceleration input as a term with integral action, such that the Convolutional Neural Network is capable of learning corrective actions when the states are disturbed by unknown effects. We illustrate the effectiveness of our proposal with experiments using different network topologies, uncertain parameters, and external forces. Comparative analyses, in terms of the steady-state error and overshoot response, were conducted against the state-of-the-art literature. The findings offer new insights concerning generalization and robustness of using Reinforcement Learning in the control of autonomous platoons.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge