Robust Feature Disentanglement in Imaging Data via Joint Invariant Variational Autoencoders: from Cards to Atoms

Paper and Code

Apr 20, 2021

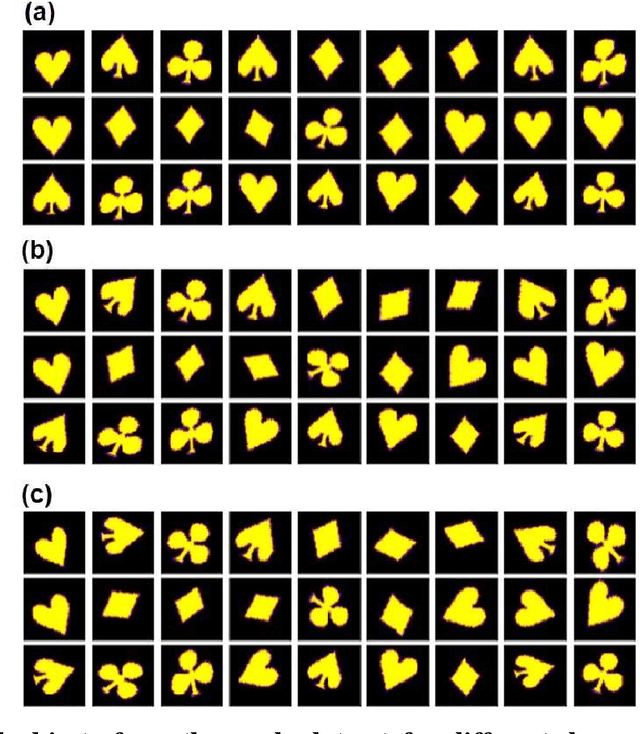

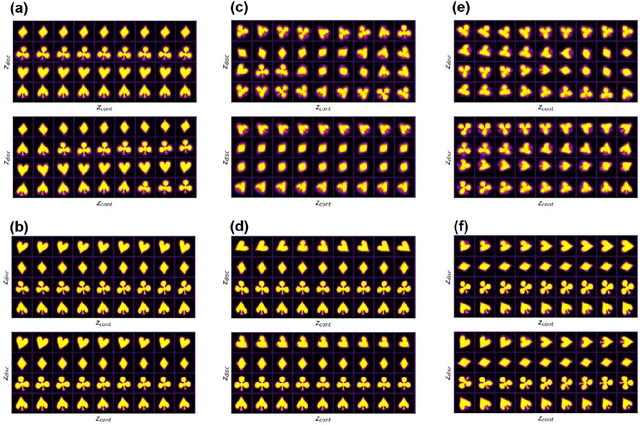

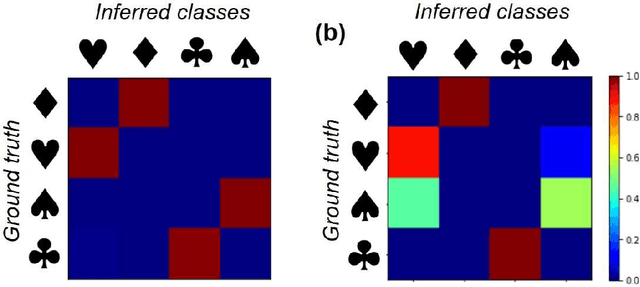

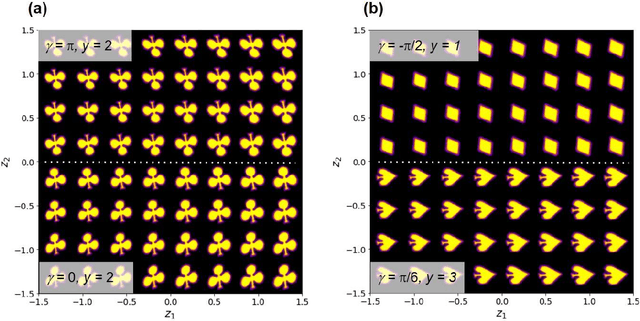

Recent advances in imaging, from celestial objects visualized via optical and radio telescopes to atoms and molecules resolved via electron and probe microscopes, are generating immense volumes of imaging data containing information about the structure of the universe from atomic to astronomic scales. Classical deep convolutional neural network architectures perform poorly on data sets with significant orientational disorder, that is, data sets containing multiple copies of the same or similar objects in arbitrary orientations in the image plane. Similarly, while clustering methods are well suited to classification into discrete classes, and manifold learning and variational autoencoder methods can disentangle representations of the data, the combined problem is ill-suited to the classical unsupervised learning paradigm. Here we introduce a joint rotationally (and translationally) invariant variational autoencoder (j-trVAE) that is ideally suited to solving this problem. The performance of this method is validated on several synthetic data sets and extended to high-resolution imaging data from electron and scanning probe microscopy. We show that latent space behaviors directly comport with the known physics of ferroelectric materials and quantum systems. We further note that engineering the latent space structure via an imposed topological structure or directed graph relationships allows for applications in topological discovery and causal physical learning.
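
To make the idea of orientational disorder concrete, the sketch below shows one common way a decoder can be made rotation- and translation-aware: it is evaluated on a coordinate grid that is first rotated and shifted by dedicated latent variables, so the remaining latent variable only has to describe the object itself. This is an illustrative assumption, not necessarily how j-trVAE is implemented; `make_grid`, `transform_grid`, and `toy_decoder` are hypothetical names, and the analytic `toy_decoder` stands in for a trained neural network.

```python
import numpy as np

def make_grid(n):
    """Return an (n*n, 2) array of (x, y) pixel coordinates spanning [-1, 1]."""
    axis = np.linspace(-1.0, 1.0, n)
    xx, yy = np.meshgrid(axis, axis)
    return np.stack([xx.ravel(), yy.ravel()], axis=1)

def transform_grid(grid, angle, shift):
    """Rotate the coordinates by `angle` (radians), then translate by `shift`."""
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, -s], [s, c]])
    return grid @ rot.T + np.asarray(shift, dtype=float)

def toy_decoder(coords, z):
    """Hypothetical stand-in for a learned decoder (assumption, not the paper's model):
    an anisotropic Gaussian blob whose width along x depends on the latent z."""
    x, y = coords[:, 0], coords[:, 1]
    return np.exp(-(x**2 / (0.05 + z**2) + y**2 / 0.02))

n = 32
grid = make_grid(n)

# Same content latent z, two different orientation latents.
img_a = toy_decoder(transform_grid(grid, 0.0, (0.0, 0.0)), z=0.3).reshape(n, n)
img_b = toy_decoder(transform_grid(grid, np.pi / 2, (0.0, 0.0)), z=0.3).reshape(n, n)
```

Here `img_a` and `img_b` differ pixel by pixel even though they depict the same object with the same `z`; only the angle passed to `transform_grid` changes. A model that does not separate orientation from content would have to spend latent capacity encoding that angle.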
