Robust Class-Conditional Distribution Alignment for Partial Domain Adaptation

Paper and Code

Oct 19, 2023

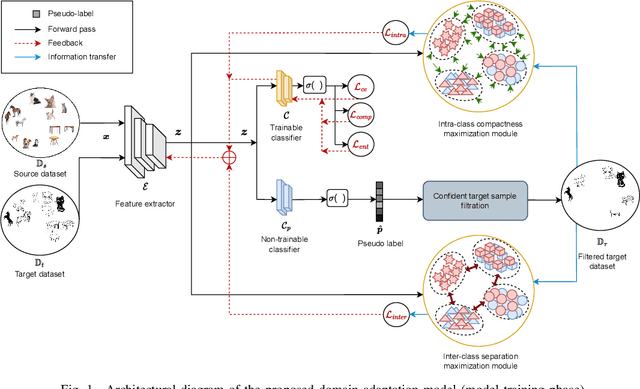

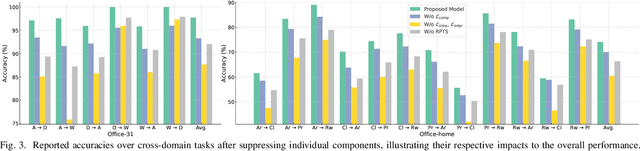

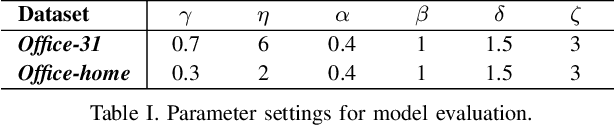

Unwanted samples from private source categories in the learning objective of a partial domain adaptation setup can lead to negative transfer and reduce classification performance. Existing methods, such as re-weighting or aggregating target predictions, are vulnerable to this issue, especially during initial training stages, and do not adequately address class-level feature alignment. Our proposed approach seeks to overcome these limitations by delving deeper than just the first-order moments to derive distinct and compact categorical distributions. We employ objectives that optimize the intra and inter-class distributions in a domain-invariant fashion and design a robust pseudo-labeling for efficient target supervision. Our approach incorporates a complement entropy objective module to reduce classification uncertainty and flatten incorrect category predictions. The experimental findings and ablation analysis of the proposed modules demonstrate the superior performance of our proposed model compared to benchmarks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge