Robotic Self-Assessment of Competence

Paper and Code

May 04, 2020

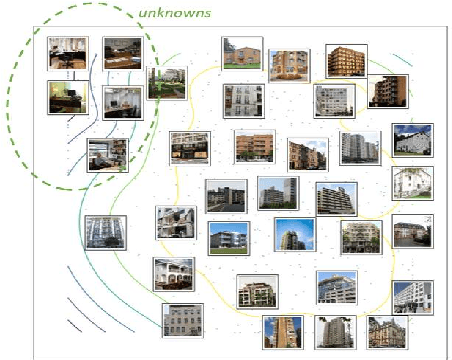

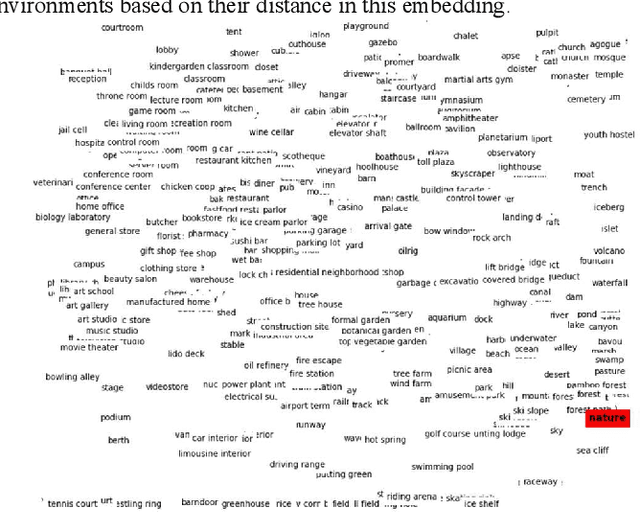

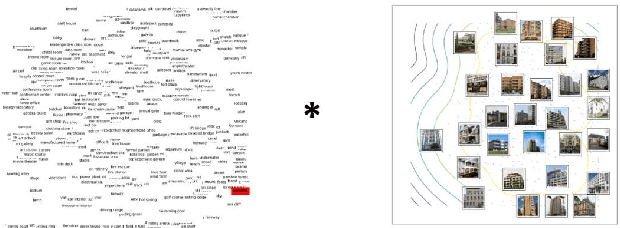

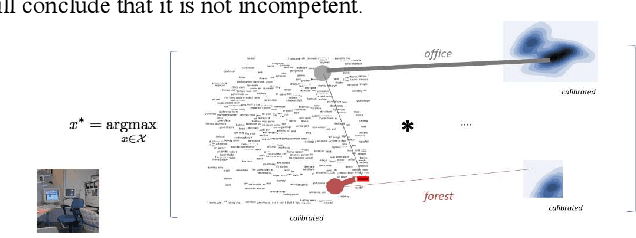

In robotics, one of the main challenges is that the on-board Artificial Intelligence (AI) must deal with different or unexpected environments. An AI agent may be incompetent in such environments, while the underlying model itself is not aware of this (e.g., deep learning models are often overly confident). This paper proposes two methods for the online assessment of the competence of the AI model: one for situations in which nothing is known about competence beforehand, and one for situations in which prior knowledge about competence is available in semantic form. The proposed method first assesses whether the current environment is known. If it is not, the robot asks a human for feedback about its competence. If it is, the robot assesses its competence by generalizing from earlier experience. Results on real data show the merit of competence assessment for a robot moving through various environments, in some of which it is competent and in others not. We discuss the role of the human in the robot's self-assessment of its competence, and the challenges of acquiring complementary information from the human that reinforces the assessments.
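The decision loop described in the abstract (check whether the environment is known, ask a human if not, otherwise generalize from earlier experience) can be illustrated with a minimal sketch. This is not the paper's implementation: the class name `CompetenceAssessor`, the Euclidean distance threshold used as the "known environment" test, and the majority vote over nearby remembered environments are all assumptions made for illustration. The abstract does not specify how environments are represented or how generalization is performed, and the variant with prior semantic knowledge is not covered here.

```python
import math


class CompetenceAssessor:
    """Hypothetical sketch of online competence self-assessment.

    Assumptions (not from the paper): environments are numeric feature
    tuples, "known" means within a distance threshold of a remembered
    environment, and generalization is a majority vote over those
    nearby memories.
    """

    def __init__(self, novelty_threshold):
        self.novelty_threshold = novelty_threshold
        self.memory = []  # list of (features, competent) pairs

    def _nearby(self, features):
        # Competence labels of remembered environments within the threshold.
        return [
            competent
            for stored, competent in self.memory
            if math.dist(features, stored) <= self.novelty_threshold
        ]

    def assess(self, features, ask_human):
        """Return (competent, source), where source is 'human' or 'memory'."""
        nearby = self._nearby(features)
        if not nearby:
            # Unknown environment: ask the human and remember the answer.
            competent = bool(ask_human(features))
            self.memory.append((tuple(features), competent))
            return competent, "human"
        # Known environment: generalize from earlier experience.
        # Ties count as "not competent", a deliberately cautious choice.
        votes = sum(nearby)
        return votes * 2 > len(nearby), "memory"


if __name__ == "__main__":
    # Stand-in for human feedback; a real system would query an operator.
    def operator(features):
        return features[0] < 5.0

    assessor = CompetenceAssessor(novelty_threshold=1.0)
    for env in [(0.0, 0.0), (0.2, 0.1), (9.0, 9.0), (9.1, 9.0)]:
        print(env, assessor.assess(env, operator))
```

In this sketch the human is consulted only when no remembered environment lies within the threshold, so the number of queries shrinks as the robot's experience grows. A larger threshold means fewer questions but a higher risk of generalizing to environments that are not actually similar.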
