RLStop: A Reinforcement Learning Stopping Method for TAR

Paper and Code

May 03, 2024

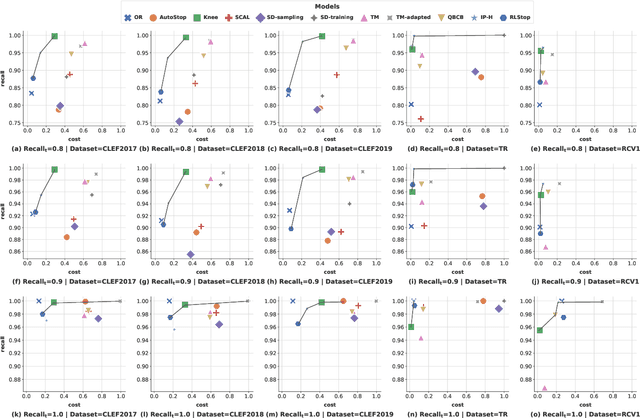

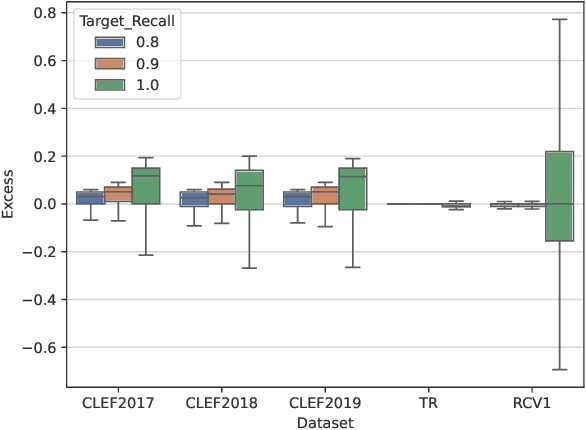

We present RLStop, a novel Technology Assisted Review (TAR) stopping rule based on reinforcement learning that helps minimise the number of documents that need to be manually reviewed within TAR applications. RLStop is trained on example rankings using a reward function to identify the optimal point to stop examining documents. Experiments at a range of target recall levels on multiple benchmark datasets (CLEF e-Health, TREC Total Recall, and Reuters RCV1) demonstrated that RLStop substantially reduces the workload required to screen a document collection for relevance. RLStop outperforms a wide range of alternative approaches, achieving performance close to the maximum possible for the task under some circumstances.

* Accepted at SIGIR 2024

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge