RGB-D Neural Radiance Fields: Local Sampling for Faster Training

Mar 26, 2022

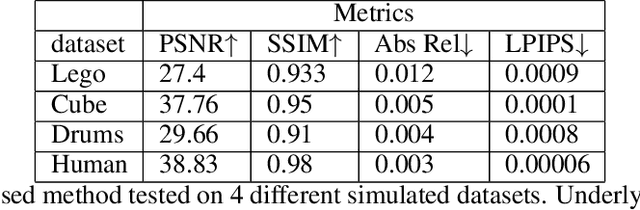

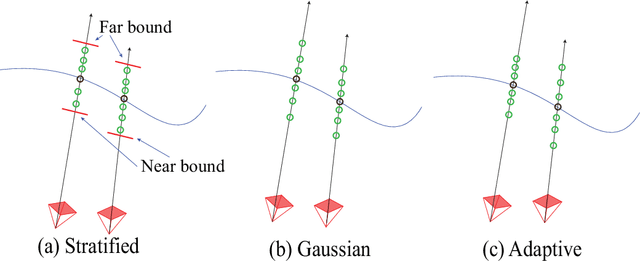

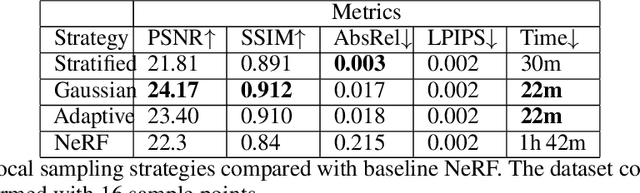

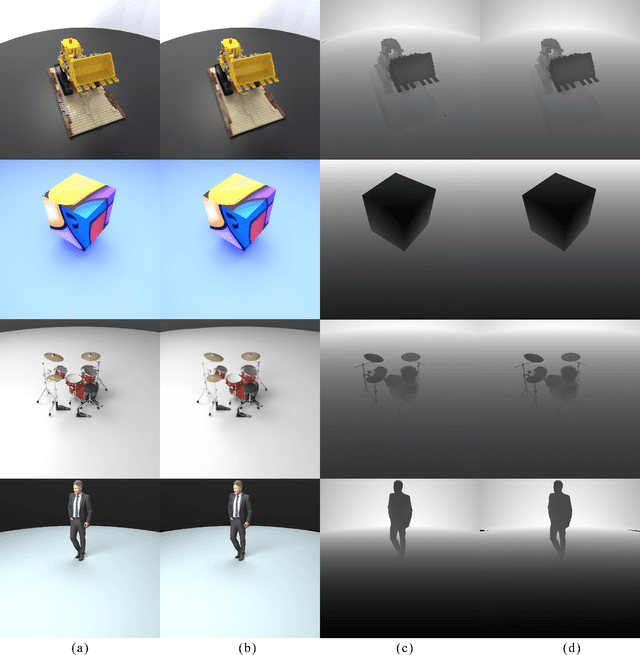

Learning a 3D representation of a scene has been a challenging problem in computer vision for decades. Recent advances in implicit neural representations learned from images using neural radiance fields (NeRF) have shown promising results. Limitations of previous NeRF-based methods include long training times and inaccurate underlying geometry. The proposed method uses RGB-D data to reduce training time, leveraging depth sensing to improve local sampling. This paper proposes a depth-guided local sampling strategy and a smaller neural network architecture to achieve faster training without compromising quality.
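To make the idea of depth-guided local sampling concrete, here is a minimal sketch of how sample positions along a single camera ray might be drawn near a sensor depth reading. It is an illustration under stated assumptions, not the paper's implementation: the function name `sample_depths`, the fixed `half_width` window, the `near`/`far` bounds, and the fallback to full-range stratified sampling when the depth reading is missing are all hypothetical choices made for this example.

```python
import random


def sample_depths(sensor_depth, n_samples=16, half_width=0.1,
                  near=0.1, far=6.0, rng=None):
    """Return sorted sample distances along one ray.

    Assumption: a valid depth reading narrows the sampling interval to
    [depth - half_width, depth + half_width], clipped to [near, far].
    A missing reading (None or <= 0) falls back to the full [near, far] range.
    """
    rng = rng or random.Random()

    lo, hi = near, far
    if sensor_depth is not None and sensor_depth > 0.0:
        local_lo = max(near, sensor_depth - half_width)
        local_hi = min(far, sensor_depth + half_width)
        # Use the local window only if it is non-empty after clipping.
        if local_lo < local_hi:
            lo, hi = local_lo, local_hi

    # Stratified sampling: one uniformly jittered sample per equal-width bin,
    # so the returned list is already in increasing order.
    bin_size = (hi - lo) / n_samples
    return [lo + (i + rng.random()) * bin_size for i in range(n_samples)]


if __name__ == "__main__":
    rng = random.Random(0)
    print(sample_depths(2.0, n_samples=4, rng=rng))   # clustered around 2.0
    print(sample_depths(0.0, n_samples=4, rng=rng))   # spread over [near, far]
```

With a valid depth reading, all samples fall inside a narrow window around the measured surface, so far fewer network evaluations are spent on empty space than with sampling over the whole ray. That is the intuition behind using depth to speed up training.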
