RevUp: Revise and Update Information Bottleneck for Event Representation


May 24, 2022

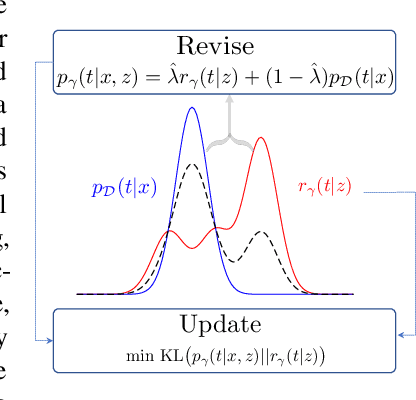

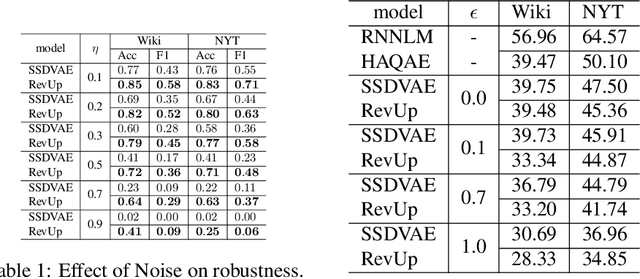

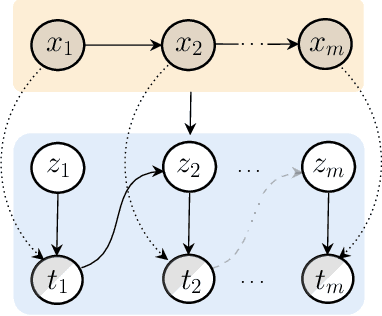

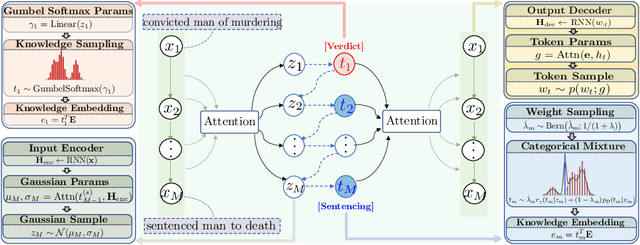

In machine learning, latent variables play a key role in capturing the underlying structure of data, but they are often learned without supervision. When side knowledge is available that already carries high-level information about the input data, that source can be used to guide the latent variables toward the available background information, a process called "parameter injection." To that end, we propose a semi-supervised, information bottleneck-based model that uses side knowledge, even when it is noisy and imperfect, to direct the learning of discrete latent variables. Fundamentally, we introduce an auxiliary continuous latent variable as a way to reparameterize the model's discrete variables with a lightweight hierarchical structure. With this reparameterization, the model's discrete latent variables are learned to minimize the mutual information between the observed data and optional side knowledge that is not already captured by the new auxiliary variables. We show theoretically that our approach generalizes an existing method of parameter injection, and we perform an empirical case study of our approach on language-based event modeling. We corroborate our theoretical results with empirical experiments, showing that the proposed method outperforms previously proposed approaches on multiple datasets.
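To make the idea of guiding a discrete latent variable with side knowledge more concrete, the following is a minimal sketch and not the model proposed in the paper. It assumes a Gumbel-softmax relaxation of the discrete variable (a technique the abstract does not name) and injects side knowledge by boosting the logit of the observed label when one is available. The names `inject_side_knowledge`, `gumbel_softmax`, and the `strength` parameter are hypothetical and chosen only for illustration.

```python
import math
import random


def inject_side_knowledge(logits, side_label, strength):
    """Return a copy of `logits` with the observed label's logit boosted.

    `side_label` is None when no side knowledge is available for this
    example (the semi-supervised case); the logits are then left unchanged.
    This additive scheme is an illustrative assumption.
    """
    out = list(logits)
    if side_label is not None:
        out[side_label] += strength
    return out


def gumbel_softmax(logits, temperature, rng):
    """Draw one relaxed (soft one-hot) sample from a categorical variable."""
    noisy = []
    for logit in logits:
        # Sample Gumbel(0, 1) noise; the bounds keep both logs finite.
        u = rng.uniform(1e-10, 1.0 - 1e-10)
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / temperature)
    # Numerically stable softmax over the perturbed logits.
    m = max(noisy)
    exps = [math.exp(v - m) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
encoder_logits = [0.0, 0.0, 0.0, 0.0]  # stand-in for an encoder's output

# With side knowledge: label 2 is observed, so its logit is boosted.
guided = gumbel_softmax(
    inject_side_knowledge(encoder_logits, side_label=2, strength=50.0),
    temperature=0.5,
    rng=rng,
)

# Without side knowledge: the sample depends only on the encoder logits.
unguided = gumbel_softmax(
    inject_side_knowledge(encoder_logits, side_label=None, strength=50.0),
    temperature=0.5,
    rng=rng,
)
```

With a large `strength`, the guided sample concentrates almost all of its mass on the observed label, while the unguided sample is driven by the encoder logits and noise alone. A softer `strength` would let the model override noisy side knowledge, which is the kind of trade-off the abstract's "noisy and imperfect" setting is concerned with.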
