Reviving Threshold-Moving: a Simple Plug-in Bagging Ensemble for Binary and Multiclass Imbalanced Data

Paper and Code

Jun 20, 2017

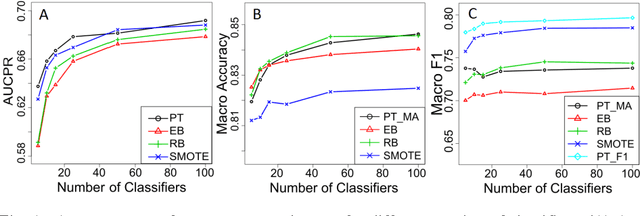

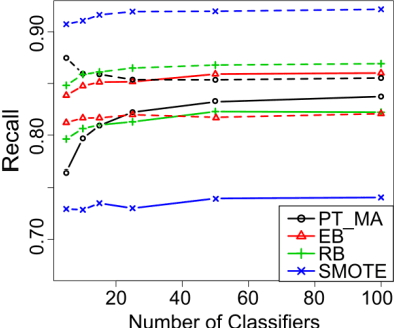

Class imbalance presents a major hurdle in the application of data mining methods. A common practice to deal with it is to create ensembles of classifiers that learn from resampled, balanced data, for example bagged decision trees combined with random undersampling (RUS) or the synthetic minority oversampling technique (SMOTE). However, most resampling methods entail asymmetric changes to the examples of different classes, which in turn can introduce their own biases in the model. Furthermore, those methods require a performance measure to be specified before learning. An alternative is to use a so-called threshold-moving method, which changes the decision threshold of a model a posteriori to counteract the imbalance and thus has the potential to adapt to the performance measure of interest. Surprisingly, little attention has been paid to the potential of combining bagging ensembles with threshold-moving. In this paper, we present probability thresholding bagging (PT-bagging), a versatile plug-in method that fills this gap. Contrary to usual rebalancing practice, our method preserves the natural class distribution of the data, resulting in well-calibrated posterior probabilities. We also extend the proposed method to handle multiclass data. The method is validated on binary and multiclass benchmark data sets. We perform analyses that provide insights into the proposed method.
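The abstract does not spell out the thresholding rule, so the following is only a minimal sketch of the general idea: average the class probabilities produced by ensemble members trained on data with its natural class distribution, then move the decision threshold afterwards. The rule used here, picking the class that maximises posterior divided by class prior, is an assumption chosen for illustration and may differ from the paper's exact procedure. All function names and the toy numbers are hypothetical.

```python
from collections import Counter


def class_priors(labels):
    """Estimate class priors from the unresampled training labels."""
    counts = Counter(labels)
    n = len(labels)
    return {c: counts[c] / n for c in counts}


def average_posteriors(member_probs):
    """Average per-class probabilities over ensemble members for one example."""
    classes = member_probs[0].keys()
    m = len(member_probs)
    return {c: sum(p[c] for p in member_probs) / m for c in classes}


def predict_with_moved_threshold(posterior, priors):
    """Assumed plug-in rule: choose the class maximising posterior / prior.

    In the binary case this is equivalent to predicting the positive class
    whenever its posterior exceeds its prior, instead of exceeding 0.5.
    """
    return max(posterior, key=lambda c: posterior[c] / priors[c])


# Toy imbalanced problem: 90 negatives, 10 positives (made-up numbers).
labels = ["neg"] * 90 + ["pos"] * 10
priors = class_priors(labels)

# Hypothetical probability outputs of three bagged models for one example.
member_probs = [
    {"neg": 0.80, "pos": 0.20},
    {"neg": 0.70, "pos": 0.30},
    {"neg": 0.75, "pos": 0.25},
]
posterior = average_posteriors(member_probs)

plain = max(posterior, key=posterior.get)                # default argmax decision
moved = predict_with_moved_threshold(posterior, priors)  # threshold-moved decision
print(plain, moved)
```

Because the models are never trained on resampled data, the averaged probabilities stay on the scale of the natural class distribution, and the same probabilities can be re-thresholded for a different performance measure without retraining.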
