Revisiting Heterophily in Graph Convolution Networks by Learning Representations Across Topological and Feature Spaces

Paper and Code

Nov 02, 2022

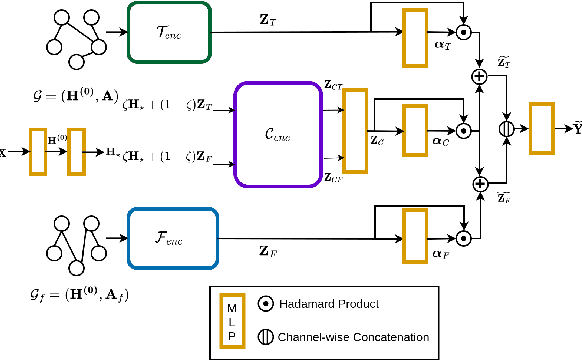

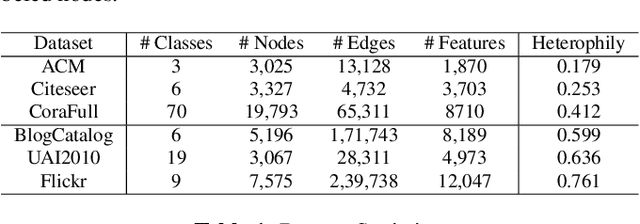

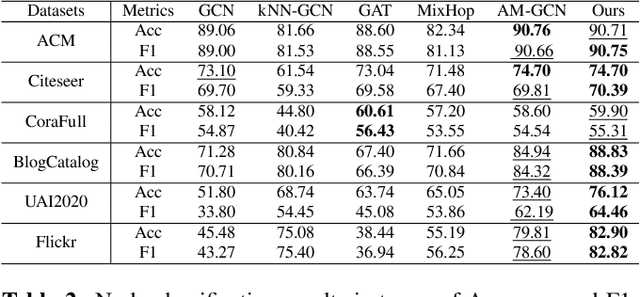

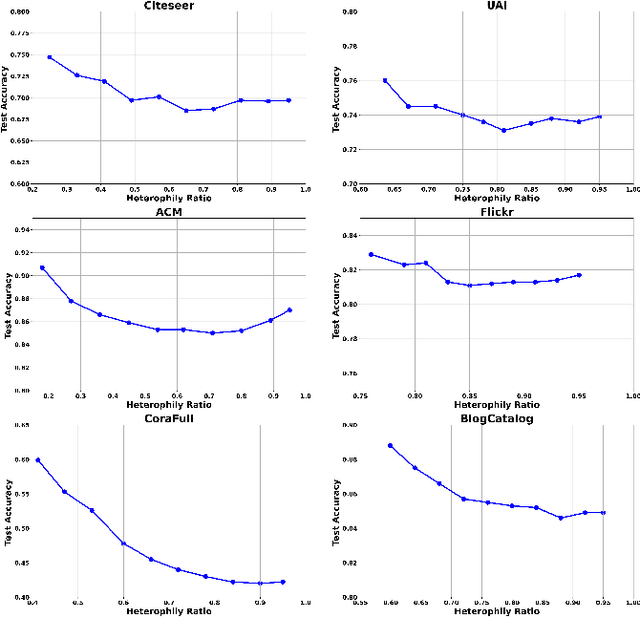

Graph convolution networks (GCNs) have been enormously successful at learning representations for several graph-based machine learning tasks. For learning rich node representations in particular, most methods rely solely on the homophily assumption and show limited performance on heterophilous graphs. While several methods with new architectures have been developed to address heterophily, we argue that GCNs can address heterophily by learning graph representations across two spaces, namely the topological space and the feature space. In this work, we experimentally demonstrate the performance of the proposed GCN framework on the semi-supervised node classification task, on both homophilous and heterophilous graph benchmarks, by learning and combining representations across the topological and feature spaces.
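The abstract does not spell out how the two spaces are built or combined, so the following is only a minimal sketch of one plausible reading, not the authors' implementation. It assumes the feature-space graph is a cosine-similarity k-nearest-neighbour graph, that each space gets one standard GCN propagation step with its own weights, and that the two outputs are combined by concatenation. The names `knn_feature_graph`, `two_space_layer`, `w_topo`, `w_feat`, and `k` are all hypothetical.

```python
import numpy as np


def normalize_adj(adj):
    """Symmetric GCN normalization: D^{-1/2} (A + I) D^{-1/2}."""
    a_hat = adj + np.eye(adj.shape[0])
    deg = a_hat.sum(axis=1)  # >= 1 for every node because of the self-loop
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def knn_feature_graph(x, k):
    """Assumed construction: symmetric kNN graph under cosine similarity."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    unit = x / np.maximum(norms, 1e-12)
    sim = unit @ unit.T
    np.fill_diagonal(sim, -np.inf)  # a node is never its own neighbour
    nbrs = np.argsort(-sim, axis=1)[:, :k]  # indices of the k most similar nodes
    adj = np.zeros((x.shape[0], x.shape[0]))
    rows = np.repeat(np.arange(x.shape[0]), k)
    adj[rows, nbrs.ravel()] = 1.0
    return np.maximum(adj, adj.T)  # symmetrize


def two_space_layer(adj_topo, x, w_topo, w_feat, k=2):
    """One propagation step per space, then concatenation (assumed combiner)."""
    # Topological space: propagate over the given graph structure.
    h_topo = np.maximum(normalize_adj(adj_topo) @ x @ w_topo, 0.0)
    # Feature space: propagate over a graph derived from node features only.
    adj_feat = knn_feature_graph(x, k)
    h_feat = np.maximum(normalize_adj(adj_feat) @ x @ w_feat, 0.0)
    return np.concatenate([h_topo, h_feat], axis=1)


# Toy usage: a 4-node path graph with random 3-dimensional features.
rng = np.random.default_rng(0)
adj = np.array(
    [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]], dtype=float
)
x = rng.normal(size=(4, 3))
h = two_space_layer(adj, x, rng.normal(size=(3, 2)), rng.normal(size=(3, 2)))
print(h.shape)  # (4, 4)
```

The intuition behind this kind of design is that on a heterophilous graph, neighbours in the given topology often carry different labels, while neighbours in a feature-similarity graph may still share them, so a classifier that sees both representations can lean on whichever space is more informative.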
