Retrieval-Augmented Diffusion Models

Apr 26, 2022

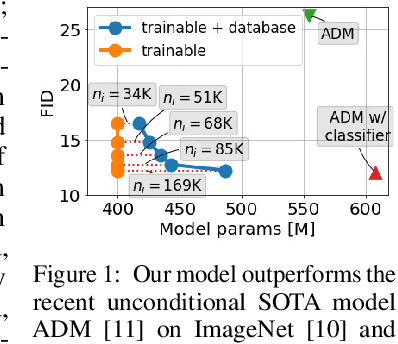

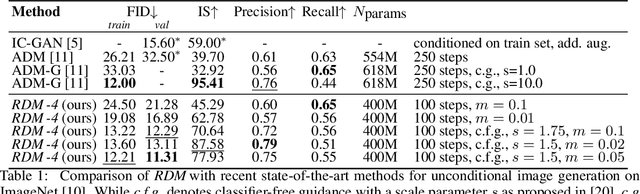

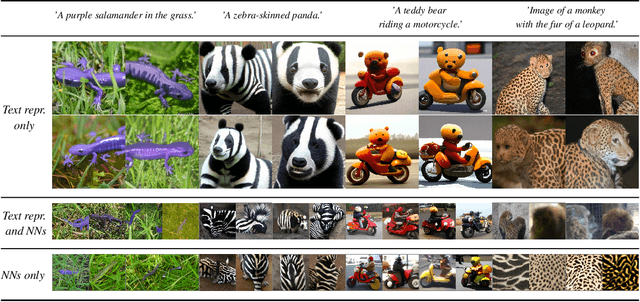

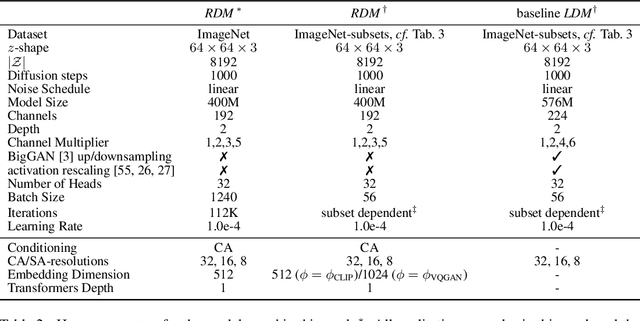

Generative image synthesis with diffusion models has recently achieved excellent visual quality in several tasks such as text-based or class-conditional image synthesis. Much of this success is due to a dramatic increase in the computational capacity invested in training these models. This work presents an alternative approach: inspired by the successful application of retrieval in natural language processing, we propose to complement the diffusion model with a retrieval-based approach and to introduce an explicit memory in the form of an external database. During training, our diffusion model receives similar visual features, retrieved via CLIP from the neighborhood of each training instance. By leveraging CLIP's joint image-text embedding space, our model achieves highly competitive performance on tasks for which it has not been explicitly trained, such as class-conditional or text-image synthesis, and can be conditioned on both text and image embeddings. Moreover, we can apply our approach to unconditional generation, where it achieves state-of-the-art performance. Our approach incurs low computational and memory overheads and is easy to implement. We discuss its relationship to concurrent work and will publish code and pretrained models soon.
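The retrieval step described in the abstract can be illustrated with a minimal sketch. It assumes the external database is a matrix of precomputed embeddings and that neighbors are found by brute-force cosine similarity. Random vectors stand in for CLIP embeddings here, and the names `l2_normalize` and `retrieve_neighbors` are hypothetical rather than taken from the authors' code. How the retrieved features condition the diffusion model is not shown.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length so dot products equal cosine similarity."""
    norm = np.linalg.norm(x, axis=axis, keepdims=True)
    return x / np.maximum(norm, eps)


def retrieve_neighbors(query, database, k):
    """Return indices and embeddings of the k database rows closest to `query`.

    Brute-force cosine-similarity search; an assumption for illustration,
    not necessarily the search procedure used in the paper.
    """
    q = l2_normalize(query)
    db = l2_normalize(database)
    sims = db @ q                    # cosine similarity to every database row
    top = np.argsort(-sims)[:k]      # indices of the k most similar rows
    return top, database[top]


# Stand-in data: random vectors in place of real CLIP embeddings (assumption).
rng = np.random.default_rng(0)
database = rng.normal(size=(1000, 512))
query = database[42] + 0.01 * rng.normal(size=512)  # near-copy of row 42

idx, neighbors = retrieve_neighbors(query, database, k=4)
```

In this toy setup the query is a slightly perturbed copy of one database row, so that row is returned as the nearest neighbor. Because CLIP embeds text and images in a joint space, the same lookup could in principle take a text embedding as the query, which is the property the abstract relies on for text-conditional synthesis.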
