Rethinking Intersection Over Union for Small Object Detection in Few-Shot Regime

Jul 17, 2023

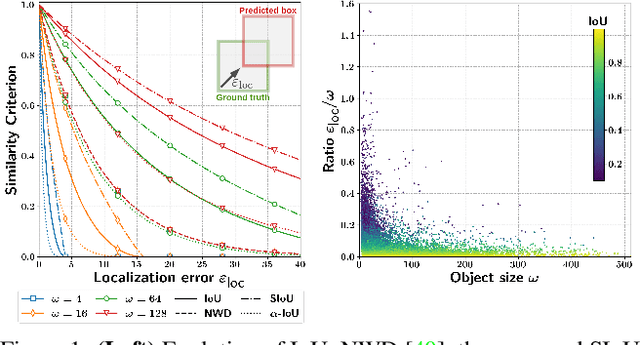

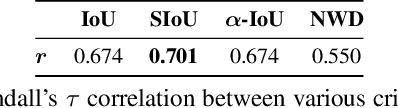

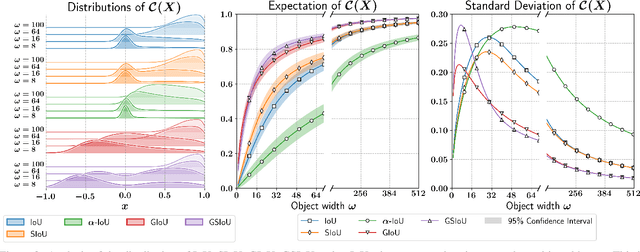

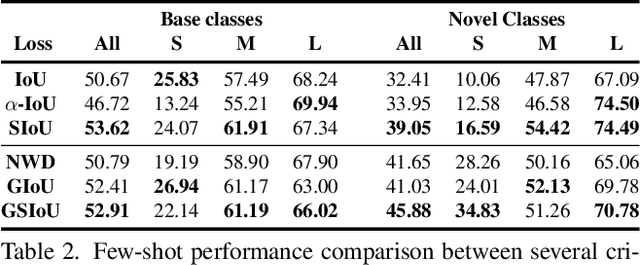

In Few-Shot Object Detection (FSOD), detecting small objects is extremely difficult. The limited supervision cripples the localization capabilities of the models, and for small objects a shift of only a few pixels can dramatically reduce the Intersection over Union (IoU) between the ground-truth and predicted boxes. To address this, we propose Scale-adaptive Intersection over Union (SIoU), a novel box similarity measure. SIoU changes with the objects' size and is more lenient with shifts of small objects. In a user study we conducted, SIoU aligned better with human judgment than IoU did. Employing SIoU as an evaluation criterion therefore helps to build more user-oriented models. SIoU can also be used as a loss function to prioritize small objects during training, where it outperforms existing loss functions. SIoU also improves small object detection in the non-few-shot regime, but that setting is unrealistic in industry, as annotated detection datasets are often too expensive to acquire. Hence, our experiments mainly focus on the few-shot regime to demonstrate the superiority and versatility of the SIoU loss. SIoU significantly improves FSOD performance on small objects in both natural images (Pascal VOC and COCO datasets) and aerial images (DOTA and DIOR). In aerial imagery, where small objects are critical, the SIoU loss achieves new state-of-the-art FSOD results on DOTA and DIOR.
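To make the sensitivity of IoU to small shifts concrete, the following minimal sketch computes plain IoU for a square box and a copy of it shifted diagonally by a few pixels. It illustrates standard IoU only, not SIoU, whose definition is not given in this abstract. The `(x1, y1, x2, y2)` box format, the continuous-coordinate area convention, and the helper name `shifted_iou` are assumptions made for illustration.

```python
def iou(box_a, box_b):
    """Plain IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def shifted_iou(size, shift):
    """IoU between a size x size box and the same box shifted diagonally.

    Hypothetical helper for this illustration: the "prediction" is the
    ground-truth box moved by `shift` pixels along both axes.
    """
    ground_truth = (0, 0, size, size)
    prediction = (shift, shift, size + shift, size + shift)
    return iou(ground_truth, prediction)


if __name__ == "__main__":
    # The same 3-pixel shift applied to a small and a large object.
    for size in (10, 100):
        print(f"{size}x{size} box, 3 px shift: IoU = {shifted_iou(size, 3):.2f}")
```

Under these assumptions, a 3-pixel diagonal shift leaves a 100x100 box with an IoU of about 0.89, while the same shift on a 10x10 box gives about 0.32, which is below the commonly used 0.5 matching threshold.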
