Rethinking Drone-Based Search and Rescue with Aerial Person Detection


Nov 17, 2021

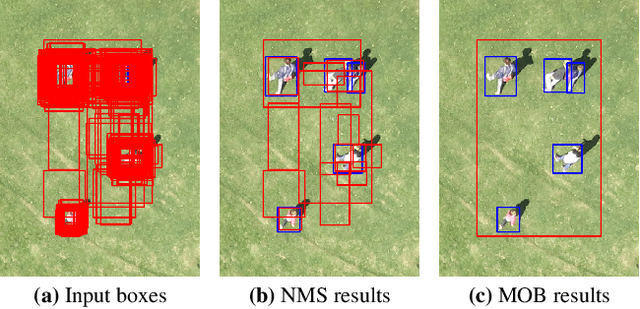

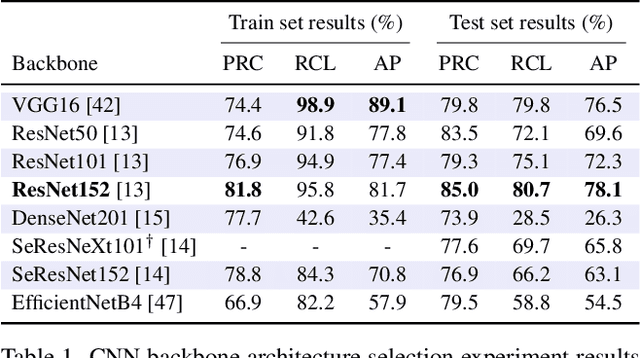

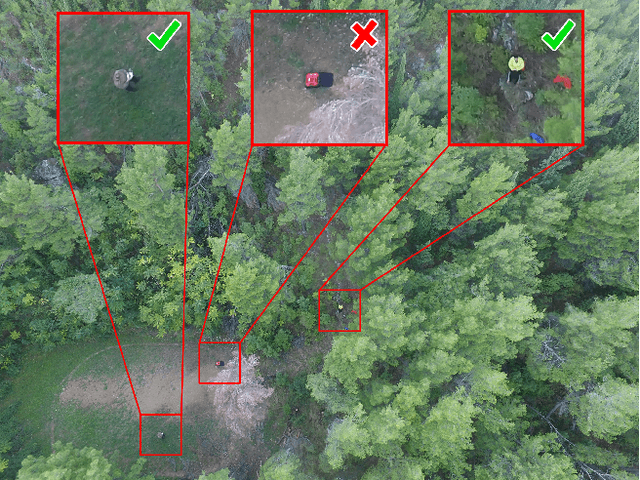

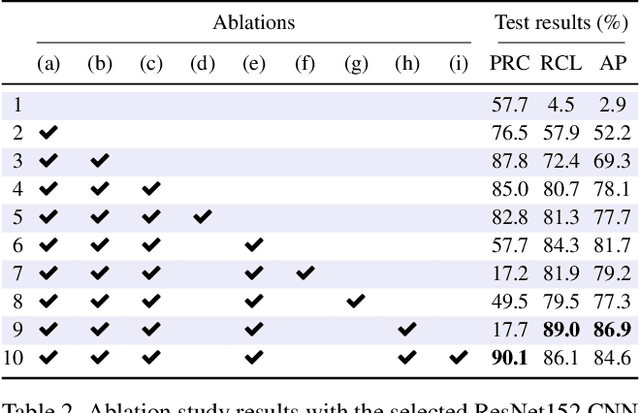

Visual inspection of aerial drone footage is an integral part of land search and rescue (SAR) operations today. Because this inspection is slow, tedious and error-prone for humans, we propose a novel deep learning algorithm to automate the aerial person detection (APD) task. We experiment with model architecture selection, online data augmentation, transfer learning, image tiling and several other techniques to improve the test performance of our method, and we present the combination of these contributions as the novel Aerial Inspection RetinaNet (AIR) algorithm. The AIR detector demonstrates state-of-the-art performance on a commonly used SAR test data set in terms of both precision (~21 percentage point increase) and speed. In addition, we provide a new formal definition of the APD problem in SAR missions: a novel evaluation scheme that ranks detectors in terms of real-world SAR localization requirements. Finally, we propose a novel postprocessing method for robust, approximate object localization: the merging of overlapping bounding boxes (MOB) algorithm. This final processing stage of the AIR detector significantly improves its performance and usability in real-world aerial SAR missions.
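The abstract names the MOB postprocessing step but does not spell out its details, so the following is only a minimal sketch of the general idea of merging overlapping bounding boxes, not the paper's actual algorithm. It assumes axis-aligned boxes given as `(x1, y1, x2, y2)`, treats any positive-area intersection as "overlapping", and replaces each connected group of overlapping boxes with their enclosing box. The names `boxes_overlap` and `merge_overlapping_boxes` are hypothetical.

```python
from typing import Dict, List, Tuple

# Assumed box format: (x1, y1, x2, y2) with x1 < x2 and y1 < y2.
Box = Tuple[float, float, float, float]


def boxes_overlap(a: Box, b: Box) -> bool:
    """Return True if the two boxes share a region of positive area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def merge_overlapping_boxes(boxes: List[Box]) -> List[Box]:
    """Replace each group of (transitively) overlapping boxes with one enclosing box."""
    parent = list(range(len(boxes)))

    def find(i: int) -> int:
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Link every pair of overlapping input boxes into the same group.
    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            if boxes_overlap(boxes[i], boxes[j]):
                parent[find(j)] = find(i)

    # Collect the members of each group.
    groups: Dict[int, List[Box]] = {}
    for i, box in enumerate(boxes):
        groups.setdefault(find(i), []).append(box)

    # Emit the smallest box that encloses all members of each group.
    return [
        (
            min(b[0] for b in group),
            min(b[1] for b in group),
            max(b[2] for b in group),
            max(b[3] for b in group),
        )
        for group in groups.values()
    ]


if __name__ == "__main__":
    detections = [(0, 0, 10, 10), (5, 5, 15, 15), (100, 100, 110, 110)]
    print(merge_overlapping_boxes(detections))
```

This sketch groups the input boxes in a single pass, so an enclosing box may still overlap a neighbouring group's enclosing box. A real detector pipeline would also need to decide how to combine confidence scores, which is left out here. The approach fits the abstract's goal of approximate localization: a search team needs a region to inspect, not a tight box around each person.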
