Reservoir Computing and Extreme Learning Machines using Pairs of Cellular Automata Rules

Paper and Code

Mar 16, 2017

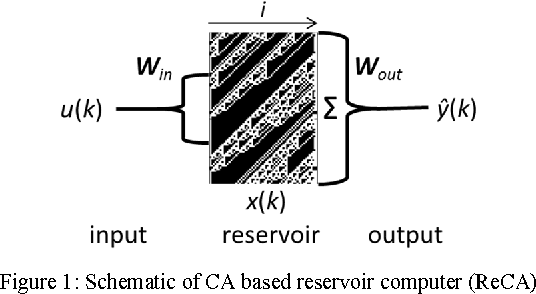

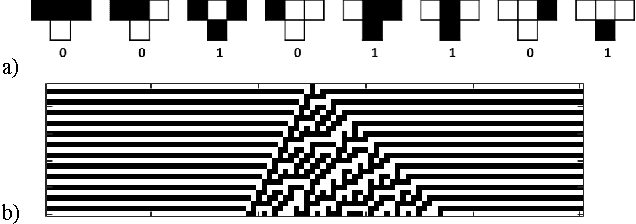

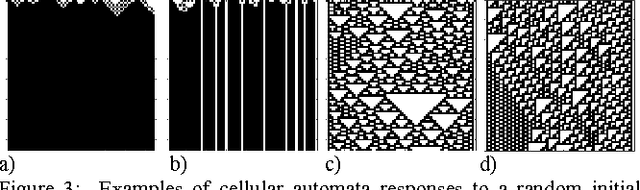

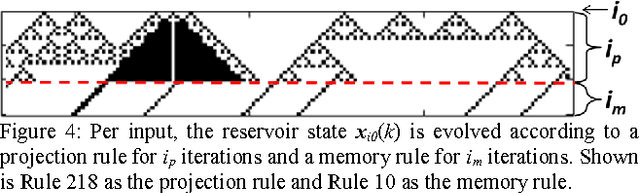

A framework is presented for implementing reservoir computing (RC) and extreme learning machines (ELMs), two types of artificial neural networks, based on 1D elementary cellular automata (CAs). In this framework, two separate CA rules explicitly implement the minimum computational requirements of the reservoir layer: hyperdimensional projection and short-term memory. CAs are cell-based state machines that evolve in time according to local rules based on a cell's current state and the states of its neighbors. Notably, using a simple single-cell shift rule as the memory rule in a fixed-edge CA afforded reasonable success in conjunction with a variety of projection rules, potentially significantly reducing the search space for an optimal solution. Optimal iteration counts for the CA rule pairs can be estimated for some tasks based on the category of the projection rule. Initial results support future hardware realization, where CAs potentially afford orders-of-magnitude reductions in size, weight, and power (SWaP) requirements compared with floating-point RC implementations.
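To make the rule-pair idea concrete, the following is a minimal sketch of a 1D elementary CA with fixed edges, driven first by a projection rule and then by a shift rule acting as memory. It is not the authors' implementation. The function names `eca_step` and `reservoir_features`, the reading of "fixed edge" as boundary neighbors held at a constant 0, and the choice to run the projection rule before the memory rule and concatenate every intermediate state are all assumptions made for illustration. Rule numbers follow the standard Wolfram convention, under which rules 240 and 170 are the single-cell right and left shifts.

```python
import numpy as np


def eca_step(state, rule, edge=0):
    """Advance a 1D elementary CA by one step.

    Assumption: "fixed edge" means the neighbors beyond both ends of the
    lattice are held at the constant value `edge`.
    """
    state = np.asarray(state, dtype=np.int64)
    padded = np.concatenate(([edge], state, [edge]))
    left, center, right = padded[:-2], padded[1:-1], padded[2:]
    # Wolfram numbering: the neighborhood (left, center, right) read as a
    # 3-bit number selects which bit of the rule is the next cell state.
    index = 4 * left + 2 * center + right
    table = (rule >> np.arange(8)) & 1
    return table[index]


def reservoir_features(x, projection_rule, memory_rule, n_proj, n_mem):
    """Hypothetical rule-pair reservoir: iterate the projection rule, then
    the memory rule, and concatenate all visited states into one vector."""
    state = np.asarray(x, dtype=np.int64)
    history = []
    for _ in range(n_proj):
        state = eca_step(state, projection_rule)
        history.append(state)
    for _ in range(n_mem):
        state = eca_step(state, memory_rule)
        history.append(state)
    return np.concatenate(history)


if __name__ == "__main__":
    # Rule 90 (XOR of the two neighbors) as an example projection rule,
    # rule 240 (right shift) as the memory rule.
    print(reservoir_features([0, 1, 0], 90, 240, n_proj=1, n_mem=1))
```

In an ELM or RC setting, the concatenated binary vector would feed a trained linear readout. The readout is omitted here because the abstract does not describe it.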
