RES-SE-NET: Boosting Performance of Resnets by Enhancing Bridge-connections


Feb 16, 2019

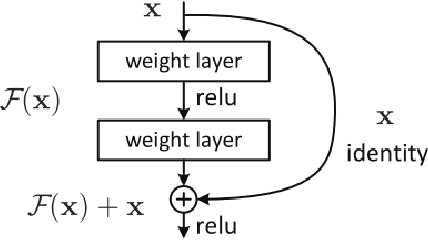

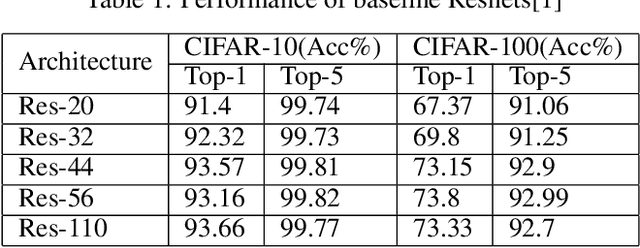

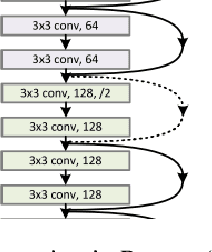

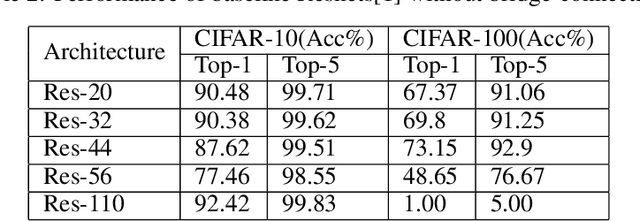

One way to train deep neural networks effectively is to use residual connections. A residual connection is either an identity connection or a bridge-connection with a reshaping convolution. Experiments on the CIFAR-10 and CIFAR-100 datasets with a baseline Resnet model from which the bridge-connections were removed show a significant reduction in accuracy. This reduction is due to the loss of the feature maps that the bridge-connections contribute, so bridge-connections are vital for Resnet. However, a standard Resnet treats all feature maps in a bridge-connection as equally important. In this work, an upgraded architecture, "Res-SE-Net", is proposed to further strengthen the contribution of the bridge-connections by quantifying the importance of each feature map and weighting the maps accordingly using a Squeeze-and-Excitation (SE) block. It is demonstrated that Res-SE-Net generalizes much better than Resnet and SE-Resnet on the benchmark CIFAR-10 and CIFAR-100 datasets.
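The weighting step described in the abstract can be illustrated with a minimal pure-Python sketch of a Squeeze-and-Excitation block applied to the feature maps of a bridge-connection. This is not the authors' implementation: the function names (`squeeze`, `excite`, `se_reweight`), the bias-free layers, the single hidden unit, and the weight values are all assumptions made for illustration, and the output of the reshaping convolution is taken as a given input rather than computed.

```python
import math

def squeeze(feature_maps):
    """Squeeze: global average pooling, one scalar per feature map (channel)."""
    return [sum(sum(row) for row in fm) / (len(fm) * len(fm[0]))
            for fm in feature_maps]

def dense(vec, weights):
    """Bias-free fully connected layer; weights[i] is the row for output i."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]

def excite(z, w_reduce, w_expand):
    """Excitation: bottleneck MLP with ReLU, then a sigmoid gate per channel."""
    hidden = [max(0.0, h) for h in dense(z, w_reduce)]
    return [1.0 / (1.0 + math.exp(-s)) for s in dense(hidden, w_expand)]

def se_reweight(feature_maps, w_reduce, w_expand):
    """Scale each feature map by its learned importance in (0, 1)."""
    scales = excite(squeeze(feature_maps), w_reduce, w_expand)
    return [[[s * x for x in row] for row in fm]
            for s, fm in zip(scales, feature_maps)]

def add_maps(a, b):
    """Element-wise sum of two stacks of feature maps (the residual addition)."""
    return [[[x + y for x, y in zip(ra, rb)] for ra, rb in zip(fa, fb)]
            for fa, fb in zip(a, b)]

# Hypothetical inputs: two 2x2 feature maps from a bridge-connection
# (assumed to be the output of its reshaping convolution) and the
# output of the block's main branch with the same shape.
bridge = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
main_branch = [[[0.1, 0.2], [0.3, 0.4]], [[0.5, 0.6], [0.7, 0.8]]]

# Hypothetical weights: 2 channels -> 1 hidden unit -> 2 channels.
w_reduce = [[0.5, 0.5]]
w_expand = [[1.0], [-1.0]]

weighted_bridge = se_reweight(bridge, w_reduce, w_expand)
block_output = add_maps(main_branch, weighted_bridge)
```

With these illustrative weights the first feature map receives a gate above 0.5 and the second a gate below 0.5, so the two maps no longer contribute equally to the sum. In a trained network the two weight matrices would be learned jointly with the rest of the model.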
