Relating Misfit to Gain in Weak-to-Strong Generalization Beyond the Squared Loss

Paper and Code

Jan 31, 2025

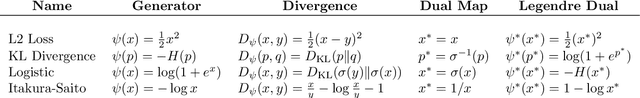

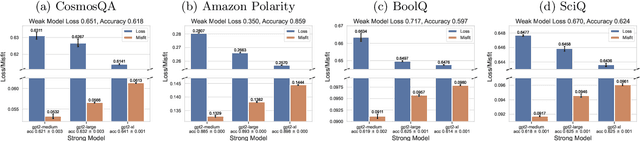

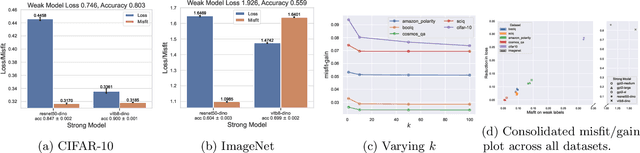

Weak-to-strong generalization is the paradigm of training a strong AI model on data labeled by a weak AI model, with the goal that the strong model nevertheless outperforms its weak supervisor on the target task. For real-valued regression with the squared loss, recent work quantitatively characterizes the gain in performance of the strong model over the weak model in terms of the misfit between the strong and weak models. We generalize this characterization to learning tasks whose loss functions correspond to arbitrary Bregman divergences, under the assumption that the strong class is convex. This extends the misfit-based characterization of performance gain in weak-to-strong generalization to classification tasks, since the cross-entropy loss can be expressed in terms of a Bregman divergence. In most practical scenarios, however, the strong model class may not be convex. We therefore weaken this assumption and study weak-to-strong generalization for convex combinations of $k$ strong models in the strong class, in the concrete setting of classification. This yields a similar misfit-based characterization of performance gain, up to an additional error term that vanishes as $k$ grows. Our theoretical findings are supported by thorough experiments on synthetic as well as real-world datasets.
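
The link between the cross-entropy loss and Bregman divergences mentioned in the abstract can be checked numerically. The following is a minimal sketch, not code from the paper: it uses the standard definition $D_\phi(p, q) = \phi(p) - \phi(q) - \langle \nabla\phi(q), p - q \rangle$ and assumes that $p$ and $q$ are probability vectors with strictly positive entries. All function names (`bregman`, `neg_entropy`, `sq_norm`, and so on) are illustrative choices made here. With $\phi$ the negative entropy, the divergence equals the KL divergence, and the cross-entropy differs from it only by the entropy of $p$. With $\phi(x) = \|x\|^2$, it equals the squared Euclidean distance, which corresponds to the squared-loss setting.

```python
import math

def bregman(phi, grad_phi, p, q):
    """Bregman divergence D_phi(p, q) = phi(p) - phi(q) - <grad phi(q), p - q>."""
    inner = sum(g * (pi - qi) for g, pi, qi in zip(grad_phi(q), p, q))
    return phi(p) - phi(q) - inner

# Generator for the KL divergence: negative Shannon entropy.
def neg_entropy(p):
    return sum(pi * math.log(pi) for pi in p)

def grad_neg_entropy(p):
    return [math.log(pi) + 1.0 for pi in p]

# Generator for the squared Euclidean distance: squared norm.
def sq_norm(x):
    return sum(xi * xi for xi in x)

def grad_sq_norm(x):
    return [2.0 * xi for xi in x]

def kl(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

def cross_entropy(p, q):
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q))

# Illustrative probability vectors (assumed values, strictly positive).
p = [0.7, 0.2, 0.1]
q = [0.5, 0.3, 0.2]

d_kl = bregman(neg_entropy, grad_neg_entropy, p, q)
d_sq = bregman(sq_norm, grad_sq_norm, p, q)
entropy_p = -neg_entropy(p)

print("Bregman (neg. entropy):", d_kl, " KL:", kl(p, q))
print("Cross-entropy:", cross_entropy(p, q), " KL + H(p):", d_kl + entropy_p)
print("Bregman (sq. norm):", d_sq)
```

Because the entropy of $p$ does not depend on $q$, minimizing the cross-entropy over $q$ is equivalent to minimizing this Bregman divergence over $q$.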
