Relating CNNs with brain: Challenges and findings


Aug 22, 2021

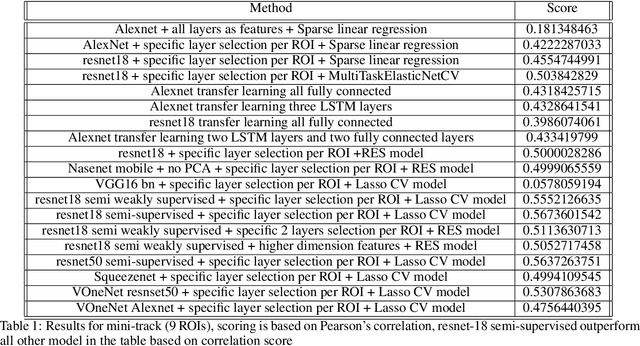

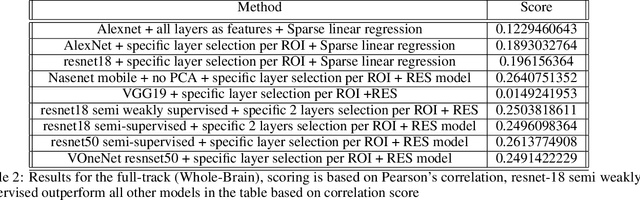

Convolutional neural network (CNN) models, loosely inspired by the primate visual system, have been shown to predict neural responses in the visual cortex. However, the relationship between CNNs and the visual system remains incomplete for several reasons. On the one hand, state-of-the-art CNN architectures are very complex, yet they can be fooled by imperceptibly small, explicitly crafted perturbations. This makes it difficult to map the layers of the network onto the visual system and to understand what those layers are doing. On the other hand, we do not know the exact mapping between the feature space of CNNs and the spatial domain of the visual cortex, which makes it hard to predict neural responses accurately. In this paper, we review the challenges and the methods that have been used to predict neural responses in the visual cortex and the whole brain as part of The Algonauts Project 2021 Challenge: "How the Human Brain Makes Sense of a World in Motion".
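The abstract does not say which mapping the reviewed methods use. A common choice in encoding-model work of this kind is a linear (ridge) regression from a CNN layer's activations to each voxel's response, scored on held-out stimuli by per-voxel Pearson correlation. The sketch below illustrates that idea under stated assumptions. The helper names `fit_ridge`, `predict` and `voxelwise_correlation` are hypothetical, and synthetic random data stands in for real CNN activations and fMRI recordings. It is not the paper's or the challenge's official pipeline.

```python
import numpy as np


def fit_ridge(features, responses, alpha=1.0):
    """Fit a linear encoding model with closed-form ridge regression.

    features:  (n_stimuli, n_features) CNN activations, one row per stimulus
    responses: (n_stimuli, n_voxels) measured responses, one column per voxel
    Returns (weights, feature_mean, response_mean); the means act as an
    implicit intercept when the model is applied to new stimuli.
    """
    x_mean = features.mean(axis=0)
    y_mean = responses.mean(axis=0)
    xc = features - x_mean
    yc = responses - y_mean
    # Solve (X^T X + alpha * I) W = X^T Y rather than forming an explicit inverse.
    gram = xc.T @ xc + alpha * np.eye(xc.shape[1])
    weights = np.linalg.solve(gram, xc.T @ yc)
    return weights, x_mean, y_mean


def predict(model, features):
    """Map new CNN features to predicted voxel responses."""
    weights, x_mean, y_mean = model
    return (features - x_mean) @ weights + y_mean


def voxelwise_correlation(predicted, measured):
    """Pearson correlation between predicted and measured responses, per voxel (column)."""
    p = predicted - predicted.mean(axis=0)
    m = measured - measured.mean(axis=0)
    return (p * m).sum(axis=0) / np.sqrt((p ** 2).sum(axis=0) * (m ** 2).sum(axis=0))


# Synthetic stand-in data (assumption): random "CNN features" and noisy linear "voxel responses".
rng = np.random.default_rng(0)
n_train, n_test, n_features, n_voxels = 200, 50, 64, 10
true_weights = rng.normal(size=(n_features, n_voxels))
X = rng.normal(size=(n_train + n_test, n_features))
Y = X @ true_weights + rng.normal(size=(n_train + n_test, n_voxels))

# Fit on the training stimuli, then score predictions on the held-out stimuli.
model = fit_ridge(X[:n_train], Y[:n_train], alpha=1.0)
scores = voxelwise_correlation(predict(model, X[n_train:]), Y[n_train:])
print("mean held-out correlation:", scores.mean())
```

With real data, the regularization strength `alpha` would typically be chosen per voxel or per region by cross-validation, and the result depends heavily on which CNN layer supplies the features. Using `np.linalg.solve` instead of inverting the Gram matrix is simply the numerically safer way to compute the same closed-form solution.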
