Reinforcement Learning for Adaptive Traffic Signal Control: Turn-Based and Time-Based Approaches to Reduce Congestion


Sep 01, 2024

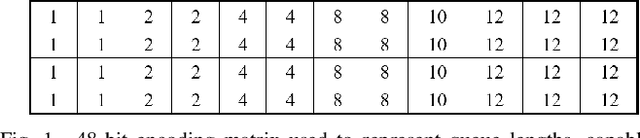

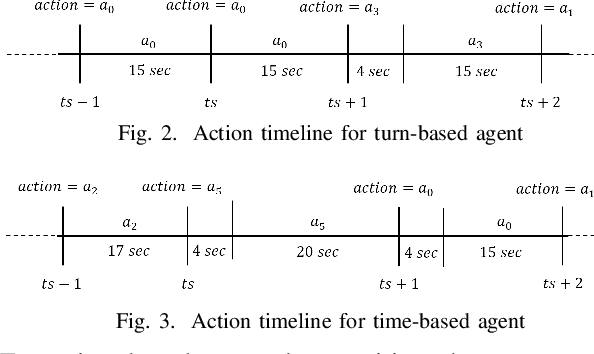

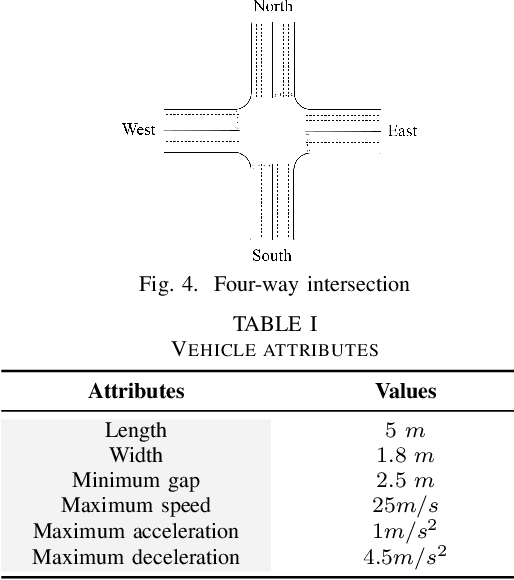

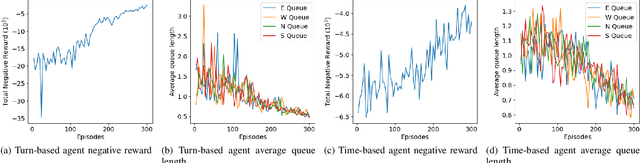

The growing demand for road use in urban areas has led to significant traffic congestion, posing challenges that are costly to mitigate through infrastructure expansion alone. As an alternative, optimizing existing traffic management systems, particularly through adaptive traffic signal control, offers a promising solution. This paper explores the use of Reinforcement Learning (RL) to enhance traffic signal operations at intersections, aiming to reduce congestion without extensive sensor networks. We introduce two RL-based algorithms: a turn-based agent, which dynamically prioritizes traffic signals based on real-time queue lengths, and a time-based agent, which adjusts signal phase durations according to traffic conditions while following a fixed phase cycle. By representing the state as a scalar queue length, our approach simplifies the learning process and lowers deployment costs. The algorithms were tested in four distinct traffic scenarios using seven evaluation metrics to comprehensively assess performance. Simulation results demonstrate that both RL algorithms significantly outperform conventional traffic signal control systems, highlighting their potential to improve urban traffic flow efficiently.
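To make the time-based idea concrete, here is a minimal sketch of an agent that follows a fixed phase cycle and learns how long to hold each green, using only a scalar queue length as its state. It is not the paper's implementation: the tabular Q-learning update, the candidate durations, the reward (negative total queue), the state cap, and the toy arrival and discharge model are all assumptions chosen for illustration, and the names `step` and `train` are hypothetical.

```python
import random
from collections import defaultdict

# All constants below are illustrative assumptions, not values from the paper.
DURATIONS = [5, 10, 20]  # candidate green times in seconds
MAX_STATE = 20           # cap on the scalar queue-length state
ARRIVAL_P = 0.1          # per-second arrival probability on each approach
DISCHARGE = 0.5          # vehicles served per second of green


def step(queues, phase, duration, rng):
    """Run one green phase on a toy intersection and return the reward."""
    # Serve the approach that currently has green.
    served = min(queues[phase], int(duration * DISCHARGE))
    queues[phase] -= served
    # New vehicles arrive on every approach while the phase runs.
    for i in range(len(queues)):
        queues[i] += sum(rng.random() < ARRIVAL_P for _ in range(duration))
    # Assumed reward: fewer queued vehicles is better.
    return -sum(queues)


def train(episodes=200, phases_per_episode=50,
          alpha=0.1, gamma=0.9, eps=0.1, seed=0):
    """Tabular Q-learning over (queue length of the active approach, duration)."""
    rng = random.Random(seed)
    q = defaultdict(lambda: [0.0] * len(DURATIONS))
    for _ in range(episodes):
        queues = [0, 0, 0, 0]
        phase = 0
        state = min(queues[phase], MAX_STATE)
        for _ in range(phases_per_episode):
            # Epsilon-greedy choice of green duration.
            if rng.random() < eps:
                a = rng.randrange(len(DURATIONS))
            else:
                a = max(range(len(DURATIONS)), key=lambda i: q[state][i])
            reward = step(queues, phase, DURATIONS[a], rng)
            # Fixed cycle: the next approach always gets the next green.
            phase = (phase + 1) % len(queues)
            next_state = min(queues[phase], MAX_STATE)
            # Standard one-step Q-learning update.
            q[state][a] += alpha * (
                reward + gamma * max(q[next_state]) - q[state][a]
            )
            state = next_state
    return q


if __name__ == "__main__":
    table = train()
    for s in sorted(table):
        best = max(range(len(DURATIONS)), key=lambda i: table[s][i])
        print(f"queue={s:2d} -> green {DURATIONS[best]} s")
```

A turn-based variant could reuse the same loop but let the action choose which approach receives the next green rather than how long the green lasts; how the paper actually encodes state and reward for that agent is not stated in the abstract.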
